A Small Image Classification System with PyQt5 + ResNet18


1. Introduction to the ResNet18 Network

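ResNet (Residual Neural Network) was proposed by Kaiming He and three other Chinese researchers at Microsoft Research. Using residual units, they trained a 152-layer neural network and won ILSVRC 2015 with a top-5 error rate of 3.57%, while using fewer parameters than VGGNet. The ResNet structure can greatly speed up the training of deep networks and noticeably improves accuracy. The idea also generalizes very well: it has even been combined directly with Inception networks (Inception-ResNet).

Looking at ResNet in more detail, its main highlights are:

- It proposes the residual structure and uses it to build ultra-deep networks (more than 1,000 layers).
- It uses Batch Normalization to speed up training (and drops dropout).

Before ResNet, conventional convolutional neural networks were built by stacking a series of convolutional and downsampling layers. Once the stack reaches a certain depth, two problems appear:

- vanishing or exploding gradients;
- the degradation problem.

The ResNet paper states that data preprocessing and BN (Batch Normalization) layers in the network can solve the vanishing/exploding gradient problem; BN is generally placed before the ReLU activation. The paper also proposes the residual structure to alleviate the degradation problem. The figure below shows convolutional networks that use the residual structure: as the network gets deeper, the results do not get worse but actually improve.

What does "residual" refer to? ResNet uses two kinds of mapping:

- identity mapping: the "curved line" in the figure below;
- residual mapping: everything except the "curved line".

So the final output is: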

$$y = F(x) + x$$

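Identity mapping, as the name suggests, refers to the input itself, i.e. the $x$ in the formula, while the residual mapping refers to the "difference", i.e. the $F(x)$ part.

The figure below shows the two residual structures given in the paper. The left one is used for shallower networks such as ResNet18 and ResNet34; the right one is used for deeper networks such as ResNet101 and ResNet152. Why do deep networks use the right-hand structure? Because it reduces the number of parameters and the amount of computation. For the same input feature map with 256 channels, the left structure needs about 1,179,648 parameters, while the right structure needs only 69,632 (counting convolution weights only). Clearly, the right-hand structure is the better choice for building deep networks.

The `self.shortcut` part of the code implements the identity mapping, whose output is added to that of the residual mapping. As the right half of the figure above shows, in the dashed case either stride != 1 or in_channel (64) differs from out_channel (128); whenever this condition holds, `self.shortcut` applies a 1x1 convolution plus BN so that both branches produce the same shape.

Next, let's analyze the residual structure used by ResNet50/101/152, shown in the figure below. Its main branch uses three convolutional layers: the first is a 1x1 convolution that compresses the channel dimension, the second is a 3x3 convolution, and the third is a 1x1 convolution that restores the channel dimension (note that the first and second layers use the same number of kernels, and the third uses 4 times as many as the first). The corresponding dashed residual structure is shown on the right of the figure below: the shortcut branch again has a 1x1 convolution whose number of kernels equals that of the third convolution on the main branch. Pay attention to the stride of each convolutional layer.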
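As a quick check of the 256-channel parameter comparison, the sketch below counts only convolution weights (kernel height × width × input channels × output channels), ignoring biases and BN parameters. The bottleneck assumes the 1x1 compress / 3x3 / 1x1 expand-by-4 layout described above, so 256 input channels give 64 inner channels; the helper names are illustrative and not taken from the project.

```python
def conv_weights(k, c_in, c_out):
    """Number of weights in a k x k convolution without bias."""
    return k * k * c_in * c_out


def basic_block_params(channels):
    """Left structure (ResNet18/34): two 3x3 convs, channels -> channels."""
    return 2 * conv_weights(3, channels, channels)


def bottleneck_params(channels, expansion=4):
    """Right structure (ResNet50/101/152): 1x1 compress, 3x3, 1x1 expand."""
    mid = channels // expansion  # 256 -> 64 inner channels
    return (conv_weights(1, channels, mid)   # 1x1, compresses channels
            + conv_weights(3, mid, mid)      # 3x3 at reduced width
            + conv_weights(1, mid, channels))  # 1x1, restores channels


print(basic_block_params(256))  # 1179648
print(bottleneck_params(256))   # 69632
```

The result, 1,179,648 versus 69,632 weights, is roughly a 17x reduction, which is why the deeper variants switch to the bottleneck design.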

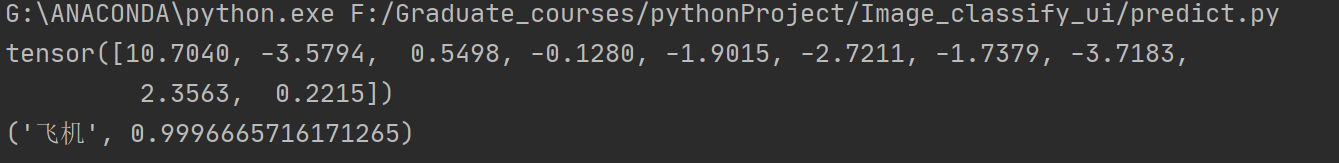

resnet.py

```python
import torch.nn as nn
import torch.nn.functional as F


class ResidualBlock(nn.Module):
    """Basic residual block (two 3x3 convs) used by ResNet18/34."""

    def __init__(self, inchannel, outchannel, stride=1):
        super(ResidualBlock, self).__init__()
        # Residual mapping F(x): 3x3 conv -> BN -> ReLU -> 3x3 conv -> BN
        self.left = nn.Sequential(
            nn.Conv2d(inchannel, outchannel, kernel_size=3, stride=stride, padding=1, bias=False),
            nn.BatchNorm2d(outchannel),
            nn.ReLU(inplace=True),
            nn.Conv2d(outchannel, outchannel, kernel_size=3, stride=1, padding=1, bias=False),
            nn.BatchNorm2d(outchannel)
        )
        # Identity mapping; becomes a 1x1 conv + BN projection when the shapes differ
        self.shortcut = nn.Sequential()
        if stride != 1 or inchannel != outchannel:
            self.shortcut = nn.Sequential(
                nn.Conv2d(inchannel, outchannel, kernel_size=1, stride=stride, bias=False),
                nn.BatchNorm2d(outchannel)
            )

    def forward(self, x):
        out = self.left(x)
        out += self.shortcut(x)  # y = F(x) + x
        out = F.relu(out)
        return out


class ResNet(nn.Module):
    """CIFAR-10 variant of ResNet18: 3x3 stem conv, no max pooling, 32x32 input."""

    def __init__(self, ResidualBlock, num_classes=10):
        super(ResNet, self).__init__()
        self.inchannel = 64
        self.conv1 = nn.Sequential(
            nn.Conv2d(3, 64, kernel_size=3, stride=1, padding=1, bias=False),
            nn.BatchNorm2d(64),
            nn.ReLU()
        )
        self.layer1 = self.make_layer(ResidualBlock, 64, 2, stride=1)
        self.layer2 = self.make_layer(ResidualBlock, 128, 2, stride=2)
        self.layer3 = self.make_layer(ResidualBlock, 256, 2, stride=2)
        self.layer4 = self.make_layer(ResidualBlock, 512, 2, stride=2)
        self.fc = nn.Linear(512, num_classes)

    def make_layer(self, block, channels, num_blocks, stride):
        # Only the first block of a stage may downsample, e.g. stride=2 -> [2, 1]
        strides = [stride] + [1] * (num_blocks - 1)
        layers = []
        for stride in strides:
            layers.append(block(self.inchannel, channels, stride))
            self.inchannel = channels
        return nn.Sequential(*layers)

    def forward(self, x):
        out = self.conv1(x)         # 64 x 32 x 32
        out = self.layer1(out)      # 64 x 32 x 32
        out = self.layer2(out)      # 128 x 16 x 16
        out = self.layer3(out)      # 256 x 8 x 8
        out = self.layer4(out)      # 512 x 4 x 4
        out = F.avg_pool2d(out, 4)  # 512 x 1 x 1
        out = out.view(out.size(0), -1)
        out = self.fc(out)
        return out


def ResNet18():
    return ResNet(ResidualBlock)
```

3. Training and Testing the Network

train.py

```python
import argparse
import os

import torch
import torch.nn as nn
import torch.optim as optim
import torchvision
import torchvision.transforms as transforms

from resnet import ResNet18

# Use the GPU if one is available
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

# Command-line arguments
parser = argparse.ArgumentParser(description='PyTorch CIFAR10 Training')
parser.add_argument('--outf', default='./model', help='folder to output images and model checkpoints')
args = parser.parse_args()

# Hyperparameters
EPOCH = 135       # number of passes over the dataset
pre_epoch = 0     # number of epochs already completed
BATCH_SIZE = 128  # batch size
LR = 0.01         # learning rate

# Prepare the datasets and preprocessing
transform_train = transforms.Compose([
    transforms.RandomCrop(32, padding=4),  # zero-pad 4 pixels on each side, then randomly crop back to 32x32
    transforms.RandomHorizontalFlip(),     # flip horizontally with probability 0.5
    transforms.ToTensor(),
    transforms.Normalize((0.4914, 0.4822, 0.4465), (0.2023, 0.1994, 0.2010)),
])
transform_test = transforms.Compose([
    transforms.ToTensor(),
    transforms.Normalize((0.4914, 0.4822, 0.4465), (0.2023, 0.1994, 0.2010)),
])

trainset = torchvision.datasets.CIFAR10(root='./data', train=True, download=True, transform=transform_train)
# Batches are drawn in shuffled order during training
trainloader = torch.utils.data.DataLoader(trainset, batch_size=BATCH_SIZE, shuffle=True, num_workers=2)
testset = torchvision.datasets.CIFAR10(root='./data', train=False, download=True, transform=transform_test)
testloader = torch.utils.data.DataLoader(testset, batch_size=100, shuffle=False, num_workers=2)

# CIFAR-10 labels
classes = ('plane', 'car', 'bird', 'cat', 'deer', 'dog', 'frog', 'horse', 'ship', 'truck')

# Model: ResNet18
net = ResNet18().to(device)

# Loss: cross-entropy, the usual choice for multi-class classification
criterion = nn.CrossEntropyLoss()
# Optimizer: mini-batch SGD with momentum, plus L2 regularization (weight decay)
optimizer = optim.SGD(net.parameters(), lr=LR, momentum=0.9, weight_decay=5e-4)

# Training
if __name__ == "__main__":
    os.makedirs(args.outf, exist_ok=True)
    best_acc = 85  # initial best test accuracy (%); best_acc.txt is written only once this is exceeded
    print("Start Training, Resnet-18!")
    with open("acc.txt", "w") as f:
        with open("log.txt", "w") as f2:
            for epoch in range(pre_epoch, EPOCH):
                print('\nEpoch:%d' % (epoch + 1))
                net.train()
                sum_loss = 0.0
                correct = 0.0
                total = 0.0
                length = len(trainloader)
                for i, data in enumerate(trainloader, 0):
                    # Prepare the data
                    inputs, labels = data
                    inputs, labels = inputs.to(device), labels.to(device)
                    optimizer.zero_grad()

                    # forward + backward
                    outputs = net(inputs)
                    loss = criterion(outputs, labels)
                    loss.backward()
                    optimizer.step()

                    # Print loss and accuracy after every batch
                    sum_loss += loss.item()
                    _, predicted = torch.max(outputs.data, 1)
                    total += labels.size(0)
                    correct += predicted.eq(labels.data).cpu().sum().item()
                    print('[epoch:%d, iter:%d] Loss:%.03f | Acc: %.3f%% '
                          % (epoch + 1, (i + 1 + epoch * length), sum_loss / (i + 1), 100. * correct / total))
                    f2.write('%03d %05d | Loss: %.03f | Acc: %.3f%%'
                             % (epoch + 1, (i + 1 + epoch * length), sum_loss / (i + 1), 100. * correct / total))
                    f2.write('\n')
                    f2.flush()

                # Test accuracy after each epoch
                print("Waiting Test!")
                net.eval()
                with torch.no_grad():
                    correct = 0
                    total = 0
                    for data in testloader:
                        images, labels = data
                        images, labels = images.to(device), labels.to(device)
                        outputs = net(images)
                        # Take the class with the highest score (index into outputs.data)
                        _, predicted = torch.max(outputs.data, 1)
                        total += labels.size(0)
                        correct += (predicted == labels).sum().item()
                acc = 100. * correct / total
                print('Test accuracy: %.3f%%' % acc)

                # Save a checkpoint and append this epoch's test result to acc.txt
                print('Saving model......')
                torch.save(net.state_dict(), '%s/net_%03d.pth' % (args.outf, epoch + 1))
                f.write("EPOCH=%03d, Accuracy= %.3f%%" % (epoch + 1, acc))
                f.write('\n')
                f.flush()
                # Record the best test accuracy in best_acc.txt
                if acc > best_acc:
                    with open("best_acc.txt", "w") as f3:
                        f3.write("EPOCH=%d, best_acc=%.3f%%" % (epoch + 1, acc))
                    best_acc = acc
            print("Training Finished, TotalEPOCH=%d" % EPOCH)
```

predict.py

```python
import torch
import torchvision.transforms as transforms
from PIL import Image

from resnet import ResNet18


def predict_(img):
    data_transform = transforms.Compose([
        # The network expects 32x32 input (the CIFAR-10 image size)
        transforms.Resize((32, 32)),
        transforms.ToTensor(),
        # transforms.Normalize(mean, std): same statistics as in training
        transforms.Normalize((0.4914, 0.4822, 0.4465), (0.2023, 0.1994, 0.2010)),
    ])
    img = data_transform(img.convert('RGB'))  # convert('RGB') drops a PNG alpha channel
    img = torch.unsqueeze(img, dim=0)          # add the batch dimension: 1 x 3 x 32 x 32

    model = ResNet18()
    model_weight_pth = './model/net_128.pth'
    # map_location lets weights trained on a GPU load on a CPU-only machine
    model.load_state_dict(torch.load(model_weight_pth, map_location='cpu'))
    model.eval()

    classes = {'0': 'airplane', '1': 'car', '2': 'bird', '3': 'cat', '4': 'deer',
               '5': 'dog', '6': 'frog', '7': 'horse', '8': 'ship', '9': 'truck'}
    with torch.no_grad():
        output = torch.squeeze(model(img))
        print(output)
        predict = torch.softmax(output, dim=0)
        predict_cla = torch.argmax(predict).item()
    return classes[str(predict_cla)], predict[predict_cla].item()


if __name__ == '__main__':
    img = Image.open('./test/0_3.jpg')
    net = predict_(img)
    print(net)
```

Result screenshot:

Open Qt Designer from PyCharm to design the UI layout; for the detailed steps, see: link

Note that the following lines were modified by hand after the conversion (they connect the two buttons to the dialog's slots):

```python
self.selectImage_Btn.clicked.connect(Dialog.openImage)
self.run_Btn.clicked.connect(Dialog.run)
```

Backend code main.py

```python
import sys

from PIL import Image
from PyQt5.QtGui import QIcon, QPixmap
from PyQt5.QtWidgets import QApplication, QDialog, QFileDialog

import Image_classify  # generated from Image_classify.ui
from predict import predict_


class MainDialog(QDialog):
    def __init__(self, parent=None):
        super(MainDialog, self).__init__(parent)
        self.ui = Image_classify.Ui_Dialog()
        self.ui.setupUi(self)
        self.setWindowTitle("CIFAR-10 10-class classification")
        self.setWindowIcon(QIcon('1.png'))
        self.fname = None  # path of the currently selected image

    def openImage(self):
        imgName, imgType = QFileDialog.getOpenFileName(self, "Select an image", "", "*.jpg;;*.png;;All Files(*)")
        if not imgName:  # the user cancelled the dialog
            return
        jpg = QPixmap(imgName).scaled(self.ui.label_image.width(), self.ui.label_image.height())
        self.ui.label_image.setPixmap(jpg)
        self.fname = imgName

    def run(self):
        if self.fname is None:  # no image selected yet
            return
        img = Image.open(self.fname)
        a, b = predict_(img)
        self.ui.display_result.setText(a)
        self.ui.disply_acc.setText(str(b))  # widget name as defined in the generated UI file


if __name__ == '__main__':
    myapp = QApplication(sys.argv)
    myDlg = MainDialog()
    myDlg.show()
    sys.exit(myapp.exec_())
```

4. Results:


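Let's first analyze the residual structure on the left (used by ResNet18/34). As shown in the figure below, its main branch consists of two 3x3 convolutional layers, and the connecting line on the right is the shortcut branch (note that for the output of the main branch to be added to the output of the shortcut branch, the two output feature maps must have the same shape). If you looked carefully at the ResNet34 architecture diagram, you may have noticed some dashed residual structures. In the original paper, the authors only briefly state that these dashed structures perform dimension reduction (downsampling), using 1x1 convolutions on the shortcut branch. The right side of the figure below shows the dashed residual structure in detail; note the stride of each convolutional layer and the number of kernels on the shortcut branch (the same as on the main branch).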
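To make the shape requirement concrete, here is a small pure-Python sketch (the helper names are hypothetical) that tracks the channel count and spatial size through both branches of a basic block. It uses the standard convolution output-size formula floor((H + 2p - k) / s) + 1, the paddings used in resnet.py, square inputs, and the same projection condition as `ResidualBlock` (`stride != 1 or inchannel != outchannel`).

```python
def conv_out(size, kernel, stride, padding):
    """Spatial output size of a convolution: floor((H + 2p - k) / s) + 1."""
    return (size + 2 * padding - kernel) // stride + 1


def needs_projection(stride, in_ch, out_ch):
    """Same condition as ResidualBlock: use a 1x1 conv shortcut (dashed line)."""
    return stride != 1 or in_ch != out_ch


def block_shapes(size, in_ch, out_ch, stride):
    """Return (main-branch shape, shortcut shape) as (channels, H) for a basic block."""
    h = conv_out(size, kernel=3, stride=stride, padding=1)  # first 3x3 conv
    h = conv_out(h, kernel=3, stride=1, padding=1)          # second 3x3 conv
    main = (out_ch, h)
    if needs_projection(stride, in_ch, out_ch):
        # 1x1 conv, no padding, same stride as the main branch
        shortcut = (out_ch, conv_out(size, kernel=1, stride=stride, padding=0))
    else:
        shortcut = (in_ch, size)  # identity: the input passes through unchanged
    return main, shortcut


# Solid line: a layer1 block, 64 -> 64 channels, stride 1, 32x32 input
print(block_shapes(32, 64, 64, 1))   # ((64, 32), (64, 32))
# Dashed line: the first block of layer2, 64 -> 128 channels, stride 2
print(block_shapes(32, 64, 128, 2))  # ((128, 16), (128, 16))
```

For any input size, a stride-2 3x3 convolution with padding 1 and a stride-2 1x1 convolution without padding both give floor((H - 1) / 2) + 1, which is why the dashed shortcut output can be added element-wise to the main branch.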


The code implementation contains the following lines, which I will explain here first:

Architectures of the various ResNet networks:
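Since the architecture table itself is not reproduced in this text, the sketch below records the per-stage block counts from Table 1 of the ResNet paper (the ImageNet versions) and checks that they add up to each network's name: a basic block has 2 weighted layers, a bottleneck has 3, plus the first convolution and the final fully connected layer (1x1 shortcut projections are not counted). The dictionary names are illustrative only. The resnet.py above is a CIFAR-10 variant of ResNet18 (3x3 stem, no max pooling), but it keeps the same [2, 2, 2, 2] stage layout.

```python
# Blocks per stage (conv2_x .. conv5_x) and block type for each ImageNet ResNet
RESNET_CONFIGS = {
    "resnet18": ("basic", [2, 2, 2, 2]),
    "resnet34": ("basic", [3, 4, 6, 3]),
    "resnet50": ("bottleneck", [3, 4, 6, 3]),
    "resnet101": ("bottleneck", [3, 4, 23, 3]),
    "resnet152": ("bottleneck", [3, 8, 36, 3]),
}

LAYERS_PER_BLOCK = {"basic": 2, "bottleneck": 3}


def depth(name):
    """Weighted layers: stem conv + convs inside all blocks + final fc."""
    block, counts = RESNET_CONFIGS[name]
    return 1 + LAYERS_PER_BLOCK[block] * sum(counts) + 1


for name in RESNET_CONFIGS:
    print(name, depth(name))
```

In resnet.py, the `2` passed to each of the four `make_layer` calls corresponds to the `[2, 2, 2, 2]` entry for resnet18.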


Converting Image_classify.ui into Python yields Image_classify.py.


Project: https://github.com/all1new/CLFAR_10_Image_cls