【图像分割】医学图像分割多目标分割(多分类)实践 |

您所在的位置:网站首页 › 医学图像目标检测和分类识别论文怎么写 › 【图像分割】医学图像分割多目标分割(多分类)实践 |

【图像分割】医学图像分割多目标分割(多分类)实践

|

文章目录

本文已更新到[【附源码】医学图像分割入门实践](https://blog.csdn.net/baidu_36511315/article/details/120902937)1. 数据集2. 数据预处理3. 代码部分3.1 训练集和验证集划分3.2 数据加载和处理3.2.1 数据变换

3.3 One-hot 工具函数3.4 网络模型3.5 模型权重初始化3.6 损失函数3.7 模型评价指标3.8 训练3.9 模型验证3.10 实验结果

本文已更新到【附源码】医学图像分割入门实践

1. 数据集

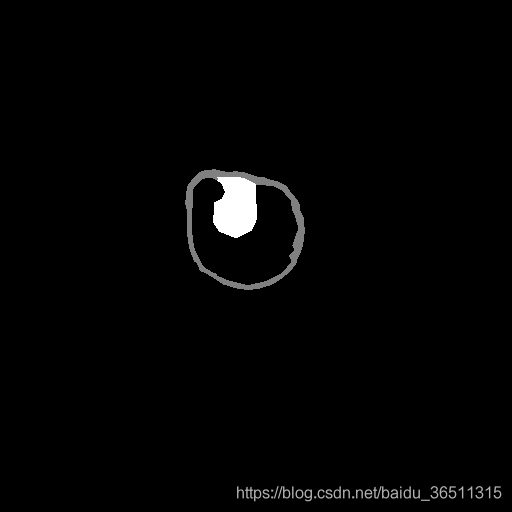

来自ISICDM 2019 临床数据分析挑战赛的基于磁共振成像的膀胱内外壁分割与肿瘤检测数据集。

灰度值:灰色128为膀胱内外壁,白色255为肿瘤。 任务是要同时分割出膀胱内外壁和肿瘤部分,加上背景,最后构成一个三分类问题。 2. 数据预处理数据预处理最重要的一步就是要对gt进行one-hot编码,如果对one-hot编码不太清楚可以看下这篇文章(数据预处理 One-hot 编码的两种实现方式)。 由于笔记本性能较差,为了代码能够在笔记本上跑起来。在对数据预处理的时候进行了缩放(scale)和中心裁剪(center crop)。原始数据大小为512,首先将数据缩放到256,再裁剪到128的大小。 3. 代码部分 3.1 训练集和验证集划分按照训练集80%,验证集20%的策略进行重新分配数据集。直接运行当前文件进行数据重新划分, 仅供参考,当然这一部分代码可根据自己的需求随意设计。 # repartition_dataset.py import os import math import random def partition_data(dataset_dir, ouput_root): """ Divide the raw data into training sets and validation sets :param dataset_dir: path root of dataset :param ouput_root: the root path to the output file :return: """ image_names = [] mask_names = [] val_size = 0.2 train_names = [] val_names = [] for file in os.listdir(os.path.join(dataset_dir, "Images")): image_names.append(file) image_names.sort() for file in os.listdir(os.path.join(dataset_dir, "Labels")): mask_names.append(file) mask_names.sort() rawdata_size = len(image_names) random.seed(361) val_indices = random.sample(range(0, rawdata_size), math.floor(rawdata_size * val_size)) train_indices = [] for i in range(0, rawdata_size): if i not in val_indices: train_indices.append(i) with open(os.path.join(ouput_root, 'val.txt'), 'w') as f: for i in val_indices: val_names.append(image_names[i]) f.write(image_names[i]) f.write('\n') with open(os.path.join(ouput_root, 'train.txt'), 'w') as f: for i in train_indices: train_names.append(image_names[i]) f.write(image_names[i]) f.write('\n') train_names.sort(), val_names.sort() return train_names, val_names if __name__ == '__main__': dataset_dir = '../media/LIBRARY/Datasets/Bladder/' output_root = '../media/LIBRARY/Datasets/Bladder/' train_names, val_names = partition_data(dataset_dir, output_root) print(len(train_names)) print(train_names) print(len(val_names)) print(val_names) 3.2 数据加载和处理数据加载写一个专门的数据类来做就可以了,最核心的其实就是实现里面的__getitem__()方法。make_dataset方法用来加载数据的文件名,真正加载数据是在__getitem__()里面,在DataLoder的时候自动调用。 # baldder.py import os import cv2 import torch import numpy as np from PIL import Image from torch.utils import data from torchvision import transforms from utils import helpers ''' 128= bladder 255 = tumor 0 = background ''' palette = [[0], [128], [255]] num_classes = 3 def make_dataset(root, mode): assert mode in ['train', 'val', 'test'] items = [] if mode == 'train': img_path = os.path.join(root, 'Images') mask_path = os.path.join(root, 'Labels') if 'Augdata' in root: data_list = os.listdir(os.path.join(root, 'Images')) else: data_list = [l.strip('\n') for l in open(os.path.join(root, 'train.txt')).readlines()] for it in data_list: item = (os.path.join(img_path, it), os.path.join(mask_path, it)) items.append(item) elif mode == 'val': img_path = os.path.join(root, 'Images') mask_path = os.path.join(root, 'Labels') data_list = [l.strip('\n') for l in open(os.path.join( root, 'val.txt')).readlines()] for it in data_list: item = (os.path.join(img_path, it), os.path.join(mask_path, it)) items.append(item) else: pass return items class Bladder(data.Dataset): def __init__(self, root, mode, joint_transform=None, center_crop=None, transform=None, target_transform=None): self.imgs = make_dataset(root, mode) self.palette = palette self.mode = mode if len(self.imgs) == 0: raise RuntimeError('Found 0 images, please check the data set') self.mode = mode self.joint_transform = joint_transform self.center_crop = center_crop self.transform = transform self.target_transform = target_transform def __getitem__(self, index): img_path, mask_path = self.imgs[index] img = Image.open(img_path) mask = Image.open(mask_path) if self.joint_transform is not None: img, mask = self.joint_transform(img, mask) if self.center_crop is not None: img, mask = self.center_crop(img, mask) img = np.array(img) mask = np.array(mask) # Image.open读取灰度图像时shape=(H, W) 而非(H, W, 1) # 因此先扩展出通道维度,以便在通道维度上进行one-hot映射 img = np.expand_dims(img, axis=2) mask = np.expand_dims(mask, axis=2) mask = helpers.mask_to_onehot(mask, self.palette) # shape from (H, W, C) to (C, H, W) img = img.transpose([2, 0, 1]) mask = mask.transpose([2, 0, 1]) if self.transform is not None: img = self.transform(img) if self.target_transform is not None: mask = self.target_transform(mask) return img, mask def __len__(self): return len(self.imgs) 3.2.1 数据变换 # joint_transforms import cv2 import math import sys import numbers import random from PIL import Image, ImageOps import numpy as np from skimage import measure import matplotlib.pyplot as plt from matplotlib.patches import Rectangle from utils import helpers class Compose(object): def __init__(self, transforms): self.transforms = transforms def __call__(self, img, mask): assert img.size == mask.size for t in self.transforms: img, mask = t(img, mask) return img, mask class RandomCrop(object): def __init__(self, size, padding=0): if isinstance(size, numbers.Number): self.size = (int(size), int(size)) else: self.size = size self.padding = padding def __call__(self, img, mask): if self.padding > 0: img = ImageOps.expand(img, border=self.padding, fill=0) mask = ImageOps.expand(mask, border=self.padding, fill=0) assert img.size == mask.size w, h = img.size th, tw = self.size if w == tw and h == th: return img, mask if w |

【本文地址】

今日新闻 |

推荐新闻 |