[CUDA Online Training Camp] Shared Memory Example 1: Matrix Transpose Implementation and Optimization, Part 1


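- 1. Complete code
- 2. How it works
- 2.1 Loading each block's elements into the shared-memory tile
- 2.2 Performing the transpose
- 2.3 Modifications built on this version
- References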

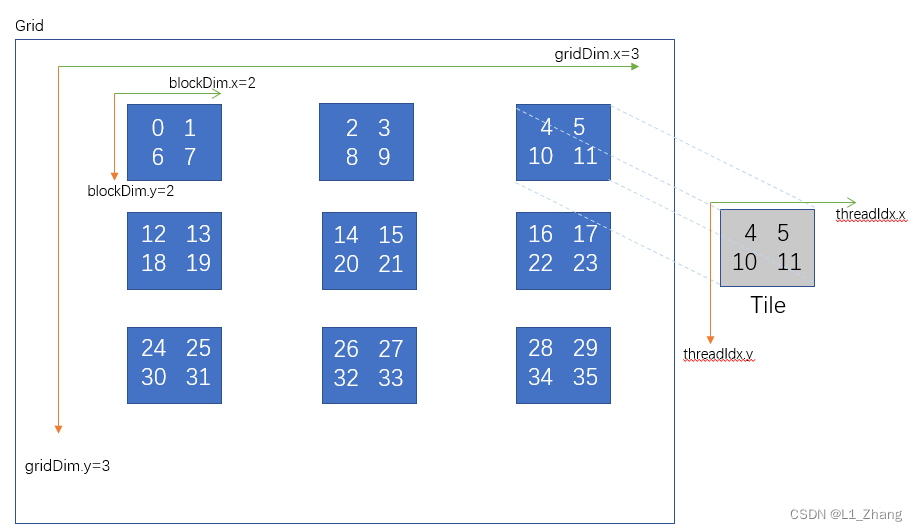

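This post follows the NVIDIA developer blog post "An Efficient Matrix Transpose in CUDA C/C++" and its accompanying GitHub code, transpose.cu, to learn how shared memory is used and to get a feel for the speedup it provides. The shared-memory tile used here is 2*2, and each block is also defined with 2*2 threads. This is the main difference from "Shared Memory Example 1: Matrix Transpose Implementation and Optimization, Part 2". Note that the listing below sets `TILE_DIM = 32`, so the 2*2 size presumably refers to the small 6*6 example in section 2; in either case the tile and the thread block have the same shape.

1. Complete code

```cuda
#include "error.cuh"   // provides the CHECK macro (not shown here)
#include <stdio.h>
#include <stdlib.h>    // atoi, malloc, free, exit
#include <math.h>      // sqrt

#ifdef USE_DP
    typedef double real;
#else
    typedef float real;
#endif

const int NUM_REPEATS = 10;
const int TILE_DIM = 32;

void timing(const real *d_A, real *d_B, const int N, const int task);
__global__ void transpose1(const real *A, real *B, const int N);
__global__ void transpose2(const real *A, real *B, const int N);
void print_matrix(const int N, const real *A);

int main(int argc, char **argv)
{
    if (argc != 2)
    {
        printf("usage: %s N\n", argv[0]);
        exit(1);
    }
    const int N = atoi(argv[1]);

    const int N2 = N * N;
    const int M = sizeof(real) * N2;
    real *h_A = (real *) malloc(M);
    real *h_B = (real *) malloc(M);

    // The source text is truncated from this loop's condition up to the
    // matrix printout. The lines in between are inferred from the
    // declarations and the cleanup code; the fill value and the print
    // threshold are assumptions.
    for (int n = 0; n < N2; ++n)
    {
        h_A[n] = n;
    }
    real *d_A, *d_B;
    CHECK(cudaMalloc(&d_A, M));
    CHECK(cudaMalloc(&d_B, M));
    CHECK(cudaMemcpy(d_A, h_A, M, cudaMemcpyHostToDevice));

    timing(d_A, d_B, N, 1);  // transpose1: with bank conflicts
    timing(d_A, d_B, N, 2);  // transpose2: padded tile

    CHECK(cudaMemcpy(h_B, d_B, M, cudaMemcpyDeviceToHost));
    if (N <= 10)
    {
        printf("A =\n");
        print_matrix(N, h_A);
        printf("\nB =\n");
        print_matrix(N, h_B);
    }

    free(h_A);
    free(h_B);
    CHECK(cudaFree(d_A));
    CHECK(cudaFree(d_B));
    return 0;
}

void timing(const real *d_A, real *d_B, const int N, const int task)
{
    const int grid_size_x = (N + TILE_DIM - 1) / TILE_DIM;
    const int grid_size_y = grid_size_x;
    const dim3 block_size(TILE_DIM, TILE_DIM);
    const dim3 grid_size(grid_size_x, grid_size_y);

    float t_sum = 0;
    float t2_sum = 0;
    // NUM_REPEATS + 1 iterations: the first run (repeat == 0) is a warm-up
    // and is excluded from the statistics below.
    for (int repeat = 0; repeat <= NUM_REPEATS; ++repeat)
    {
        cudaEvent_t start, stop;
        CHECK(cudaEventCreate(&start));
        CHECK(cudaEventCreate(&stop));
        CHECK(cudaEventRecord(start));

        switch (task)
        {
            case 1:
                transpose1<<<grid_size, block_size>>>(d_A, d_B, N);
                break;
            case 2:
                transpose2<<<grid_size, block_size>>>(d_A, d_B, N);
                break;
            default:
                printf("Error: wrong task\n");
                exit(1);
                break;
        }

        CHECK(cudaEventRecord(stop));
        CHECK(cudaEventSynchronize(stop));
        float elapsed_time;
        CHECK(cudaEventElapsedTime(&elapsed_time, start, stop));
        printf("Time = %g ms.\n", elapsed_time);

        if (repeat > 0)
        {
            t_sum += elapsed_time;
            t2_sum += elapsed_time * elapsed_time;
        }

        CHECK(cudaEventDestroy(start));
        CHECK(cudaEventDestroy(stop));
    }

    const float t_ave = t_sum / NUM_REPEATS;
    const float t_err = sqrt(t2_sum / NUM_REPEATS - t_ave * t_ave);
    printf("Time = %g +- %g ms.\n", t_ave, t_err);
}

__global__ void transpose1(const real *A, real *B, const int N)
{
    __shared__ real S[TILE_DIM][TILE_DIM];
    int bx = blockIdx.x * TILE_DIM;
    int by = blockIdx.y * TILE_DIM;

    // Load: thread (x, y) reads row ny1, column nx1 of A into the tile.
    int nx1 = bx + threadIdx.x;
    int ny1 = by + threadIdx.y;
    if (nx1 < N && ny1 < N)
    {
        S[threadIdx.y][threadIdx.x] = A[ny1 * N + nx1];
    }
    __syncthreads();

    // Store: the thread indices swap roles, so the tile is read transposed.
    int nx2 = bx + threadIdx.y;
    int ny2 = by + threadIdx.x;
    if (nx2 < N && ny2 < N)
    {
        B[nx2 * N + ny2] = S[threadIdx.x][threadIdx.y];
    }
}

__global__ void transpose2(const real *A, real *B, const int N)
{
    // One extra column of padding per row avoids bank conflicts.
    __shared__ real S[TILE_DIM][TILE_DIM + 1];
    int bx = blockIdx.x * TILE_DIM;
    int by = blockIdx.y * TILE_DIM;

    int nx1 = bx + threadIdx.x;
    int ny1 = by + threadIdx.y;
    if (nx1 < N && ny1 < N)
    {
        S[threadIdx.y][threadIdx.x] = A[ny1 * N + nx1];
    }
    __syncthreads();

    int nx2 = bx + threadIdx.y;
    int ny2 = by + threadIdx.x;
    if (nx2 < N && ny2 < N)
    {
        B[nx2 * N + ny2] = S[threadIdx.x][threadIdx.y];
    }
}

void print_matrix(const int N, const real *A)
{
    for (int ny = 0; ny < N; ny++)
    {
        for (int nx = 0; nx < N; nx++)
        {
            printf("%g\t", A[ny * N + nx]);
        }
        printf("\n");
    }
}
```

2. How it works

The kernels `transpose1` and `transpose2` differ mainly in that `transpose2` eliminates shared-memory bank conflicts by declaring the tile as `S[TILE_DIM][TILE_DIM + 1]` (see "Shared Memory Example 1: Matrix Transpose Implementation and Optimization, Part 2" for details). The analysis here covers `transpose1`.

```cuda
__global__ void transpose1(const real *A, real *B, const int N)
{
    __shared__ real S[TILE_DIM][TILE_DIM];
    int bx = blockIdx.x * TILE_DIM;
    int by = blockIdx.y * TILE_DIM;

    int nx1 = bx + threadIdx.x;
    int ny1 = by + threadIdx.y;
    if (nx1 < N && ny1 < N)
    {
        S[threadIdx.y][threadIdx.x] = A[ny1 * N + nx1];
    }
    __syncthreads();

    int nx2 = bx + threadIdx.y;
    int ny2 = by + threadIdx.x;
    if (nx2 < N && ny2 < N)
    {
        B[nx2 * N + ny2] = S[threadIdx.x][threadIdx.y];
    }
}
```

Take the transpose of a 6*6 matrix as an example:

2.1 Loading each block's elements into the shared-memory tile

The first half of the kernel loads the element that belongs to each thread of a block into that block's shared-memory tile. Here `nx1` is the global column index and `ny1` the global row index, so threads with consecutive `threadIdx.x` read consecutive addresses of `A`.

```cuda
S[threadIdx.y][threadIdx.x] = A[ny1 * N + nx1];
```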
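To make the index mapping concrete, here is a minimal CPU-side sketch in plain C++. It is not the author's code: it emulates the threads of one block with nested loops and assumes a hypothetical tile size `TILE = 2` to match the 6*6 example (the CUDA listing uses `TILE_DIM = 32`). The names `transpose_block` and `transpose_emulated` are illustrative only.

```cpp
#include <cassert>
#include <vector>

// Hypothetical tile size chosen for the 6*6 walkthrough.
constexpr int TILE = 2;

// Emulates one thread block of transpose1 on the CPU.
// block_x / block_y stand in for blockIdx.x / blockIdx.y,
// tx / ty stand in for threadIdx.x / threadIdx.y.
void transpose_block(const std::vector<float>& A, std::vector<float>& B,
                     int N, int block_x, int block_y)
{
    float S[TILE][TILE] = {};  // stands in for the __shared__ tile
    const int bx = block_x * TILE;
    const int by = block_y * TILE;

    // Phase 1: each "thread" copies one element of A into the tile.
    for (int ty = 0; ty < TILE; ++ty) {
        for (int tx = 0; tx < TILE; ++tx) {
            const int nx1 = bx + tx;  // global column in A
            const int ny1 = by + ty;  // global row in A
            if (nx1 < N && ny1 < N) {
                S[ty][tx] = A[ny1 * N + nx1];
            }
        }
    }

    // Completing phase 1 before phase 2 plays the role of __syncthreads().

    // Phase 2: the thread indices swap roles, so the tile is read transposed.
    for (int ty = 0; ty < TILE; ++ty) {
        for (int tx = 0; tx < TILE; ++tx) {
            const int nx2 = bx + ty;  // global row in B
            const int ny2 = by + tx;  // global column in B
            if (nx2 < N && ny2 < N) {
                B[nx2 * N + ny2] = S[tx][ty];
            }
        }
    }
}

// Runs every block of the grid in turn, like the kernel launch in timing().
std::vector<float> transpose_emulated(const std::vector<float>& A, int N)
{
    std::vector<float> B(A.size(), 0.0f);
    const int grid = (N + TILE - 1) / TILE;  // same rounding as grid_size_x
    for (int block_y = 0; block_y < grid; ++block_y) {
        for (int block_x = 0; block_x < grid; ++block_x) {
            transpose_block(A, B, N, block_x, block_y);
        }
    }
    return B;
}
```

For a 6*6 matrix, `transpose_emulated(A, 6)` walks a 3*3 grid of blocks, and each block moves a 2*2 patch of `A` through `S` into the mirrored position in `B`. The bounds checks also cover sizes that are not a multiple of the tile, such as `N = 5`.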
