Statistics


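The coefficient of determination (决定系数; some textbooks render it as 判定系数) is also called the goodness of fit. It measures what percentage of the variation in y can be described by the variation in x, that is, how much of the variability of the dependent variable Y can be explained by the controlled independent variable X.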

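Formula: $R^2 = \mathrm{SSR}/\mathrm{SST} = 1 - \mathrm{SSE}/\mathrm{SST}$, where $\mathrm{SST} = \mathrm{SSR} + \mathrm{SSE}$. Here SST (total sum of squares) is the total variation, SSR (regression sum of squares) is the variation explained by the regression, and SSE (error sum of squares) is the residual variation. Note: the names differ between textbooks.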
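Written out term by term, the three sums can be sketched as follows. The notation is an assumption added here for illustration: $y_i$ are the observed values, $\hat{y}_i$ the fitted values on the regression line, $\bar{y}$ the mean of the observations, and $n$ the number of points. These are the same quantities the R example in this article computes as `sst`, `ssr` and `sse`.

$$
\begin{aligned}
\mathrm{SST} &= \sum_{i=1}^{n} (y_i - \bar{y})^2, \\
\mathrm{SSR} &= \sum_{i=1}^{n} (\hat{y}_i - \bar{y})^2, \\
\mathrm{SSE} &= \sum_{i=1}^{n} (y_i - \hat{y}_i)^2, \\
R^2 &= \frac{\mathrm{SSR}}{\mathrm{SST}} = 1 - \frac{\mathrm{SSE}}{\mathrm{SST}}.
\end{aligned}
$$

The decomposition $\mathrm{SST} = \mathrm{SSR} + \mathrm{SSE}$ holds for a least-squares fit that includes an intercept, because in that case the cross term $\sum_{i} (\hat{y}_i - \bar{y})(y_i - \hat{y}_i)$ is zero.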

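Regression sum of squares: SSR (sum of squares for regression) = ESS (explained sum of squares). Residual sum of squares: SSE (sum of squares for error) = RSS (residual sum of squares). Total sum of squares: SST (sum of squares for total) = TSS (total sum of squares). In either notation the identity is the same: SSE + SSR = SST, or equivalently RSS + ESS = TSS.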

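Interpretation: the larger the goodness of fit, the better the independent variable explains the dependent variable, meaning that a larger share of the total variation is attributable to the independent variable, and the more tightly the observed points cluster around the regression line. Range: 0 to 1 (for a least-squares fit with an intercept).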
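To make the "clustered around the line" reading concrete, here is a minimal sketch in R. The helper name `r_squared` and both data sets are made up for illustration and do not come from the article. The two data sets share the same underlying line but have small and large fixed deviations from it.

```r
# Hypothetical helper: R^2 of a simple linear fit of y on x,
# computed as 1 - SSE/SST
r_squared <- function(x, y) {
  fit <- lm(y ~ x)                  # least-squares fit with intercept
  sse <- sum(residuals(fit)^2)      # residual sum of squares
  sst <- sum((y - mean(y))^2)       # total sum of squares
  1 - sse / sst
}

x <- 1:10
# Fixed, made-up deviations (no random numbers, so results are reproducible)
noise <- c(1, -1, 1, -1, 1, -1, 1, -1, 1, -1)

y_tight <- 2 * x + 0.5 * noise      # points close to the line
y_loose <- 2 * x + 5 * noise        # points far from the line

r2_tight <- r_squared(x, y_tight)
r2_loose <- r_squared(x, y_loose)
print(c(tight = r2_tight, loose = r2_loose))
```

With these made-up numbers the tightly clustered data set gives the higher value, and both values stay between 0 and 1.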

举例:

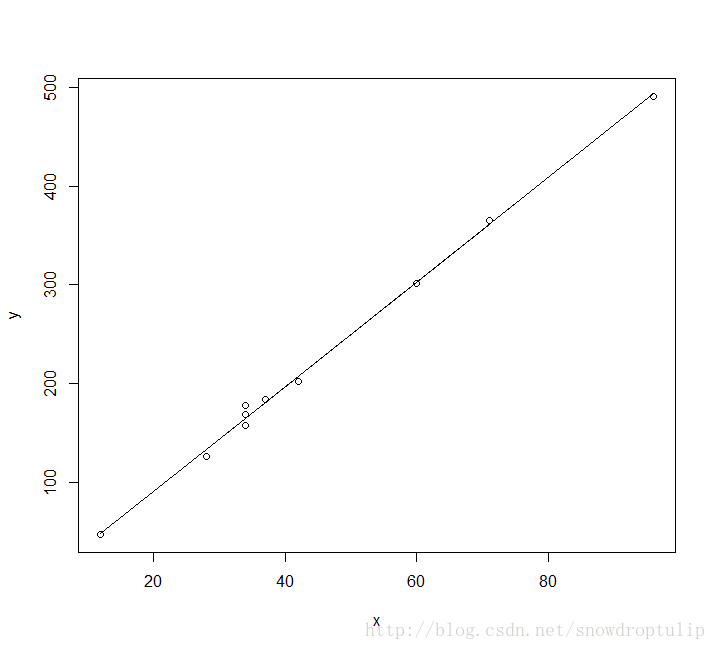

假设有10个点,如下图:

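We can use R to compute the regression line and $R^2$:

```r
# Simple linear regression by least squares, plus the three sums of squares
mylr <- function(x, y) {
  plot(x, y)  # scatter plot of the observations

  x_mean  <- mean(x)
  y_mean  <- mean(y)
  xy_mean <- mean(x * y)
  xx_mean <- mean(x * x)

  # Least-squares slope and intercept
  m <- (x_mean * y_mean - xy_mean) / (x_mean^2 - xx_mean)
  b <- y_mean - m * x_mean

  f <- m * x + b  # fitted values on the regression line
  lines(x, f)     # draw the regression line

  sst <- sum((y - y_mean)^2)  # total sum of squares
  sse <- sum((y - f)^2)       # residual (error) sum of squares
  ssr <- sum((f - y_mean)^2)  # regression sum of squares

  c(m = m, b = b, sst = sst, sse = sse, ssr = ssr)
}

x <- c(60, 34, 12, 34, 71, 28, 96, 34, 42, 37)
y <- c(301, 169, 47, 178, 365, 126, 491, 157, 202, 184)

f <- mylr(x, y)
f['m']               # slope
f['b']               # intercept
f['sse'] + f['ssr']  # should equal f['sst']
f['sst']
R2 <- f['ssr'] / f['sst']
```

The fitted line is f(x) = 5.3x - 15.5, and $R^2$ is about 0.998 (99.8%), which means x explains almost all of the variation in y.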
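As a cross-check on the hand-written function, the same fit can be sketched with R's built-in `lm()`. This is not part of the original walkthrough. The variable names `fit`, `r2_lm` and `y_hat` are chosen here for illustration. The data are the same 10 points as in the example.

```r
x <- c(60, 34, 12, 34, 71, 28, 96, 34, 42, 37)
y <- c(301, 169, 47, 178, 365, 126, 491, 157, 202, 184)

fit <- lm(y ~ x)                 # least-squares line with intercept
print(coef(fit))                 # intercept and slope

r2_lm <- summary(fit)$r.squared  # R^2 as reported by summary.lm
print(r2_lm)

# Recompute the sums of squares from the fitted values
y_hat <- fitted(fit)
sst <- sum((y - mean(y))^2)      # total sum of squares
sse <- sum((y - y_hat)^2)        # residual sum of squares
ssr <- sum((y_hat - mean(y))^2)  # regression sum of squares

print(all.equal(sse + ssr, sst))      # decomposition SST = SSR + SSE
print(all.equal(ssr / sst, r2_lm))    # R^2 = SSR / SST
print(all.equal(1 - sse / sst, r2_lm)) # R^2 = 1 - SSE / SST
```

Both definitions of $R^2$ agree with the value from `summary()`, and the slope and intercept should match the ones returned by `mylr`.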
