Kubernetes: Mounting NFS into a Pod


Preface

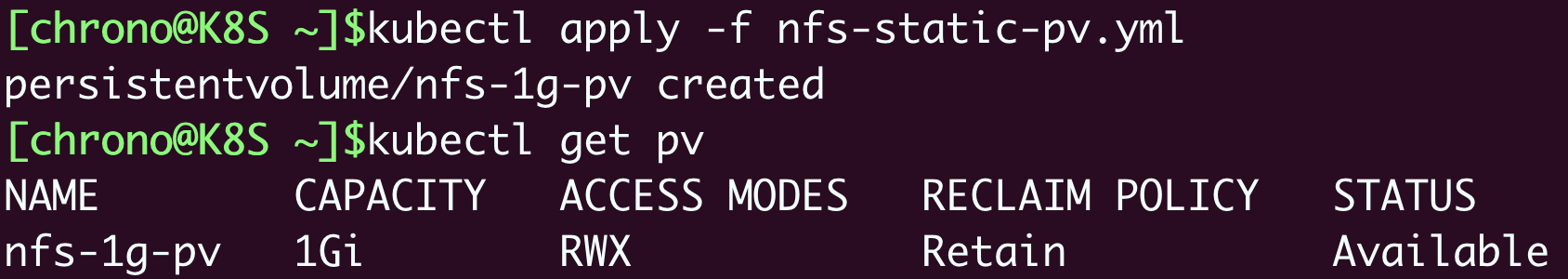

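In the earlier article on mounting a local disk into a Pod, we saw how to write a Pod's data to a local disk. That approach pins the Pod to a specific Node: as soon as the Pod moves to another Node, the data is lost again. This article looks at how to solve that problem by writing the data to NFS. Let's get started!

1: NFS

1.1: What is NFS

NFS stands for Network File System. It lets us use a disk on a remote machine as if it were a local disk. It uses a client-server architecture, so in essence it can be viewed as a data storage solution.

1.2: Installing the server

1.2.1: Install the server package

    sudo apt -y install nfs-kernel-server

1.2.2: Create the directory the server exports

    mkdir -p /tmp/nfs

Note that this directory is only for testing. In production, use a dedicated directory, for example a dedicated data directory with its own disk mounted on it.

1.2.3: Configure access rules

Edit /etc/exports and add the following line:

    /tmp/nfs 192.168.64.132(rw,sync,no_subtree_check,no_root_squash,insecure)

Change the IP address to your own. You can also add multiple entries or specify a subnet, depending on your situation. After editing, notify the NFS server of the change and list the active exports:

    sudo exportfs -ra
    sudo exportfs -v

1.2.4: Start the service

    sudo systemctl start nfs-server
    sudo systemctl enable nfs-server
    sudo systemctl status nfs-server

If `sudo systemctl status nfs-server` prints output like the following, the server started successfully:

    dongyunqi@mongodaddy:/tmp/nfs/1g-pv$ sudo systemctl status nfs-server
    ......
    1月 19 13:01:59 mongodaddy systemd[1]: Starting NFS server and services...
    1月 19 13:02:00 mongodaddy systemd[1]: Finished NFS server and services.

We can also list the exports configured on the NFS server:

    dongyunqi@mongodaddy:/tmp/nfs/1g-pv$ showmount -e 127.0.0.1
    Export list for 127.0.0.1:
    /tmp/nfs 192.168.64.132

The line `/tmp/nfs 192.168.64.132` means that the machine with IP address 192.168.64.132 is allowed to mount the server's /tmp/nfs directory.

1.3: Installing the client

Run the following commands:

    sudo apt -y install nfs-common

    dongyunqi@mongomongo:~/nfslocal$ showmount -e 192.168.64.131 # note: this IP is the NFS server's address
    Export list for 192.168.64.131:
    /tmp/nfs 192.168.64.132

Then mount the directory:

    sudo mount -t nfs 192.168.64.131:/tmp/nfs /home/dongyunqi/nfslocal

From now on, files created in the local directory /home/dongyunqi/nfslocal are stored on the NFS server, that is, in /tmp/nfs on 192.168.64.131. A quick test:

On the NFS client, in /home/dongyunqi/nfslocal:

    dongyunqi@mongomongo:~/nfslocal$ pwd
    /home/dongyunqi/nfslocal
    dongyunqi@mongomongo:~/nfslocal$ sudo tee /home/dongyunqi/nfslocal/ntf-pod.yml <<EOF
    > apiVersion: v1
    > EOF
    [sudo] password for dongyunqi:
    apiVersion: v1

On the NFS server:

    dongyunqi@mongodaddy:/tmp/nfs$ pwd
    /tmp/nfs
    dongyunqi@mongodaddy:/tmp/nfs$ cat ntf-pod.yml
    apiVersion: v1

At this point NFS is installed and working. Next, let's see how to use it in a Pod.

2: Using NFS in a Pod

2.1: Define the PV

First, define the PV that represents the NFS export. The YAML is as follows:

    dongyunqi@mongodaddy:~/k8s$ cat ntf-1g.yml
    apiVersion: v1
    kind: PersistentVolume
    metadata:
      name: nfs-1g-pv
    spec:
      storageClassName: nfs
      accessModes:
        - ReadWriteMany
      capacity:
        storage: 1Gi
      nfs:
        path: /tmp/nfs/1g-pv
        server: 192.168.64.131

`accessModes` is set to `ReadWriteMany` because NFS stores data over the network, so the volume can be mounted read-write by multiple Pods at the same time. `path: /tmp/nfs/1g-pv` is the directory on the NFS server that data is written to. Kubernetes does not create this directory on the server for you, so it must exist before the PV is used. `server` is the IP address of the NFS server. Apply the file:

    kubectl apply -f ntf-1g.yml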

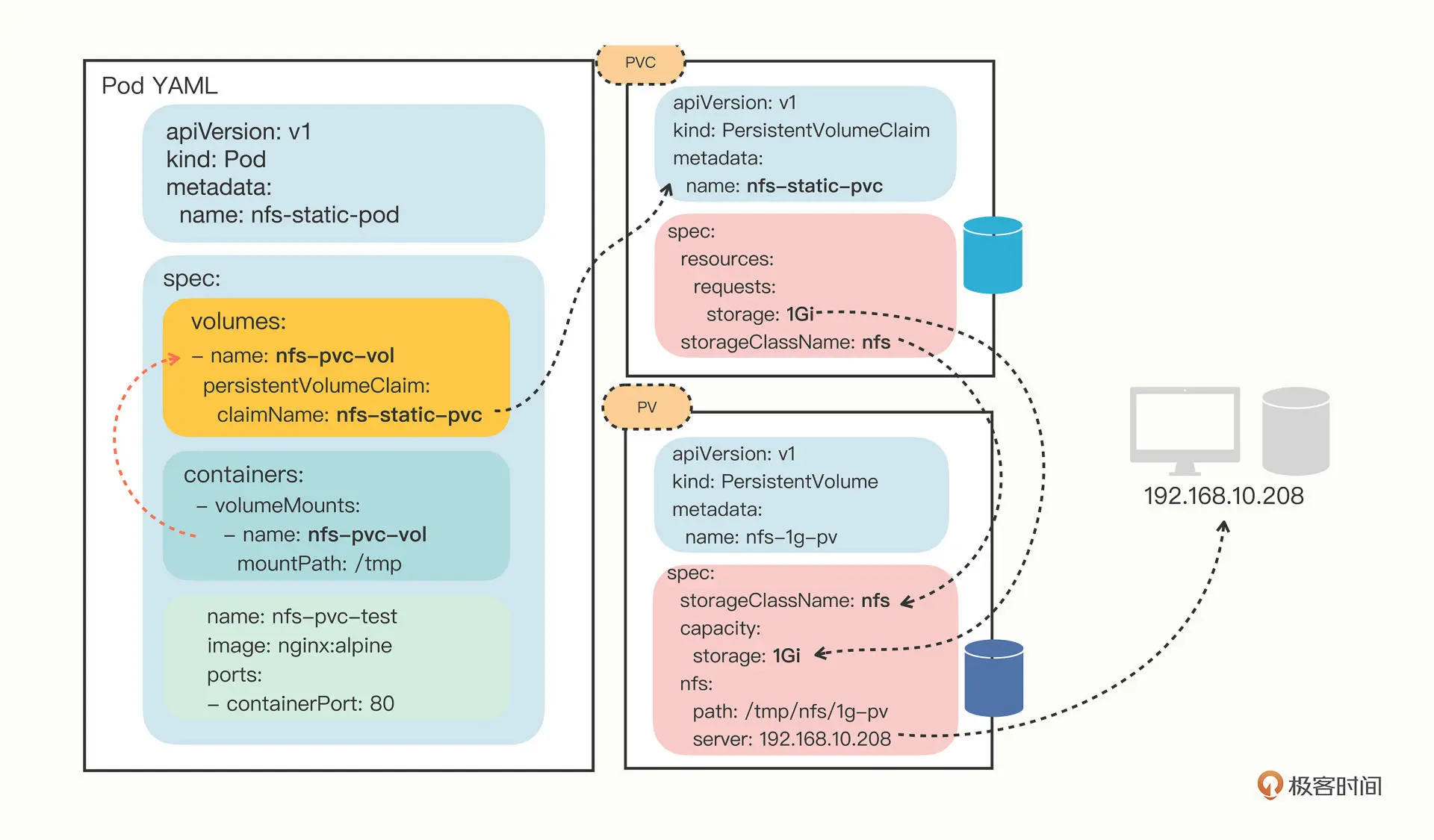
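Because each static PV needs its own pre-created directory on the NFS server, defining several of them by hand gets repetitive. The following is a minimal sketch, not part of the original article: `render_nfs_pv` is a hypothetical helper name, and it assumes the same storage class (`nfs`) and access mode (`ReadWriteMany`) used above.

```shell
# Hypothetical helper: print a static NFS PersistentVolume manifest.
# Arguments: <pv-name> <nfs-server-ip> <export-subdir> <capacity>
render_nfs_pv() {
  pv_name="$1"; nfs_server="$2"; nfs_path="$3"; capacity="$4"
  cat <<EOF
apiVersion: v1
kind: PersistentVolume
metadata:
  name: ${pv_name}
spec:
  storageClassName: nfs
  accessModes:
    - ReadWriteMany
  capacity:
    storage: ${capacity}
  nfs:
    path: ${nfs_path}
    server: ${nfs_server}
EOF
}

# Example usage (mkdir runs on the NFS server, kubectl wherever it is configured):
#   mkdir -p /tmp/nfs/1g-pv
#   render_nfs_pv nfs-1g-pv 192.168.64.131 /tmp/nfs/1g-pv 1Gi | kubectl apply -f -
```

Printing the manifest instead of applying it directly keeps the helper free of side effects, so the output can be reviewed before it is piped to `kubectl apply -f -`.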

Define a PVC to use the PV. The YAML is as follows:

    dongyunqi@mongodaddy:~/k8s$ cat ntf-pvc.yml
    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: nfs-static-pvc
    spec:
      storageClassName: nfs
      accessModes:
        - ReadWriteMany
      resources:
        requests:
          storage: 1Gi

This requests 1Gi from PVs whose storage class is `nfs`, which matches the PV `nfs-1g-pv` defined above. After the PVC is applied, it is bound to that PV:

    dongyunqi@mongodaddy:~/k8s$ kubectl get pv
    NAME        CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS   CLAIM                    STORAGECLASS   REASON   AGE
    nfs-1g-pv   1Gi        RWX            Retain           Bound    default/nfs-static-pvc   nfs                     40h
    dongyunqi@mongodaddy:~/k8s$ kubectl get pvc
    NAME             STATUS   VOLUME      CAPACITY   ACCESS MODES   STORAGECLASS   AGE
    nfs-static-pvc   Bound    nfs-1g-pv   1Gi        RWX            nfs            40h

2.2: Using it in a Pod

The YAML is as follows:

    dongyunqi@mongodaddy:~/k8s$ cat ntf-pod.yml
    apiVersion: v1
    kind: Pod
    metadata:
      name: nfs-static-pod
    spec:
      volumes:
        - name: nfs-pvc-vol
          persistentVolumeClaim:
            claimName: nfs-static-pvc
      containers:
        - name: nfs-pvc-test
          image: nginx:alpine
          ports:
            - containerPort: 80
          volumeMounts:
            - name: nfs-pvc-vol
              mountPath: /tmp

The PVC is first declared as a volume and then mounted at /tmp inside the container. Files created under /tmp in the Pod are therefore stored in /tmp/nfs/1g-pv on the NFS server. After applying the file, enter the container and test:

    dongyunqi@mongodaddy:~/k8s$ kubectl get pod
    NAME             READY   STATUS    RESTARTS   AGE
    nfs-static-pod   1/1     Running   0          40h
    dongyunqi@mongodaddy:~/k8s$ kubectl exec -it nfs-static-pod -- sh
    /tmp # cd /tmp/
    /tmp # touch hello.txt
    /tmp # echo "hello from pod" > hello.txt
    /tmp # cat hello.txt
    hello from pod

That covers using NFS in a Pod. To recap how the PV, PVC, Pod, and NFS server relate: the Pod references the PVC as a volume, the PVC is bound to the PV, and the PV points at the exported directory on the NFS server.
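The binding between `nfs-static-pvc` and `nfs-1g-pv` works because the claim's storage class matches, its access mode is offered by the PV, and the PV's capacity covers the request. The sketch below illustrates only those three checks; it is a simplification of what Kubernetes does (selectors, volume mode, and other criteria are ignored), and the function names `to_bytes` and `pv_can_bind` are hypothetical.

```shell
# Convert a binary quantity (Ki, Mi, Gi suffixes only) to bytes.
to_bytes() {
  q="$1"
  case "$q" in
    *Ki) echo $(( ${q%Ki} * 1024 )) ;;
    *Mi) echo $(( ${q%Mi} * 1024 * 1024 )) ;;
    *Gi) echo $(( ${q%Gi} * 1024 * 1024 * 1024 )) ;;
    *)   echo "$q" ;;   # assume a plain byte count
  esac
}

# Return 0 if a PV could satisfy a PVC under the simplified rules.
# Arguments: <pv-class> <pv-modes (space separated)> <pv-capacity>
#            <pvc-class> <pvc-mode> <pvc-request>
pv_can_bind() {
  pv_class="$1"; pv_modes="$2"; pv_cap="$3"
  pvc_class="$4"; pvc_mode="$5"; pvc_req="$6"
  # 1. Storage class names must be identical.
  [ "$pv_class" = "$pvc_class" ] || return 1
  # 2. The requested access mode must be one the PV offers.
  case " $pv_modes " in
    *" $pvc_mode "*) ;;
    *) return 1 ;;
  esac
  # 3. The PV must be at least as large as the request.
  [ "$(to_bytes "$pv_cap")" -ge "$(to_bytes "$pvc_req")" ]
}

# The static PV and PVC from this section satisfy all three checks:
pv_can_bind nfs "ReadWriteMany" 1Gi nfs ReadWriteMany 1Gi && echo "can bind"
```

A claim that asks for a different class, for example `nfs-client`, fails the first check against this PV, which is why a static PV and its claim must agree on `storageClassName`.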

The PVs above were all defined by hand. Next, let's look at how to create PVs automatically.

3: NFS provisioner

3.1: Define dynamic volumes

Creating PVs automatically is called dynamic provisioning; by contrast, the manual approach used so far can be called static provisioning. Each kind of storage backend has its own provisioner, and to use a given storage medium you only need to create the resources for that provisioner. For NFS the provisioner project is nfs-subdir-external-provisioner. We need three YAML files from it: rbac.yaml, class.yaml, and deployment.yaml. Below are my locally modified versions.

rbac.yaml

    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: nfs-client-provisioner
      # replace with namespace where provisioner is deployed
      namespace: kube-system
    ---
    kind: ClusterRole
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: nfs-client-provisioner-runner
    rules:
      - apiGroups: [""]
        resources: ["nodes"]
        verbs: ["get", "list", "watch"]
      - apiGroups: [""]
        resources: ["persistentvolumes"]
        verbs: ["get", "list", "watch", "create", "delete"]
      - apiGroups: [""]
        resources: ["persistentvolumeclaims"]
        verbs: ["get", "list", "watch", "update"]
      - apiGroups: ["storage.k8s.io"]
        resources: ["storageclasses"]
        verbs: ["get", "list", "watch"]
      - apiGroups: [""]
        resources: ["events"]
        verbs: ["create", "update", "patch"]
    ---
    kind: ClusterRoleBinding
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: run-nfs-client-provisioner
    subjects:
      - kind: ServiceAccount
        name: nfs-client-provisioner
        # replace with namespace where provisioner is deployed
        namespace: kube-system
    roleRef:
      kind: ClusterRole
      name: nfs-client-provisioner-runner
      apiGroup: rbac.authorization.k8s.io
    ---
    kind: Role
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: leader-locking-nfs-client-provisioner
      # replace with namespace where provisioner is deployed
      namespace: kube-system
    rules:
      - apiGroups: [""]
        resources: ["endpoints"]
        verbs: ["get", "list", "watch", "create", "update", "patch"]
    ---
    kind: RoleBinding
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: leader-locking-nfs-client-provisioner
      # replace with namespace where provisioner is deployed
      namespace: kube-system
    subjects:
      - kind: ServiceAccount
        name: nfs-client-provisioner
        # replace with namespace where provisioner is deployed
        namespace: kube-system
    roleRef:
      kind: Role
      name: leader-locking-nfs-client-provisioner
      apiGroup: rbac.authorization.k8s.io

class.yaml

    apiVersion: storage.k8s.io/v1
    kind: StorageClass
    metadata:
      name: nfs-client
    provisioner: k8s-sigs.io/nfs-subdir-external-provisioner # or choose another name, must match deployment's env PROVISIONER_NAME
    parameters:
      archiveOnDelete: "false"

deployment.yaml

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: nfs-client-provisioner
      labels:
        app: nfs-client-provisioner
      # replace with namespace where provisioner is deployed
      namespace: kube-system
    spec:
      replicas: 1
      strategy:
        type: Recreate
      selector:
        matchLabels:
          app: nfs-client-provisioner
      template:
        metadata:
          labels:
            app: nfs-client-provisioner
        spec:
          serviceAccountName: nfs-client-provisioner
          containers:
            - name: nfs-client-provisioner
              image: chronolaw/nfs-subdir-external-provisioner:v4.0.2
              volumeMounts:
                - name: nfs-client-root
                  mountPath: /persistentvolumes
              env:
                - name: PROVISIONER_NAME
                  value: k8s-sigs.io/nfs-subdir-external-provisioner
                - name: NFS_SERVER
                  value: 192.168.64.131
                - name: NFS_PATH
                  value: /tmp/nfs
          volumes:
            - name: nfs-client-root
              nfs:
                server: 192.168.64.131
                path: /tmp/nfs

After applying each file, check the result:

    dongyunqi@mongodaddy:~/k8s$ kubectl get deploy -n kube-system
    NAME                     READY   UP-TO-DATE   AVAILABLE   AGE
    coredns                  2/2     2            2           5d1h
    nfs-client-provisioner   1/1     1            1           5h38m
    dongyunqi@mongodaddy:~/k8s$ kubectl get pod -n kube-system -l app=nfs-client-provisioner
    NAME                                      READY   STATUS    RESTARTS   AGE
    nfs-client-provisioner-6b7bd59ccf-76xmb   1/1     Running   0          5h39m

With the provisioner running, the next step is to define a storage class that categorizes the dynamic PVs.

3.2: Storage class

This part is fairly simple. The YAML is as follows:

    dongyunqi@mongodaddy:~/k8s$ cat dynamic_class.yml
    apiVersion: storage.k8s.io/v1
    kind: StorageClass
    metadata:
      name: nfs-client-retained
    provisioner: k8s-sigs.io/nfs-subdir-external-provisioner
    parameters:
      onDelete: "retain"

Besides naming the class, this also configures behavior through parameters: `onDelete: "retain"` tells the provisioner to keep the directory on the NFS server when the claim is deleted.

3.3: Define the PVC

Now we can define a PVC that uses a dynamic PV. The YAML is as follows:

    dongyunqi@mongodaddy:~/k8s$ cat dynamic_pvc.yml
    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: nfs-dyn-10m-pvc
    spec:
      storageClassName: nfs-client
      accessModes:
        - ReadWriteMany
      resources:
        requests:
          storage: 10Mi

This requests 10Mi from the storage class `nfs-client`, which is the class defined in class.yaml; to use the class from section 3.2 instead, set `storageClassName` to `nfs-client-retained`. After the PVC is applied, a PV is created automatically and bound to it:

    dongyunqi@mongodaddy:~/k8s$ kubectl get pv
    NAME                                       CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS   CLAIM                     STORAGECLASS   REASON   AGE
    pvc-d45bca08-34a6-4c32-9382-6abac724c842   10Mi       RWX            Delete           Bound    default/nfs-dyn-10m-pvc   nfs-client              15h
    dongyunqi@mongodaddy:~/k8s$ kubectl get pvc
    NAME              STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS   AGE
    nfs-dyn-10m-pvc   Bound    pvc-d45bca08-34a6-4c32-9382-6abac724c842   10Mi       RWX            nfs-client     15h

The PV was created automatically with the name pvc-d45bca08-34a6-4c32-9382-6abac724c842. The `pvc-` prefix is not a typo: a dynamically provisioned PV is named `pvc-` followed by the UID of the claim that triggered its creation.

3.4: Using it in a Pod

The YAML is as follows:

    dongyunqi@mongodaddy:~/k8s$ cat dynamic_pod.yml
    apiVersion: v1
    kind: Pod
    metadata:
      name: nfs-dyn-pod
    spec:
      volumes:
        - name: nfs-dyn-10m-vol
          persistentVolumeClaim:
            claimName: nfs-dyn-10m-pvc
      containers:
        - name: nfs-dyn-test
          image: nginx:alpine
          ports:
            - containerPort: 80
          volumeMounts:
            - name: nfs-dyn-10m-vol
              mountPath: /tmp

Here the NFS share is mounted at /tmp in the Pod. For the dynamically provisioned PV, the provisioner creates a subdirectory under the export on the NFS server:

    dongyunqi@mongodaddy:/tmp/nfs/default-nfs-dyn-10m-pvc-pvc-d45bca08-34a6-4c32-9382-6abac724c842$ pwd
    /tmp/nfs/default-nfs-dyn-10m-pvc-pvc-d45bca08-34a6-4c32-9382-6abac724c842

Files created under /tmp in the Pod now appear in that directory.

In the Pod:

    dongyunqi@mongodaddy:~$ kubectl get pod
    NAME          READY   STATUS    RESTARTS   AGE
    nfs-dyn-pod   1/1     Running   0          15h
    dongyunqi@mongodaddy:~$ kubectl exec -it nfs-dyn-pod -- sh
    / # cd /tmp/
    /tmp # pwd
    /tmp
    /tmp # touch hello.txt
    /tmp # echo "helloooooo" > hello.txt
    /tmp #

In the shared directory:

    dongyunqi@mongodaddy:~$ cat /tmp/nfs/default-nfs-dyn-10m-pvc-pvc-d45bca08-34a6-4c32-9382-6abac724c842/hello.txt
    helloooooo

Afterword

Summary

This article showed how to mount an NFS server into a Pod, and how to use a provisioner to create PVs automatically and cut down on repetitive manual work. I hope it helps.
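As a supplement to section 3.4: the directory the provisioner created follows the pattern `<namespace>-<PVC name>-<PV name>` under the exported path. The sketch below reproduces that naming so the directory for a given claim can be located on the NFS server. `provisioned_dir` is a hypothetical helper name, and the sketch assumes the provisioner's default naming with no custom path pattern configured on the storage class.

```shell
# Hypothetical helper: compute the directory a dynamically provisioned
# volume uses on the NFS server, assuming the default naming scheme
# <nfs-root>/<namespace>-<pvc-name>-<pv-name>.
provisioned_dir() {
  nfs_root="$1"; namespace="$2"; pvc_name="$3"; pv_name="$4"
  printf '%s/%s-%s-%s\n' "$nfs_root" "$namespace" "$pvc_name" "$pv_name"
}

# The PV name can be read from the bound claim, for example:
#   kubectl get pvc nfs-dyn-10m-pvc -o jsonpath='{.spec.volumeName}'

provisioned_dir /tmp/nfs default nfs-dyn-10m-pvc pvc-d45bca08-34a6-4c32-9382-6abac724c842
# prints /tmp/nfs/default-nfs-dyn-10m-pvc-pvc-d45bca08-34a6-4c32-9382-6abac724c842
```

The printed path matches the directory shown in section 3.4, which is a quick way to confirm which subdirectory belongs to which claim when several PVCs share one export.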
