BERT文本分类 |

您所在的位置:网站首页 › 房县今日头条新闻 › BERT文本分类 |

BERT文本分类

|

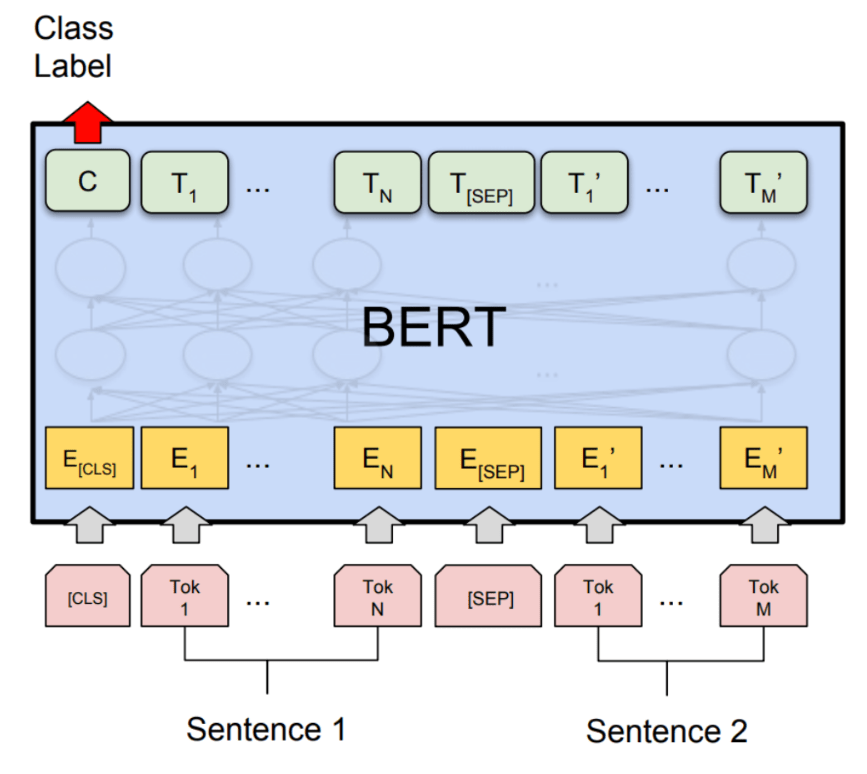

在上一篇中我们基于美团评论构建了分类模型,取得了较好的结果。然而当数据量骤增,分类目标较多的时候,上述模型就不再适用,这时就需要将数据放到GPU上进行训练,并对模型进行适当的优化。今天我们基于今日头条新闻数据集进行文本分类,完整代码及权重可在文末获取。

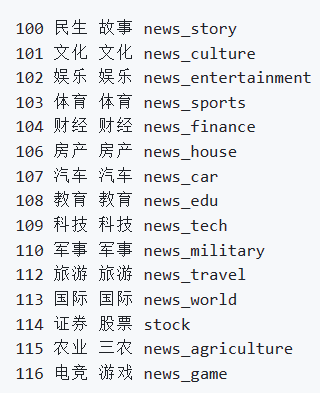

一.今日头条新闻文本 数据来源:今日头条客户端 ;数据规模:共382688条,分布于15个分类中。每行为一条数据,以_!_分割的个字段,从前往后分别是 新闻ID,分类code(见下文),分类名称(见下文),新闻字符串(仅含标题),新闻关键词。原始数据集格式如下:“6552431613437805063_!_102_!_news_entertainment_!_谢娜为李浩菲澄清网络谣言,之后她的两个行为给自己加分_!_佟丽娅,网络谣言,快乐大本营,李浩菲,谢娜,观众们”。 对于标签的对照如下图所示:

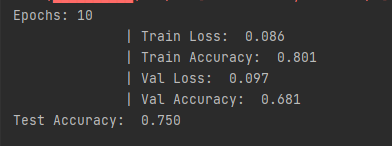

二.代码实现 1.下载预训练模型 bert-chinese: https://huggingface.co/bert-base-chinese 今日头条新闻数据集 https://github.com/aceimnorstuvwxz/toutiao-text-classfication-dataset 2. 数据预处理 在模型构建前,我们需要先进行数据预处理,提取出标签和文本。 # 实现文本的预处理,保存至csv文件 import pandas as pd def label_text(data): items = data.split('_!_') code = items[2] title = items[3] keyword = items[4] label = code text = title + keyword return label, text df = pd.DataFrame(columns=['label', 'text']) with open('toutiao_cat_data.txt', 'r', encoding='utf-8') as file: for line in file: label, text = label_text(line) print(label) df = df._append({'label': label, 'text': text}, ignore_index=True) df.to_csv('toutiao_cat_data.csv', index=False, header=True)3. 训练代码 import torch import numpy as np from transformers import BertTokenizer import pandas as pd from torch import nn from transformers import BertModel from torch.optim import Adam from tqdm import tqdm df = pd.read_csv('toutiao_cat_data.csv') df = df.head(1600) #时间原因,我只取了1600条训练 np.random.seed(112) df_train, df_val, df_test = np.split(df.sample(frac=1, random_state=42), [int(.8*len(df)), int(.9*len(df))]) # 拆分为训练集、验证集和测试集,比例为 80:10:10。 tokenizer = BertTokenizer.from_pretrained('bert-base-chinese') # 读取数据集 labels = {'news_story':0, 'news_culture':1, 'news_entertainment':2, 'news_sports':3, 'news_finance':4, 'news_house':5, 'news_car':6, 'news_edu':7, 'news_tech':8, 'news_military':9, 'news_travel':10, 'news_world':11, 'stock':12, 'news_agriculture':13, 'news_game':14 } class Dataset(torch.utils.data.Dataset): def __init__(self, df): self.labels = [labels[label] for label in df['label']] self.texts = [tokenizer(text, padding='max_length', max_length = 512, truncation=True, return_tensors="pt") for text in df['text']] def classes(self): return self.labels def __len__(self): return len(self.labels) def get_batch_labels(self, idx): # Fetch a batch of labels return np.array(self.labels[idx]) def get_batch_texts(self, idx): # Fetch a batch of inputs return self.texts[idx] def __getitem__(self, idx): batch_texts = self.get_batch_texts(idx) batch_y = self.get_batch_labels(idx) return batch_texts, batch_y # 构建模型 class BertClassifier(nn.Module): def __init__(self, dropout=0.5): super(BertClassifier, self).__init__() self.bert = BertModel.from_pretrained('bert-base-chinese',num_labels=15) self.dropout = nn.Dropout(dropout) self.linear = nn.Linear(768, 15) self.relu = nn.ReLU() def forward(self, input_id, mask): _, pooled_output = self.bert(input_ids= input_id, attention_mask=mask,return_dict=False) dropout_output = self.dropout(pooled_output) linear_output = self.linear(dropout_output) final_layer = self.relu(linear_output) return final_layer # 训练模型 def train(model, train_data, val_data, learning_rate, epochs, batch_size): # 通过Dataset类获取训练和验证集 train, val = Dataset(train_data), Dataset(val_data) # DataLoader根据batch_size获取数据,训练时选择打乱样本 train_dataloader = torch.utils.data.DataLoader(train, batch_size, shuffle=True) val_dataloader = torch.utils.data.DataLoader(val, batch_size) # 判断是否使用GPU use_cuda = torch.cuda.is_available() device = torch.device("cuda" if use_cuda else "cpu") # 定义损失函数和优化器 criterion = nn.CrossEntropyLoss() optimizer = Adam(model.parameters(), lr=learning_rate) if use_cuda: model = model.cuda() criterion = criterion.cuda() # 开始进入训练循环 for epoch_num in range(epochs): # 定义两个变量,用于存储训练集的准确率和损失 total_acc_train = 0 total_loss_train = 0 # 进度条函数tqdm for train_input, train_label in tqdm(train_dataloader): train_label = train_label.to(device) mask = train_input['attention_mask'].to(device) input_id = train_input['input_ids'].squeeze(1).to(device) # 通过模型得到输出 output = model(input_id, mask) # 计算损失 batch_loss = criterion(output, train_label.long()) total_loss_train += batch_loss.item() # 计算精度 acc = (output.argmax(dim=1) == train_label).sum().item() total_acc_train += acc # 模型更新 model.zero_grad() batch_loss.backward() optimizer.step() # ------ 验证模型 ----------- # 定义两个变量,用于存储验证集的准确率和损失 total_acc_val = 0 total_loss_val = 0 # 不需要计算梯度 with torch.no_grad(): # 循环获取数据集,并用训练好的模型进行验证 for val_input, val_label in val_dataloader: # 如果有GPU,则使用GPU,接下来的操作同训练 val_label = val_label.to(device) mask = val_input['attention_mask'].to(device) input_id = val_input['input_ids'].squeeze(1).to(device) output = model(input_id, mask) batch_loss = criterion(output, val_label.long()) total_loss_val += batch_loss.item() acc = (output.argmax(dim=1) == val_label).sum().item() total_acc_val += acc print( f'''Epochs: {epoch_num + 1} | Train Loss: {total_loss_train / len(train_data): .3f} | Train Accuracy: {total_acc_train / len(train_data): .3f} | Val Loss: {total_loss_val / len(val_data): .3f} | Val Accuracy: {total_acc_val / len(val_data): .3f}''') EPOCHS = 10 # 训练轮数 model = BertClassifier() # 定义的模型 LR = 1e-6 # 学习率 Batch_Size = 16 # 看你的GPU,要合理取值 train(model, df_train, df_val, LR, EPOCHS, Batch_Size) torch.save(model.state_dict(), 'BERT-toutiao.pt') # 评估模型 def evaluate(model, test_data): test = Dataset(test_data) test_dataloader = torch.utils.data.DataLoader(test, batch_size=16) use_cuda = torch.cuda.is_available() device = torch.device("cuda" if use_cuda else "cpu") if use_cuda: model = model.cuda() total_acc_test = 0 with torch.no_grad(): for test_input, test_label in test_dataloader: test_label = test_label.to(device) mask = test_input['attention_mask'].to(device) input_id = test_input['input_ids'].squeeze(1).to(device) output = model(input_id, mask) acc = (output.argmax(dim=1) == test_label).sum().item() total_acc_test += acc print(f'Test Accuracy: {total_acc_test / len(test_data): .3f}') evaluate(model, df_test)4. 训练结果 原始数据有382688条,需要训练时间很久,小编只选了1600条,需要的小伙伴自己用服务器去跑吧!经过10轮训练,训练结果如下,可以看到效果已经很不错了。进一步增大数据集和轮数,可以取得更好的结果。

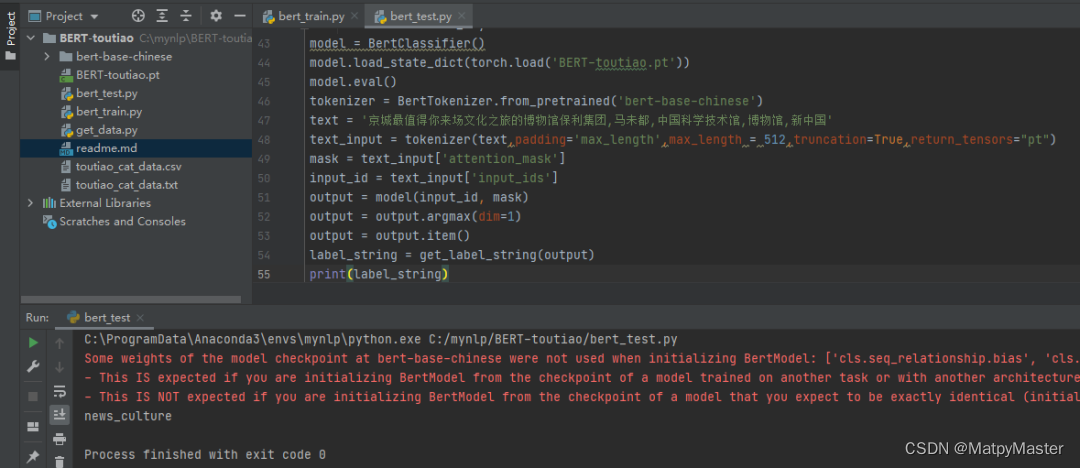

5. 测试代码 import torch from transformers import BertTokenizer from torch import nn from transformers import BertModel def get_label_string(label): labels = {'news_story': 0, 'news_culture': 1, 'news_entertainment': 2, 'news_sports': 3, 'news_finance': 4, 'news_house': 5, 'news_car': 6, 'news_edu': 7, 'news_tech': 8, 'news_military': 9, 'news_travel': 10, 'news_world': 11, 'stock': 12, 'news_agriculture': 13, 'news_game': 14 } for key, value in labels.items(): if value == label: return key return None # 构建模型 class BertClassifier(nn.Module): def __init__(self, dropout=0.5): super(BertClassifier, self).__init__() self.bert = BertModel.from_pretrained('bert-base-chinese',num_labels=15) self.dropout = nn.Dropout(dropout) self.linear = nn.Linear(768, 15) self.relu = nn.ReLU() def forward(self, input_id, mask): _, pooled_output = self.bert(input_ids= input_id, attention_mask=mask,return_dict=False) dropout_output = self.dropout(pooled_output) linear_output = self.linear(dropout_output) final_layer = self.relu(linear_output) return final_layer model = BertClassifier() model.load_state_dict(torch.load('BERT-toutiao.pt')) model.eval() tokenizer = BertTokenizer.from_pretrained('bert-base-chinese') text = '京城最值得你来场文化之旅的博物馆保利集团,马未都,中国科学技术馆,博物馆,新中国' text_input = tokenizer(text,padding='max_length',max_length = 512,truncation=True,return_tensors="pt") mask = text_input['attention_mask'] input_id = text_input['input_ids'] output = model(input_id, mask) output = output.argmax(dim=1) output = output.item() label_string = get_label_string(output) print(label_string)

喜欢的小伙伴可关注公众号回复“BERT头条”,即可获取源代码和训练好的权重文件。 最后: 会不定期发布相关设计内容包括但不限于如下内容:信号处理、通信仿真、算法设计、matlab appdesigner,gui设计、simulink仿真......希望能帮到你!

|

【本文地址】

今日新闻 |

推荐新闻 |