|

本例程是一个处理图像数据的标准的分布过程,利用CNN 实现对狗的品种的鉴定。

实现过程在代码中会有相关注释。

本例程数据集资源在这 https://download.csdn.net/download/rh8866/12532963

import os

#这里用到opencv,需要安装此包(用了清华镜像)

#pip install -i https://mirrors.aliyun.com/pypi/simple/ opencv-python

import cv2

import numpy as np

import pandas as pd

#Tqdm 是一个快速,可扩展的Python进度条,可以在 Python 长循环中添加一个进度提示信息

from tqdm import tqdm

import seaborn as sns

import matplotlib.pyplot as plt

import sklearn

from sklearn.model_selection import train_test_split

#读取数据的标签

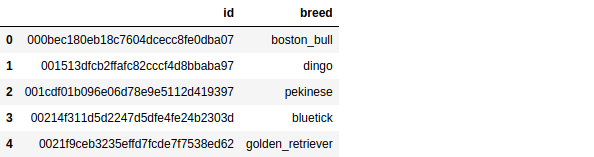

labels = pd.read_csv('./dataset/dog-breed/labels.csv')

labels.head()

#id是train数据集样本图片的名称 ,breed是标签

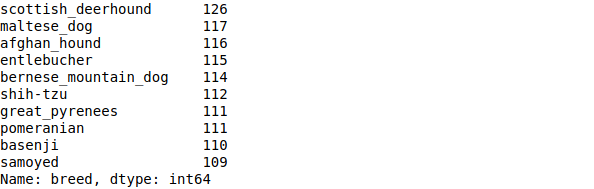

#看看数据的分类统计

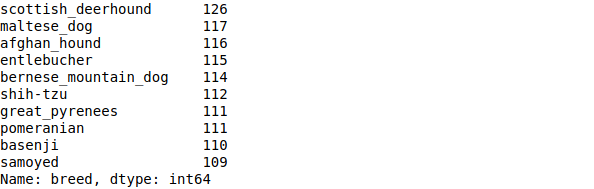

breed_count = labels['breed'].value_counts()

breed_count.head(10)

breed_count.shape

#可以看到有120个种类

# (120,)

#将标签转成one_hot形式

targets = pd.Series(labels['breed'])

one_hot = pd.get_dummies(targets,sparse=True)

one_hot_labels = np.asarray(one_hot)

#设置样本输入模型的参数

img_rows = 128

img_cols = 128

num_channel=1

#输出一张图片看看样式

img_1 = cv2.imread('./dataset/dog-breed/train/001513dfcb2ffafc82cccf4d8bbaba97.jpg',0)

plt.title('Original Image')

plt.imshow(img_1)

#输出图片shape

print(img_1.shape)

#(375, 500)

#由上面的输出可以看到图片是(375, 500) ,为了方便计算我们将其调整大小为128*128

img_1_resize = cv2.resize(img_1,(img_rows,img_cols))

print(img_1_resize.shape)

#(128, 128)

plt.title('Resized Image')

plt.imshow(img_1_resize)

x_feature = []

y_feature = []

i = 0

#加载训练数据和标签

for f,img in tqdm(labels.values):

train_img = cv2.imread('./dataset/dog-breed/train/{}.jpg'.format(f),0)

label = one_hot_labels[i]

train_img_resize = cv2.resize(train_img,(img_rows,img_cols))

x_feature.append(train_img_resize)

y_feature.append(label)

i += 1

#将数据归一化

x_train_data = np.array(x_feature,np.float32) / 255.

print(x_train_data.shape)

#为了符合keras训练数据的格式,我们给数据增加一个纬度

x_train_data = np.expand_dims(x_train_data,axis = 3)

print(x_train_data.shape)

#(10222, 128, 128)

#(10222, 128, 128, 1)

y_train_data = np.array(y_feature,np.uint8)

print(y_train_data.shape)

#(10222, 120)

#分割训练集和验证集

x_train,x_val,y_train,y_val = train_test_split(x_train_data,y_train_data,test_size=0.2,random_state=2)

print(x_train.shape)

print(x_val.shape)

#(8177, 128, 128, 1)

#(2045, 128, 128, 1)

#接下来我们对测试数据做同样的操作

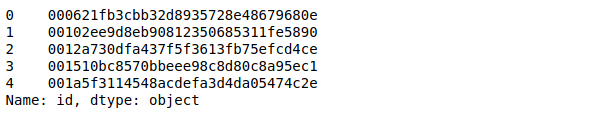

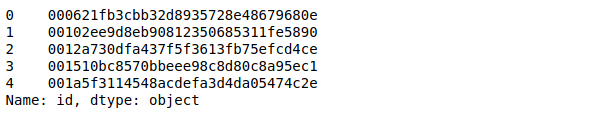

submission = pd.read_csv('./dataset/dog-breed/sample_submission.csv')

test_img = submission['id']

test_img.head()

x_test_feature = []

i = 0

for f in tqdm(test_img.values):

img = cv2.imread('./dataset/dog-breed/test/{}.jpg'.format(f),0)

img_resize = cv2.resize(img,(img_rows,img_cols))

x_test_feature.append(img_resize)

#数据归一化

x_test_data = np.array(x_test_feature, np.float32) / 255.

print (x_test_data.shape)

#增加一个纬度

x_test_data = np.expand_dims(x_test_data, axis = 3)

print (x_test_data.shape)

# (10357, 128, 128)

# (10357, 128, 128, 1)

#现在我们的训练数据,验证数据以及测试聚居都准备妥当,接下来我们开始构建模型

from keras.models import Sequential

from keras.layers import Dense,Dropout

from keras.layers import Convolution2D

from keras.layers import MaxPooling2D

from keras.layers import Flatten

#我们构建一个简单的CNN 模型

model = Sequential()

#卷积层

model.add(Convolution2D(filters = 64,kernel_size = (4,4),padding = 'Same',

activation='relu',input_shape = (img_rows,img_cols,num_channel)))

#池化 最大池化

model.add(MaxPooling2D(pool_size=(2,2)))

#卷积层

model.add(Convolution2D(filters = 64,kernel_size = (4,4),padding='Same',

activation='relu'))

#最大池化

model.add(MaxPooling2D(pool_size=(2,2)))

#展平数据

model.add(Flatten())

#全连接

model.add(Dense(units=120,activation='relu'))

#输出层(由上面可知有120个输出)

model.add(Dense(units=120,activation='softmax'))

#编译模型

model.compile(optimizer='adam',loss = "categorical_crossentropy",metrics=['accuracy'])

model.summary()

#模型结构如下

#开始训练,我们设batch_size = 128,为了能节约时间 epochs为 2(我们只是关注该案例的个工作方法和流程,至于计算速度和准确度暂时我们不考虑,将在后续的博客中探讨这个问题)

batch_size = 128

nb_epochs = 2

history = model.fit(x_train,y_train,

batch_size=batch_size,

epochs=nb_epochs,

verbose=2,

validation_data=(x_val,y_val),

initial_epoch=0)

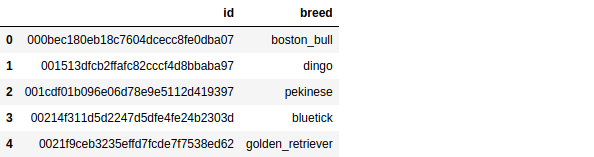

results = model.predict(x_test_data)

prediction = pd.DataFrame(results)

col_names = one_hot.columns.values

prediction.columns = col_names

prediction.insert(0, 'id', submission['id'])

submission = prediction

submission.head()

#保存预测结果

submission.to_csv('new_submission.csv', index=False)

至此本例程就结束了,精确度暂不考虑,勿喷,有好的建议欢迎评论讨论。

|