[Best Practice] Build a "Search by Image" Engine in 4 Steps with Alibaba Cloud Elasticsearch Vector Search


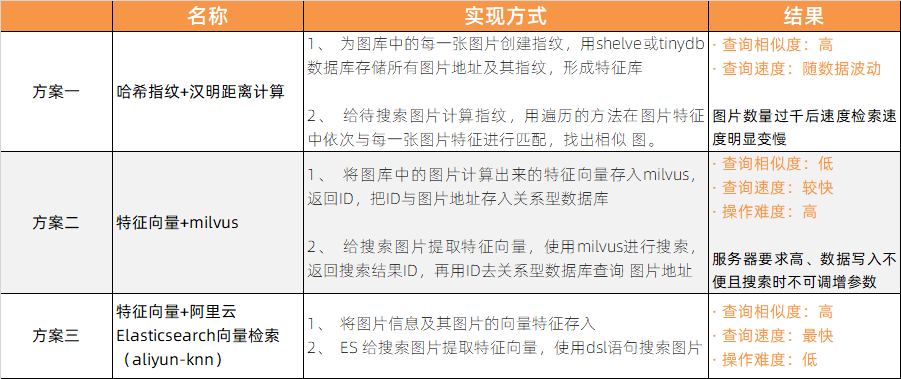

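Author: 小森同学

A real-world practice shared by an Alibaba Cloud Elasticsearch customer. The image feature extraction in this article uses the VGGNet library from yongyuan.name; many thanks to its author.

Image search is a common feature on shopping-guide websites. There are many ways to implement it, such as "hash fingerprint + Hamming distance" or "feature vectors + Milvus", but in real-world scenarios it is hard to make a solution fast, accurate, and simple all at once.

Comparison of the pros and cons of image search approaches: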

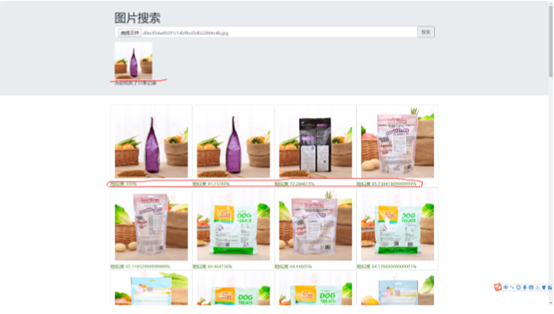
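For readers unfamiliar with the "hash fingerprint + Hamming distance" alternative mentioned above, here is a minimal sketch of the idea. It assumes an average-hash style fingerprint, which is only one common choice; the article does not say which fingerprint it has in mind. The names `average_hash` and `hamming_distance` are hypothetical, and images are represented as plain 2-D grayscale NumPy arrays to keep the example self-contained.

```python
import numpy as np


def average_hash(gray, hash_size=8):
    """Return a flat boolean fingerprint with hash_size * hash_size bits."""
    gray = np.asarray(gray, dtype=np.float64)
    h, w = gray.shape
    # Crop so both sides divide evenly, then average each block
    # (a crude way of shrinking the image to hash_size x hash_size).
    bh, bw = h // hash_size, w // hash_size
    cropped = gray[:bh * hash_size, :bw * hash_size]
    small = cropped.reshape(hash_size, bh, hash_size, bw).mean(axis=(1, 3))
    # Each bit records whether a block is brighter than the overall average.
    return (small > small.mean()).ravel()


def hamming_distance(a, b):
    """Count the bit positions where two fingerprints differ."""
    return int(np.count_nonzero(a != b))


# Synthetic "images", for illustration only.
gradient = np.arange(64 * 64).reshape(64, 64)
brighter = gradient + 10               # same picture, uniformly brighter
inverted = gradient.max() - gradient   # a very different picture

print(hamming_distance(average_hash(gradient), average_hash(brighter)))
print(hamming_distance(average_hash(gradient), average_hash(inverted)))
```

A small distance suggests near-duplicate images. A fingerprint like this is cheap to compute, but it mostly captures coarse brightness layout, which is one reason the article turns to CNN feature vectors instead.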

The following is based on Alibaba Cloud Elasticsearch 6.7 with the Alibaba Cloud Elasticsearch vector search plugin aliyun-knn installed. Image feature vectors are designed to have 512 dimensions.

The aliyun-knn plugin is not available for self-managed Elasticsearch. For a self-managed cluster, open-source Elasticsearch 7.x with the fast-elasticsearch-vector-scoring plugin (https://github.com/lior-k/fast-elasticsearch-vector-scoring/) is recommended instead.

1. Elasticsearch index design

1.1 Index structure

```
# Create an image index
PUT images_v2
{
  "aliases": {
    "images": {}
  },
  "settings": {
    "index.codec": "proxima",
    "index.vector.algorithm": "hnsw",
    "index.number_of_replicas": 1,
    "index.number_of_shards": 3
  },
  "mappings": {
    "_doc": {
      "properties": {
        "feature": {
          "type": "proxima_vector",
          "dim": 512
        },
        "relation_id": {
          "type": "keyword"
        },
        "image_path": {
          "type": "keyword"
        }
      }
    }
  }
}
```

1.2 DSL query

```
GET images/_search
{
  "query": {
    "hnsw": {
      "feature": {
        "vector": [255,....255],
        "size": 3,
        "ef": 1
      }
    }
  },
  "from": 0,
  "size": 20,
  "sort": [
    {
      "_score": {
        "order": "desc"
      }
    }
  ],
  "collapse": {
    "field": "relation_id"
  },
  "_source": {
    "includes": [
      "relation_id",
      "image_path"
    ]
  }
}
```

2. Image features

extract_cnn_vgg16_keras.py

```python
# -*- coding: utf-8 -*-
# Author: yongyuan.name
import numpy as np
from numpy import linalg as LA
from keras.applications.vgg16 import VGG16
from keras.preprocessing import image
from keras.applications.vgg16 import preprocess_input
from PIL import Image, ImageFile

# Let PIL load truncated (partially downloaded) images instead of raising
ImageFile.LOAD_TRUNCATED_IMAGES = True


class VGGNet:
    def __init__(self):
        # weights: 'imagenet'
        # pooling: 'max' or 'avg'
        # input_shape: (width, height, 3), width and height should >= 48
        self.input_shape = (224, 224, 3)
        self.weight = 'imagenet'
        self.pooling = 'max'
        # include_top=False with global pooling yields a 512-dimensional vector,
        # which matches "dim": 512 in the index mapping
        self.model = VGG16(weights=self.weight,
                           input_shape=(self.input_shape[0], self.input_shape[1], self.input_shape[2]),
                           pooling=self.pooling,
                           include_top=False)
        # Run one dummy prediction so the model is fully initialized up front
        self.model.predict(np.zeros((1, 224, 224, 3)))

    def extract_feat(self, img_path):
        """
        Use vgg16 model to extract features
        Output normalized feature vector
        """
        img = image.load_img(img_path, target_size=(self.input_shape[0], self.input_shape[1]))
        img = image.img_to_array(img)
        img = np.expand_dims(img, axis=0)
        img = preprocess_input(img)
        feat = self.model.predict(img)
        # L2-normalize so every feature vector has unit length
        norm_feat = feat[0] / LA.norm(feat[0])
        return norm_feat
```

```python
# Get the feature vector of an image
from extract_cnn_vgg16_keras import VGGNet

model = VGGNet()
file_path = "./demo.jpg"
queryVec = model.extract_feat(file_path)
feature = queryVec.tolist()
```

3. Writing image features to Alibaba Cloud Elasticsearch

helper.py

```python
import re
import urllib.request


def strip(path):
    """
    Clean a folder name by removing the characters
    that are illegal in Windows folder names.
    :param path: name to clean
    :return: cleaned name
    """
    # Characters removed: ? \ * | " < > : /
    path = re.sub(r'[?\\*|"<>:/]', '', str(path))
    return path


def getfilename(url):
    """
    Get the trailing file name from a URL.
    :param url:
    :return:
    """
    filename = url.split('/')[-1]
    filename = strip(filename)
    return filename


def urllib_download(url, filename):
    """
    Download url to the local file filename.
    :param url:
    :param filename:
    :return:
    """
    return urllib.request.urlretrieve(url, filename)
```

train.py

```python
# coding=utf-8
import os

import mysql.connector
from elasticsearch5 import Elasticsearch, helpers

from extract_cnn_vgg16_keras import VGGNet
from helper import urllib_download, getfilename

model = VGGNet()
http_auth = ("elastic", "123455")
es = Elasticsearch("http://127.0.0.1:9200", http_auth=http_auth)
mydb = mysql.connector.connect(
    host="127.0.0.1",   # database host
    user="root",        # database user
    passwd="123456",    # database password
    database="images"
)
mycursor = mydb.cursor()
image_path = "./images/"
os.makedirs(image_path, exist_ok=True)  # make sure the download directory exists


def get_data(page=1):
    """Fetch one page of (id, relation_id, photo) rows from MySQL."""
    page_size = 20
    offset = (page - 1) * page_size
    sql = "SELECT id, relation_id, photo FROM images LIMIT %s, %s"
    mycursor.execute(sql, (offset, page_size))
    myresult = mycursor.fetchall()
    return myresult


def train_image_feature(myresult):
    """Download each image, extract its feature vector, and bulk-index it."""
    indexName = "images"
    photo_path = "http://<your-domain>/{0}"
    actions = []
    for x in myresult:
        doc_id = str(x[0])
        relation_id = x[1]
        # photo = x[2].decode(encoding="utf-8")
        photo = x[2]
        full_photo = photo_path.format(photo)
        filename = image_path + getfilename(full_photo)
        if not os.path.exists(filename):
            try:
                urllib_download(full_photo, filename)
            except Exception:
                print("id:{0}: failed to download image {1}".format(doc_id, full_photo))
                continue
        if not os.path.exists(filename):
            continue
        try:
            feature = model.extract_feat(filename).tolist()
            action = {
                "_op_type": "index",
                "_index": indexName,
                "_type": "_doc",
                "_id": doc_id,
                "_source": {
                    "relation_id": relation_id,
                    "feature": feature,
                    "image_path": photo
                }
            }
            actions.append(action)
        except Exception:
            print("id:{0}: failed to extract features from image {1}".format(doc_id, full_photo))
            continue
    # print(actions)
    succeed_num = 0
    for ok, response in helpers.streaming_bulk(es, actions):
        if not ok:
            print(ok)
            print(response)
        else:
            succeed_num += 1
    print("Updated {0} documents in this batch".format(succeed_num))
    es.indices.refresh(index=indexName)


page = 1
while True:
    print("Current page: {0}".format(page))
    myresult = get_data(page=page)
    if not myresult:
        print("No more data, exiting")
        break
    train_image_feature(myresult)
    page += 1
```

4. Searching for images

```python
import requests
import json
import os
import time
from elasticsearch5 import Elasticsearch
from extract_cnn_vgg16_keras import VGGNet
# Assumption: the original snippet uses `request` without importing it. The
# attributes it relies on (files, content_type, save) match Flask's request
# object, so Flask is assumed here; run this inside a request handler.
from flask import request

model = VGGNet()
http_auth = ("elastic", "123455")
es = Elasticsearch("http://127.0.0.1:9200", http_auth=http_auth)

# Save the uploaded image
upload_image_path = "./runtime/"
upload_image = request.files.get("image")
upload_image_type = upload_image.content_type.split('/')[-1]
file_name = str(int(time.time())) + '.' + upload_image_type
file_path = upload_image_path + file_name
upload_image.save(file_path)

# Compute the feature vector of the image
queryVec = model.extract_feat(file_path)
feature = queryVec.tolist()

# Delete the temporary image
os.remove(file_path)

# Search Elasticsearch with the feature vector
body = {
    "query": {
        "hnsw": {
            "feature": {
                "vector": feature,
                "size": 5,
                "ef": 10
            }
        }
    },
    # "collapse": {
    #     "field": "relation_id"
    # },
    "_source": {"includes": ["relation_id", "image_path"]},
    "from": 0,
    "size": 40
}
indexName = "images"
res = es.search(index=indexName, body=body)
# It is best to filter out low-scoring results according to your own situation.
# In our tests, hits with a score of 0.65 or higher matched the requirements fairly well.
```

Required packages

```
mysql_connector_repackaged
elasticsearch
Pillow
tensorflow
requests
pandas
Keras
numpy
```

Summary: From a user-experience perspective, speed and accuracy are what users perceive directly, and they determine whether a product feels "easy to use". The image search engine built in four simple steps with Alibaba Cloud Elasticsearch vector search (aliyun-knn) meets that bar, and its simple, one-stop setup is a further plus.
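The step 4 remark about dropping low-scoring hits can be sketched as follows. This is only an illustration: it assumes `res` has the standard Elasticsearch search response shape (`hits.hits[]` entries carrying `_score` and `_source`), the helper name `filter_hits` and the sample response are hypothetical, and 0.65 is simply the threshold the author found workable on their own data.

```python
MIN_SCORE = 0.65  # threshold reported by the author; tune it for your own data


def filter_hits(res, min_score=MIN_SCORE):
    """Keep only hits whose _score is at least min_score, best first."""
    hits = res.get("hits", {}).get("hits", [])
    kept = [h for h in hits if (h.get("_score") or 0.0) >= min_score]
    kept.sort(key=lambda h: h["_score"], reverse=True)
    return [
        {
            "relation_id": h["_source"].get("relation_id"),
            "image_path": h["_source"].get("image_path"),
            "score": h["_score"],
        }
        for h in kept
    ]


# Hand-written sample response, for illustration only (not real cluster output).
sample_res = {
    "hits": {
        "hits": [
            {"_score": 0.91, "_source": {"relation_id": "a1", "image_path": "a1.jpg"}},
            {"_score": 0.40, "_source": {"relation_id": "b2", "image_path": "b2.jpg"}},
            {"_score": 0.65, "_source": {"relation_id": "c3", "image_path": "c3.jpg"}},
        ]
    }
}
matches = filter_hits(sample_res)
print([m["relation_id"] for m in matches])  # → ['a1', 'c3']
```

In the search script this would be called as `filter_hits(res)` right after `es.search(...)`. Filtering on the client keeps the query body unchanged, so the threshold can be tuned without touching the DSL.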

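Related promotions

For more discounts, visit the Alibaba Cloud Elasticsearch website.

• Alibaba Cloud Elasticsearch Commercial General-Purpose Edition, 1 core 2 GB: first month free
• Alibaba Cloud Elasticsearch Log-Enhanced Edition: 40% off the first month, 40% off annual subscriptions
• Alibaba Cloud Logstash, 2 cores 4 GB: first month free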
