OpenPose Human Pose Estimation


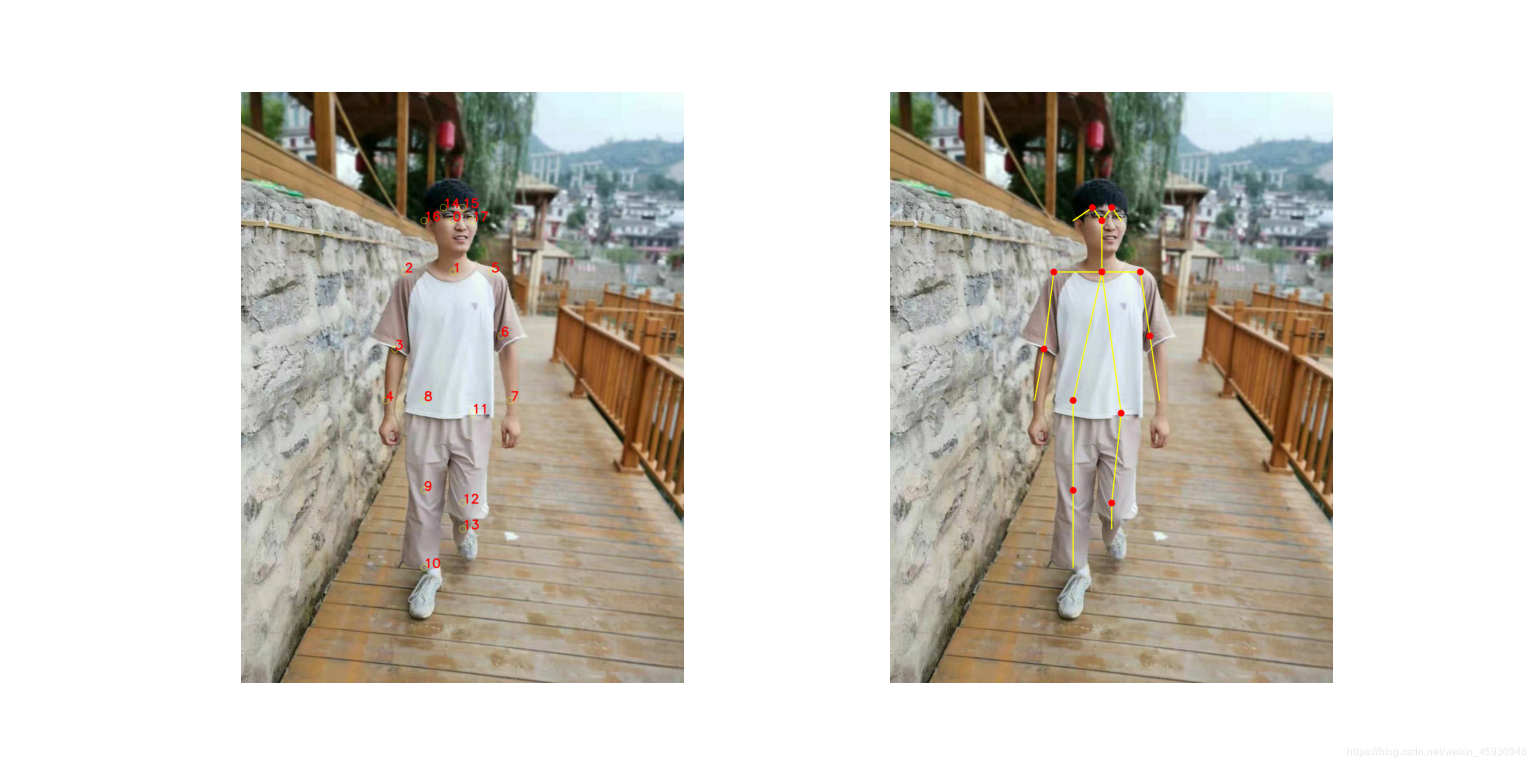

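Reference blogs: "OpenPose driver dangerous-driving detection (smoking and phone calls)"; "Human pose recognition model: OpenPose".

OpenPose is an open-source human pose estimation library developed by Carnegie Mellon University (CMU) in the United States. It is based on convolutional neural networks and supervised learning and is built on the Caffe framework. It can estimate body pose, facial expressions, finger movements and more, works for both single and multiple people, and is highly robust. It is the world's first real-time multi-person 2D pose estimation application based on deep learning.

Reference: Chinese documentation for OpenPose, the open-source human pose recognition project on GitHub. OpenPose GitHub repository: https://github.com/CMU-Perceptual-Computing-Lab/openpose

OpenPose provides hand, face, and body-pose models, as shown in the figure:

[Figure: OpenPose hand, face, and body-pose models]

Detection result:

[Figure: detection result]

The code is adapted below so that OpenCV captures frames from the webcam and performs human pose detection in real time:

```python
import cv2

# COCO body model files (paths relative to the OpenPose repository)
protoFile = './models/pose/coco/pose_deploy_linevec.prototxt'
weightsfile = './models/pose/coco/pose_iter_440000.caffemodel'
npoints = 18  # the COCO model outputs 18 body keypoints
# Keypoint index pairs that form the limbs of the skeleton (not drawn below)
POSE_PAIRS = [[1, 0], [1, 2], [1, 5], [2, 3], [3, 4], [5, 6], [6, 7], [1, 8], [8, 9], [9, 10],
              [1, 11], [11, 12], [12, 13], [0, 14], [0, 15], [14, 16], [15, 17]]

# Load the network once instead of reloading it on every frame
net = cv2.dnn.readNetFromCaffe(protoFile, weightsfile)


def detect(img):
    inHeight = img.shape[0]  # e.g. 1440 for the original test image
    inWidth = img.shape[1]   # e.g. 1080
    netInputsize = (368, 368)
    inpBlob = cv2.dnn.blobFromImage(img, 1.0 / 255, netInputsize, (0, 0, 0), swapRB=True, crop=False)
    net.setInput(inpBlob)
    output = net.forward()

    # Factors that map heatmap coordinates back to image pixels
    scaleX = float(inWidth) / output.shape[3]
    scaleY = float(inHeight) / output.shape[2]
    points = []
    threshold = 0.1
    for i in range(npoints):
        probMap = output[0, i, :, :]  # 46x46 confidence map for a 368x368 input
        minVal, prob, minLoc, point = cv2.minMaxLoc(probMap)
        x = scaleX * point[0]
        y = scaleY * point[1]
        if prob > threshold:
            points.append((int(x), int(y)))
        else:
            points.append(None)  # keypoint not detected

    for i, p in enumerate(points):  # enumerate pairs each point with its index i
        if p is None:
            continue  # cv2.circle/cv2.putText cannot draw at a missing point
        cv2.circle(img, p, 8, (255, 255, 0), thickness=1, lineType=cv2.FILLED)
        cv2.putText(img, '{}'.format(i), p, cv2.FONT_HERSHEY_SIMPLEX, 1, (255, 0, 0), 2, lineType=cv2.LINE_AA)
    return points


cap = cv2.VideoCapture(0)
while True:
    ret, frame = cap.read()
    if not ret:  # camera unavailable or stream ended
        break
    points = detect(frame)
    cv2.imshow('frame', frame)
    if cv2.waitKey(1) & 0xFF == ord('q'):  # press 'q' to quit
        break
cap.release()
cv2.destroyAllWindows()
```

Detection result:

[Figure: real-time detection result]

To detect whether a driver is engaging in dangerous behavior such as smoking or making a phone call, we can compute the distances between specific body keypoints. The COCO body model has no hand keypoints, so the wrists (keypoints 4 and 7) stand in for the hands.

- Smoking: distance from the right hand to the nose, or from the left hand to the nose.
- Phone call: distance from the right hand to the right ear, or from the left hand to the left ear.

Using the `points` list returned by `detect` for a frame:

```python
import math


def __distance(A, B):
    # Returns 0 when either keypoint is missing; treat 0 as "no measurement",
    # not as the hand touching the face
    if A is None or B is None:
        return 0
    return math.sqrt((A[0] - B[0])**2 + (A[1] - B[1])**2)


distance0 = __distance(points[0], points[4])   # right hand (wrist 4) to nose (0)
distance1 = __distance(points[0], points[7])   # left hand (wrist 7) to nose (0)
distance2 = __distance(points[4], points[16])  # right hand (wrist 4) to right ear (16)
distance3 = __distance(points[7], points[17])  # left hand (wrist 7) to left ear (17)
print('Right hand to nose:', distance0, 'Left hand to nose:', distance1)
print('Right hand to right ear:', distance2, 'Left hand to left ear:', distance3)
```

Calculation results:

```
Right hand to nose: 467.6964827748868 Left hand to nose: 460.135849505339
Right hand to right ear: 447.97321348491363 Left hand to left ear: 447.97321348491363
```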
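The keypoint loop in `detect` takes, for each confidence map, the location of the maximum and rescales it from the 46x46 heatmap grid to image pixels. The sketch below reproduces that step with NumPy instead of `cv2.minMaxLoc`, so it can be tried on a synthetic array without the Caffe model. The function name `decode_keypoints` is hypothetical and is not part of OpenCV or OpenPose.

```python
import numpy as np


def decode_keypoints(output, img_w, img_h, threshold=0.1):
    """Return one (x, y) pixel tuple or None per confidence map.

    output: array of shape (1, C, H, W), e.g. a slice of net.forward().
    """
    _, n_maps, out_h, out_w = output.shape
    # Same scale factors as scaleX / scaleY in detect()
    scale_x = img_w / out_w
    scale_y = img_h / out_h
    points = []
    for i in range(n_maps):
        heatmap = output[0, i]
        # Location of the highest confidence, as (row, col)
        row, col = np.unravel_index(np.argmax(heatmap), heatmap.shape)
        prob = heatmap[row, col]
        if prob > threshold:
            # Column is x, row is y, matching cv2.minMaxLoc's (x, y) point
            points.append((int(scale_x * col), int(scale_y * row)))
        else:
            points.append(None)  # keypoint not detected
    return points
```

With the real network, `decode_keypoints(output[:, :18], frame.shape[1], frame.shape[0])` would decode only the 18 body-keypoint maps; the COCO model's remaining output channels hold a background map and the part-affinity fields.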
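`POSE_PAIRS` is defined in the detection code but never used. A minimal sketch, assuming the same 18-keypoint COCO ordering, of how it could turn the detected keypoints into limb segments; the helper `limb_segments` and the constant name `COCO_POSE_PAIRS` are hypothetical. Pairs with a missing endpoint are skipped so nothing is drawn toward a `None` point.

```python
# Same index pairs as POSE_PAIRS in the detection code
COCO_POSE_PAIRS = [[1, 0], [1, 2], [1, 5], [2, 3], [3, 4], [5, 6], [6, 7], [1, 8], [8, 9], [9, 10],
                   [1, 11], [11, 12], [12, 13], [0, 14], [0, 15], [14, 16], [15, 17]]


def limb_segments(points, pairs=COCO_POSE_PAIRS):
    """Return (start, end) pixel pairs for limbs whose two keypoints were both detected."""
    segments = []
    for a, b in pairs:
        if points[a] is not None and points[b] is not None:
            segments.append((points[a], points[b]))
    return segments
```

Inside `detect`, each returned segment could then be drawn with `cv2.line(img, start, end, (0, 255, 255), 2)`.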

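The distances above are raw pixel values, and the article does not say how they become a smoking or phone-call decision. The sketch below shows one possible rule, which is an assumption and not the author's method: a wrist counts as "at the face" when its distance to the nose (smoking) or to the same-side ear (phone call) is below a fraction `ratio` of the shoulder width (keypoints 2 and 5). Normalizing by shoulder width keeps the threshold roughly independent of how far the driver sits from the camera. `flag_behaviors` and the default `ratio=0.5` are hypothetical and would need tuning on real footage; missing keypoints are treated as infinitely far so they never trigger a flag.

```python
import math

# COCO body keypoint indices used by the rule
NOSE, R_SHOULDER, R_WRIST, L_SHOULDER, L_WRIST, R_EAR, L_EAR = 0, 2, 4, 5, 7, 16, 17


def distance(a, b):
    """Euclidean distance; inf if either keypoint is missing, so it never counts as close."""
    if a is None or b is None:
        return math.inf
    return math.hypot(a[0] - b[0], a[1] - b[1])


def flag_behaviors(points, ratio=0.5):
    """Hypothetical rule: flag when a wrist is closer than ratio * shoulder width."""
    shoulder = distance(points[R_SHOULDER], points[L_SHOULDER])
    if math.isinf(shoulder):
        # Without both shoulders there is no scale reference, so flag nothing
        return {"smoking": False, "phone": False}
    limit = ratio * shoulder
    smoking = min(distance(points[R_WRIST], points[NOSE]),
                  distance(points[L_WRIST], points[NOSE])) < limit
    phone = min(distance(points[R_WRIST], points[R_EAR]),
                distance(points[L_WRIST], points[L_EAR])) < limit
    return {"smoking": smoking, "phone": phone}
```

Applied to the `points` list from `detect`, `flag_behaviors(points)` returns a dictionary of two booleans per frame.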