
Contents

I. Introduction
II. Gestures
1. Analysis
2. Demonstration
3. Complete code

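I. Introduction

To recognize gestures with OpenPose, you first need to find the skeleton keypoints. Only then can the positions of the hands and face be determined, and the hands and face themselves be recognized.

The previous article covered how to partition the skeleton keypoints and then locate the hand region of interest (ROI): "OpenPose Human Skeleton and Gestures: Static Image Annotation and Classification (with Source Code)".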

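This article covers the gesture features. In 2008, Luo Weiwei (洛维维), a special-education researcher at Beijing Normal University, carried out a statistical analysis of 《中国手语》 (Chinese Sign Language) and reduced the basic handshapes of more than 5,000 Chinese sign-language words to 61 (see the table later).

Approach: for each image, extract the handshape, that is, the fingertips and the centroid (here the wrist is used as the reference point rather than the true centroid), then compute the distances and angles. For each gesture, collect statistics over those distances and angles to obtain the numerical characteristics of their distributions.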
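The statistics step of the approach can be sketched as follows. This is a minimal illustration, not the author's implementation: the function name `feature_statistics` and the sample values are hypothetical, and it assumes each image of a gesture has already been reduced to a fixed-length vector of distances and angles.

```python
from statistics import mean, pstdev


def feature_statistics(samples):
    """Per-feature mean and population standard deviation for one gesture.

    samples: list of equal-length feature vectors (distances and angles),
    one vector per image of the same gesture.
    """
    if not samples:
        raise ValueError("need at least one sample")
    columns = list(zip(*samples))  # transpose: one tuple per feature
    means = [mean(col) for col in columns]
    stds = [pstdev(col) for col in columns]
    return means, stds


# Hypothetical data: two features (one distance, one angle) from three images
samples_a = [[100.0, 30.0], [110.0, 34.0], [90.0, 32.0]]
means, stds = feature_statistics(samples_a)
print(means)
print(stds)
```

A new image could then be compared against each gesture's mean vector, with the standard deviations indicating how much spread to tolerate per feature.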

Reference: 《基于计算机视觉的手势识别研究》 (Research on Gesture Recognition Based on Computer Vision)

II. Gestures

1. Analysis

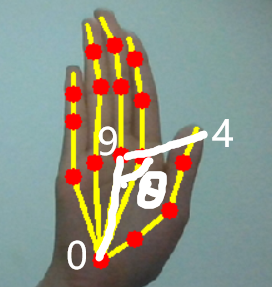

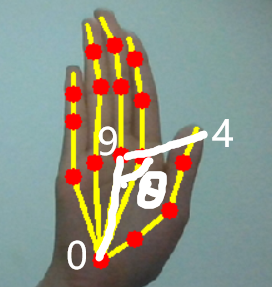

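The images above show part of the captured samples for the simple sign-language pinyin alphabet and the result of recognizing them with OpenPose [22 keypoints].

As you can see, the task mostly comes down to telling the fingers apart. As with the COCO skeleton model, we split the gesture features into distances and angles.

The distance from each fingertip to the wrist and the angle of each finger capture the key information of a gesture well. The convention used here is: [21 hand keypoints; the 22nd point is the background].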

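Of course, this scheme becomes distorted when the wrist keypoint (Wrist) is not detected. That special case is ignored for now; everything below assumes that point 0 exists.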
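To make the feature layout concrete, here is a small standalone sketch that builds the 10-value vector for one hand (5 fingertip-to-wrist distances, then 5 angles at point 9) and returns `None` when the wrist is missing instead of a distorted vector. The function names and the test keypoints are assumptions made for illustration; the angle uses the law of cosines, clamped to guard against rounding error.

```python
import math

FINGERTIPS = (4, 8, 12, 16, 20)  # thumb, index, middle, ring, little
WRIST, REFERENCE = 0, 9          # point 9 is the vertex used for the angles


def distance(a, b):
    """Euclidean distance between two (x, y) points."""
    return math.hypot(a[0] - b[0], a[1] - b[1])


def angle_at(a, b, c):
    """Angle ABC in degrees via the law of cosines; 0.0 if degenerate."""
    ab, bc, ac = distance(a, b), distance(b, c), distance(a, c)
    if ab == 0 or bc == 0:
        return 0.0
    cos_b = (ab ** 2 + bc ** 2 - ac ** 2) / (2 * ab * bc)
    return math.degrees(math.acos(max(-1.0, min(1.0, cos_b))))


def hand_features(points):
    """Return 5 distances followed by 5 angles, or None without a wrist."""
    wrist, ref = points[WRIST], points[REFERENCE]
    if wrist is None:
        return None  # no reference point, so report it explicitly
    dists, angles = [], []
    for i in FINGERTIPS:
        tip = points[i]
        dists.append(distance(wrist, tip) if tip is not None else 0.0)
        ok = ref is not None and tip is not None
        angles.append(angle_at(wrist, ref, tip) if ok else 0.0)
    return dists + angles


# Hypothetical keypoints: only the wrist, point 9, thumb tip and middle tip are set
points = [None] * 22
points[0], points[9] = (0, 0), (0, 10)
points[4], points[12] = (10, 10), (0, 20)
features = hand_features(points)
print(features)
```

Returning `None` lets the caller skip or flag the frame, which is one possible way to handle the missing-wrist case that the article sets aside.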

Key functions:

    def __distance(self, A, B):
        """Distance helper.

        :param A, B: two points A(x1, y1) and B(x2, y2)
        :return: Euclidean distance |AB|, or 0 if either point is missing
        """
        if A is None or B is None:
            return 0
        return math.sqrt((A[0] - B[0]) ** 2 + (A[1] - B[1]) ** 2)

    def __myAngle(self, A, B, C):
        """Angle helper.

        :param A, B, C: three points A(x1, y1), B(x2, y2), C(x3, y3)
        :return: angle at B in degrees (law of cosines), or 0 if undefined
        """
        if A is None or B is None or C is None:
            return 0
        a = self.__distance(B, C)
        b = self.__distance(A, C)
        c = self.__distance(A, B)
        if 2 * a * c != 0:
            # cos(B) = (a^2 + c^2 - b^2) / (2ac); clamp against rounding error
            cos_b = (a ** 2 + c ** 2 - b ** 2) / (2 * a * c)
            return math.degrees(math.acos(max(-1.0, min(1.0, cos_b))))
        return 0

    def handDistance(self, keyPoint):
        """Fingertip-to-wrist distances for one hand.

        :param keyPoint: hand keypoints
        :return: list of 5 distances
        """
        if keyPoint[0] is None:
            print("Wrist reference keypoint not detected")
        distance0 = self.__distance(keyPoint[0], keyPoint[4])   # thumb
        distance1 = self.__distance(keyPoint[0], keyPoint[8])   # index finger
        distance2 = self.__distance(keyPoint[0], keyPoint[12])  # middle finger
        distance3 = self.__distance(keyPoint[0], keyPoint[16])  # ring finger
        distance4 = self.__distance(keyPoint[0], keyPoint[20])  # little finger
        return [distance0, distance1, distance2, distance3, distance4]

    def handpointAngle(self, keyPoint):
        """Finger angles for one hand, measured at point 9 between the wrist and each fingertip.

        :param keyPoint: hand keypoints
        :return: list of 5 angles in degrees
        """
        angle0 = self.__myAngle(keyPoint[0], keyPoint[9], keyPoint[4])
        angle1 = self.__myAngle(keyPoint[0], keyPoint[9], keyPoint[8])
        angle2 = self.__myAngle(keyPoint[0], keyPoint[9], keyPoint[12])
        angle3 = self.__myAngle(keyPoint[0], keyPoint[9], keyPoint[16])
        angle4 = self.__myAngle(keyPoint[0], keyPoint[9], keyPoint[20])
        return [angle0, angle1, angle2, angle3, angle4]

    def getHandsInformation(self, rpoints, lpoints):
        """Collect the features of the right and left hands (5 distances and 5 angles each).

        :param rpoints, lpoints: right-hand and left-hand keypoints
        :return: feature list with 20 values in total
        """
        Information = []
        # Right hand
        Information.extend(self.handDistance(rpoints))    # distance features
        Information.extend(self.handpointAngle(rpoints))  # angle features
        # Left hand
        Information.extend(self.handDistance(lpoints))    # distance features
        Information.extend(self.handpointAngle(lpoints))  # angle features
        return Information

2. Demonstration

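[Flowchart. Nodes: image, skeleton detection, skeleton image, skeleton feature points, hand image, gesture detection, hand feature points, useful information. Edge labels: annotate, extract, locate, distance and angle computation.]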

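3. Complete code

The second half of the code extracts skin color and computes Fourier descriptors. It is not used here: it requires the signer to wear long sleeves and a background that is not close to skin color. Use it if your setup allows.

Reference image: (Guess what it means? The answer is 梨, "pear".)

Source code: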
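For readers curious about what the Fourier-descriptor part does, the sketch below shows the truncation idea on its own with NumPy: a contour is treated as a sequence of complex numbers x + iy, transformed with the FFT, and only the lowest-frequency coefficients are kept. The synthetic circular contour and the `keep` parameter name are assumptions for illustration; a real contour would come from the skin mask.

```python
import numpy as np


def truncate_descriptor(fourier_result, keep=32):
    """Keep the `keep` lowest-frequency coefficients of a contour's FFT."""
    shifted = np.fft.fftshift(fourier_result)  # move zero frequency to the centre
    center = len(shifted) // 2
    low, high = center - keep // 2, center + keep // 2
    return np.fft.ifftshift(shifted[low:high])  # restore standard FFT ordering


# Hypothetical contour: 256 points on a circle of radius 50 centred at (100, 100)
t = np.linspace(0, 2 * np.pi, 256, endpoint=False)
contour = (100 + 50 * np.cos(t)) + 1j * (100 + 50 * np.sin(t))
descriptor = truncate_descriptor(np.fft.fft(contour))
print(descriptor.shape)
```

For this circle, almost all of the energy sits in two coefficients: the zero-frequency term (the centre) and the first positive frequency (the radius), which is why a short descriptor can still describe a smooth outline.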

    #!/usr/bin/python3
    # -*- coding: utf-8 -*-
    from __future__ import division  # true division

    import cv2
    import os
    import time
    import math
    import pandas as pd
    import numpy as np
    import matplotlib.pyplot as plt

    plt.rcParams['font.sans-serif'] = ['SimHei']  # font that can render Chinese labels
    plt.rcParams['axes.unicode_minus'] = False    # render the minus sign correctly

    class general_pose_model(object):
        def __init__(self, modelpath):
            # Models in use
            # hand: 22 points (21 hand keypoints; the 22nd point is the background)
            # COCO: 18 points
            self.inWidth = 368
            self.inHeight = 368
            self.threshold = 0.1
            self.pose_net = self.general_coco_model(modelpath)
            self.hand_num_points = 22
            self.hand_point_pairs = [[0, 1], [1, 2], [2, 3], [3, 4],
                                     [0, 5], [5, 6], [6, 7], [7, 8],
                                     [0, 9], [9, 10], [10, 11], [11, 12],
                                     [0, 13], [13, 14], [14, 15], [15, 16],
                                     [0, 17], [17, 18], [18, 19], [19, 20]]
            self.hand_net = self.get_hand_model(modelpath)
            self.MIN_DESCRIPTOR = 32  # surprisingly enough, 2 descriptors are already enough

        """Extract the skeleton keypoints and visualize them"""

        def general_coco_model(self, modelpath):
            """COCO output format:
            nose-0, neck-1, right shoulder-2, right elbow-3, right wrist-4, left shoulder-5,
            left elbow-6, left wrist-7, right hip-8, right knee-9, right ankle-10,
            left hip-11, left knee-12, left ankle-13, right eye-14, left eye-15,
            right ear-16, left ear-17, background-18.
            """
            self.points_name = {
                "Nose": 0, "Neck": 1,
                "RShoulder": 2, "RElbow": 3, "RWrist": 4,
                "LShoulder": 5, "LElbow": 6, "LWrist": 7,
                "RHip": 8, "RKnee": 9, "RAnkle": 10,
                "LHip": 11, "LKnee": 12, "LAnkle": 13,
                "REye": 14, "LEye": 15,
                "REar": 16, "LEar": 17,
                "Background": 18}
            self.bone_num_points = 18
            self.bone_point_pairs = [[1, 0], [1, 2], [1, 5],
                                     [2, 3], [3, 4], [5, 6],
                                     [6, 7], [1, 8], [8, 9],
                                     [9, 10], [1, 11], [11, 12],
                                     [12, 13], [0, 14], [0, 15],
                                     [14, 16], [15, 17]]
            prototxt = os.path.join(modelpath, "pose/coco/pose_deploy_linevec.prototxt")
            caffemodel = os.path.join(modelpath, "pose/coco/pose_iter_440000.caffemodel")
            coco_model = cv2.dnn.readNetFromCaffe(prototxt, caffemodel)
            return coco_model

        def getBoneKeypoints(self, imgfile):
            """COCO body keypoint detection.

            :param imgfile: image path
            :return: list of keypoint coordinates (None where a point is not detected)
            """
            img_cv2 = cv2.imread(imgfile)
            img_height, img_width, _ = img_cv2.shape
            # Build the input blob from the image
            inpBlob = cv2.dnn.blobFromImage(img_cv2, 1.0 / 255, (self.inWidth, self.inHeight),
                                            (0, 0, 0), swapRB=False, crop=False)
            # Forward pass through the network
            self.pose_net.setInput(inpBlob)
            self.pose_net.setPreferableBackend(cv2.dnn.DNN_BACKEND_OPENCV)
            self.pose_net.setPreferableTarget(cv2.dnn.DNN_TARGET_OPENCL)
            output = self.pose_net.forward()
            H = output.shape[2]
            W = output.shape[3]
            print("Output shape:")
            print(output.shape)
            # vis heatmaps
            self.vis_bone_heatmaps(imgfile, output)
            points = []
            for idx in range(self.bone_num_points):
                probMap = output[0, idx, :, :]  # confidence map at network output resolution
                # Global maximum of the confidence map
                minVal, prob, minLoc, point = cv2.minMaxLoc(probMap)
                # Scale the point to fit on the original image
                x = (img_width * point[0]) / W
                y = (img_height * point[1]) / H
                if prob > self.threshold:
                    points.append((int(x), int(y)))
                else:
                    points.append(None)
            # print(points)
            return points

        def __distance(self, A, B):
            """Distance helper.

            :param A, B: two points A(x1, y1) and B(x2, y2)
            :return: Euclidean distance |AB|, or 0 if either point is missing
            """
            if A is None or B is None:
                return 0
            return math.sqrt((A[0] - B[0]) ** 2 + (A[1] - B[1]) ** 2)

        def __myAngle(self, A, B, C):
            """Angle helper.

            :param A, B, C: three points A(x1, y1), B(x2, y2), C(x3, y3)
            :return: angle at B in degrees (law of cosines), or 0 if undefined
            """
            if A is None or B is None or C is None:
                return 0
            a = self.__distance(B, C)
            b = self.__distance(A, C)
            c = self.__distance(A, B)
            if 2 * a * c != 0:
                # cos(B) = (a^2 + c^2 - b^2) / (2ac); clamp against rounding error
                cos_b = (a ** 2 + c ** 2 - b ** 2) / (2 * a * c)
                return math.degrees(math.acos(max(-1.0, min(1.0, cos_b))))
            return 0

        def bonepointDistance(self, keyPoint):
            """Skeleton distance features.

            :param keyPoint: COCO skeleton keypoints
            :return: list of 17 distances
            """
            distance0 = self.__distance(keyPoint[4], keyPoint[8])     # right wrist to right hip
            distance1 = self.__distance(keyPoint[7], keyPoint[11])    # left wrist to left hip
            distance2 = self.__distance(keyPoint[2], keyPoint[4])     # right shoulder to right wrist
            distance3 = self.__distance(keyPoint[5], keyPoint[7])     # left shoulder to left wrist
            distance4 = self.__distance(keyPoint[0], keyPoint[4])     # nose to right wrist
            distance5 = self.__distance(keyPoint[0], keyPoint[7])     # nose to left wrist
            distance6 = self.__distance(keyPoint[4], keyPoint[7])     # between the two wrists
            distance7 = self.__distance(keyPoint[4], keyPoint[16])    # right wrist to right ear
            distance8 = self.__distance(keyPoint[7], keyPoint[17])    # left wrist to left ear
            distance9 = self.__distance(keyPoint[4], keyPoint[14])    # right wrist to right eye
            distance10 = self.__distance(keyPoint[7], keyPoint[15])   # left wrist to left eye
            distance11 = self.__distance(keyPoint[4], keyPoint[1])    # right wrist to neck
            distance12 = self.__distance(keyPoint[7], keyPoint[1])    # left wrist to neck
            distance13 = self.__distance(keyPoint[4], keyPoint[5])    # right wrist to left shoulder
            distance14 = self.__distance(keyPoint[4], keyPoint[6])    # right wrist to left elbow
            distance15 = self.__distance(keyPoint[7], keyPoint[2])    # left wrist to right shoulder
            distance16 = self.__distance(keyPoint[7], keyPoint[3])    # left wrist to right elbow
            return [distance0, distance1, distance2, distance3, distance4, distance5, distance6, distance7, distance8,
                    distance9, distance10, distance11, distance12, distance13, distance14, distance15, distance16]

        def bonepointAngle(self, keyPoint):
            """Skeleton angle features.

            :param keyPoint: COCO skeleton keypoints
            :return: list of 8 angles in degrees
            """
            angle0 = self.__myAngle(keyPoint[2], keyPoint[3], keyPoint[4])  # right elbow angle
            angle1 = self.__myAngle(keyPoint[5], keyPoint[6], keyPoint[7])  # left elbow angle
            angle2 = self.__myAngle(keyPoint[3], keyPoint[2], keyPoint[1])  # right shoulder angle
            angle3 = self.__myAngle(keyPoint[6], keyPoint[5], keyPoint[1])  # left shoulder angle
            angle4 = self.__myAngle(keyPoint[4], keyPoint[0], keyPoint[7])  # wrist, nose, wrist
            if keyPoint[8] is None or keyPoint[11] is None:
                angle5 = 0
            else:
                # Midpoint between the two hips
                temp = ((keyPoint[8][0] + keyPoint[11][0]) / 2, (keyPoint[8][1] + keyPoint[11][1]) / 2)
                angle5 = self.__myAngle(keyPoint[4], temp, keyPoint[7])      # wrist, hip midpoint, wrist
            angle6 = self.__myAngle(keyPoint[4], keyPoint[1], keyPoint[8])   # right wrist, neck, right hip
            angle7 = self.__myAngle(keyPoint[7], keyPoint[1], keyPoint[11])  # left wrist, neck, left hip
            return [angle0, angle1, angle2, angle3, angle4, angle5, angle6, angle7]

        def getBoneInformation(self, bone_points):
            """Collect the 25 distance and angle features.

            :param bone_points: skeleton keypoints
            :return: list of 25 features (17 distances followed by 8 angles)
            """
            Information = []
            DistanceList = self.bonepointDistance(bone_points)  # distance features
            # print(DistanceList)
            AngleList = self.bonepointAngle(bone_points)        # angle features
            # print(AngleList)
            Information.extend(DistanceList)
            Information.extend(AngleList)
            return Information

        def vis_bone_pose(self, imgfile, points):
            """Show the image annotated with the skeleton keypoints.

            :param imgfile: image path
            :param points: keypoint coordinates detected by the COCO model
            Displays the skeleton line drawing and the keypoint image side by side.
            """
            img_cv2 = cv2.imread(imgfile)
            img_cv2_copy = np.copy(img_cv2)
            for idx in range(len(points)):
                if points[idx]:
                    cv2.circle(img_cv2_copy, points[idx], 5, (0, 255, 255), thickness=-1, lineType=cv2.FILLED)
                    cv2.putText(img_cv2_copy, "{}".format(idx), points[idx], cv2.FONT_HERSHEY_SIMPLEX, 1,
                                (0, 0, 255), 4, lineType=cv2.LINE_AA)
            h = int(self.__distance(points[4], points[3]))  # right forearm length (wrist to elbow)
            if points[4]:
                x_center = points[4][0]
                y_center = points[4][1]
                cv2.rectangle(img_cv2_copy, (x_center-h, y_center-h), (x_center+h, y_center+h), (255, 0, 0), 2)  # box
                cv2.circle(img_cv2_copy, (x_center, y_center), 1, (100, 100, 0), thickness=-1, lineType=cv2.FILLED)  # centre point
                cv2.putText(img_cv2_copy, "%d,%d" % (x_center, y_center), (x_center, y_center), cv2.FONT_HERSHEY_SIMPLEX,
                            0.6, (100, 100, 0), 2, lineType=cv2.LINE_AA)  # right wrist
            if points[7]:
                x_center = points[7][0]
                y_center = points[7][1]
                cv2.rectangle(img_cv2_copy, (x_center-h, y_center-h), (x_center+h, y_center+h), (255, 0, 0), 1)
                cv2.putText(img_cv2_copy, "%d,%d" % (x_center, y_center), (x_center, y_center), cv2.FONT_HERSHEY_SIMPLEX,
                            0.6, (100, 100, 0), 2, lineType=cv2.LINE_AA)  # left wrist
                cv2.circle(img_cv2_copy, (x_center-h, y_center-h), 3, (225, 225, 255), thickness=-1, lineType=cv2.FILLED)  # corner point
                cv2.putText(img_cv2_copy, "{}".format(x_center-h), (x_center-h, y_center-h), cv2.FONT_HERSHEY_SIMPLEX,
                            0.6, (100, 100, 0), 2, lineType=cv2.LINE_AA)
                cv2.circle(img_cv2_copy, (x_center+h, y_center+h), 3, (225, 225, 255), thickness=-1, lineType=cv2.FILLED)
                cv2.putText(img_cv2_copy, "{}".format(x_center+h), (x_center+h, y_center+h), cv2.FONT_HERSHEY_SIMPLEX,
                            0.6, (100, 100, 0), 2, lineType=cv2.LINE_AA)  # opposite corner point
            # Skeleton lines
            for pair in self.bone_point_pairs:
                partA = pair[0]
                partB = pair[1]
                if points[partA] and points[partB]:
                    cv2.line(img_cv2, points[partA], points[partB], (0, 255, 255), 3)
                    cv2.circle(img_cv2, points[partA], 4, (0, 0, 255), thickness=-1, lineType=cv2.FILLED)
            plt.figure(figsize=[10, 10])
            plt.subplot(1, 2, 1)
            plt.imshow(cv2.cvtColor(img_cv2, cv2.COLOR_BGR2RGB))
            plt.axis("off")
            plt.subplot(1, 2, 2)
            plt.imshow(cv2.cvtColor(img_cv2_copy, cv2.COLOR_BGR2RGB))
            plt.axis("off")
            plt.show()

        def vis_bone_heatmaps(self, imgfile, net_outputs):
            """Show the heatmaps of the skeleton keypoints.

            :param imgfile: image path
            :param net_outputs: network output
            """
            img_cv2 = cv2.imread(imgfile)
            plt.figure(figsize=[10, 10])
            for pdx in range(self.bone_num_points):
                probMap = net_outputs[0, pdx, :, :]  # heatmap of keypoint pdx
                probMap = cv2.resize(probMap, (img_cv2.shape[1], img_cv2.shape[0]))
                plt.subplot(5, 5, pdx+1)
                plt.imshow(cv2.cvtColor(img_cv2, cv2.COLOR_BGR2RGB))  # background
                plt.imshow(probMap, alpha=0.6)
                plt.colorbar()
                plt.axis("off")
            plt.show()

        """Extract the hand images (left and right hands located from the skeleton) and the handpose keypoints, and visualize them"""

        def get_hand_model(self, modelpath):
            prototxt = os.path.join(modelpath, "hand/pose_deploy.prototxt")
            caffemodel = os.path.join(modelpath, "hand/pose_iter_102000.caffemodel")
            hand_model = cv2.dnn.readNetFromCaffe(prototxt, caffemodel)
            return hand_model

        def getOneHandKeypoints(self, handimg):
            """Hand keypoint detection (single hand).

            :param handimg: hand image (BGR array)
            :return: list of keypoint coordinates for that hand
            """
            img_height, img_width, _ = handimg.shape
            aspect_ratio = img_width / img_height
            inWidth = int(((aspect_ratio * self.inHeight) * 8) // 8)
            inpBlob = cv2.dnn.blobFromImage(handimg, 1.0 / 255, (inWidth, self.inHeight), (0, 0, 0),
                                            swapRB=False, crop=False)
            self.hand_net.setInput(inpBlob)
            output = self.hand_net.forward()
            # vis heatmaps
            self.vis_hand_heatmaps(handimg, output)
            points = []
            for idx in range(self.hand_num_points):
                probMap = output[0, idx, :, :]  # confidence map
                probMap = cv2.resize(probMap, (img_width, img_height))
                # Find global maxima of the probMap.
                minVal, prob, minLoc, point = cv2.minMaxLoc(probMap)
                if prob > self.threshold:
                    points.append((int(point[0]), int(point[1])))
                else:
                    points.append(None)
            return points

        def getHandROI(self, imgfile, bonepoints):
            """Locate the regions of interest containing the two hands.

            :param imgfile: image path
            :param bonepoints: skeleton keypoints
            :return: right-hand image, left-hand image (the full image if a hand is not located)
            """
            img_cv2 = cv2.imread(imgfile)  # original image
            img_height, img_width, _ = img_cv2.shape
            rimg = img_cv2.copy()  # fallback copies
            limg = img_cv2.copy()
            # Crop a square centred on the right wrist, with half-side equal to the forearm length
            if bonepoints[4] and bonepoints[3]:  # right hand
                h = int(self.__distance(bonepoints[4], bonepoints[3]))  # forearm length
                x_center = bonepoints[4][0]
                y_center = bonepoints[4][1]
                x1 = x_center-h
                y1 = y_center-h
                x2 = x_center+h
                y2 = y_center+h
                print(x1, x2, x_center, y_center, y1, y2)
                # Clamp the box to the image (comparisons were garbled in the source; reconstructed)
                if x1 < 0:
                    x1 = 0
                if x2 > img_width:
                    x2 = img_width
                if y1 < 0:
                    y1 = 0
                if y2 > img_height:
                    y2 = img_height
                rimg = img_cv2[y1:y2, x1:x2]  # crop as [y1:y2, x1:x2]
            if bonepoints[7] and bonepoints[6]:  # left hand
                h = int(self.__distance(bonepoints[7], bonepoints[6]))  # forearm length
                x_center = bonepoints[7][0]
                y_center = bonepoints[7][1]
                x1 = x_center-h
                y1 = y_center-h
                x2 = x_center+h
                y2 = y_center+h
                print(x1, x2, x_center, y_center, y1, y2)
                # Clamp the box to the image (comparisons were garbled in the source; reconstructed)
                if x1 < 0:
                    x1 = 0
                if x2 > img_width:
                    x2 = img_width
                if y1 < 0:
                    y1 = 0
                if y2 > img_height:
                    y2 = img_height
                limg = img_cv2[y1:y2, x1:x2]  # crop as [y1:y2, x1:x2]
            plt.figure(figsize=[10, 10])
            plt.subplot(1, 2, 1)
            plt.imshow(cv2.cvtColor(rimg, cv2.COLOR_BGR2RGB))
            plt.axis("off")
            plt.subplot(1, 2, 2)
            plt.imshow(cv2.cvtColor(limg, cv2.COLOR_BGR2RGB))
            plt.axis("off")
            plt.show()
            return rimg, limg

        def getHandsKeypoints(self, rimg, limg):
            """Get the keypoints of each hand image.

            :param rimg, limg: right-hand and left-hand images
            :return: right-hand keypoints, left-hand keypoints
            """
            # Detect the keypoints of each hand
            rhandpoints = self.getOneHandKeypoints(rimg)
            lhandpoints = self.getOneHandKeypoints(limg)
            # Display
            self.vis_hand_pose(rimg, rhandpoints)
            self.vis_hand_pose(limg, lhandpoints)
            return rhandpoints, lhandpoints

        def handDistance(self, keyPoint):
            """Fingertip-to-wrist distances for one hand.

            :param keyPoint: hand keypoints
            :return: list of 5 distances
            """
            if keyPoint[0] is None:
                print("Wrist reference keypoint not detected")
            distance0 = self.__distance(keyPoint[0], keyPoint[4])   # thumb
            distance1 = self.__distance(keyPoint[0], keyPoint[8])   # index finger
            distance2 = self.__distance(keyPoint[0], keyPoint[12])  # middle finger
            distance3 = self.__distance(keyPoint[0], keyPoint[16])  # ring finger
            distance4 = self.__distance(keyPoint[0], keyPoint[20])  # little finger
            return [distance0, distance1, distance2, distance3, distance4]

        def handpointAngle(self, keyPoint):
            """Finger angles for one hand, measured at point 9 between the wrist and each fingertip.

            :param keyPoint: hand keypoints
            :return: list of 5 angles in degrees
            """
            angle0 = self.__myAngle(keyPoint[0], keyPoint[9], keyPoint[4])
            angle1 = self.__myAngle(keyPoint[0], keyPoint[9], keyPoint[8])
            angle2 = self.__myAngle(keyPoint[0], keyPoint[9], keyPoint[12])
            angle3 = self.__myAngle(keyPoint[0], keyPoint[9], keyPoint[16])
            angle4 = self.__myAngle(keyPoint[0], keyPoint[9], keyPoint[20])
            return [angle0, angle1, angle2, angle3, angle4]

        def getHandsInformation(self, rpoints, lpoints):
            """Collect the features of the right and left hands (5 distances and 5 angles each).

            :param rpoints, lpoints: right-hand and left-hand keypoints
            :return: feature list with 20 values in total
            """
            Information = []
            # Right hand
            Information.extend(self.handDistance(rpoints))    # distance features
            Information.extend(self.handpointAngle(rpoints))  # angle features
            # Left hand
            Information.extend(self.handDistance(lpoints))    # distance features
            Information.extend(self.handpointAngle(lpoints))  # angle features
            return Information

        def vis_hand_heatmaps(self, handimg, net_outputs):
            """Show the heatmaps of the hand keypoints (single hand).

            :param handimg: hand image
            :param net_outputs: network output
            """
            plt.figure(figsize=[10, 10])
            for pdx in range(self.hand_num_points):
                probMap = net_outputs[0, pdx, :, :]
                probMap = cv2.resize(probMap, (handimg.shape[1], handimg.shape[0]))
                plt.subplot(5, 5, pdx+1)
                plt.imshow(cv2.cvtColor(handimg, cv2.COLOR_BGR2RGB))
                plt.imshow(probMap, alpha=0.6)
                plt.colorbar()
                plt.axis("off")
            plt.show()

        def vis_hand_pose(self, handimg, points):
            """Show the image annotated with the hand keypoints (single hand).

            :param handimg: hand image
            :param points: keypoint coordinates detected for this hand
            Displays the keypoint line drawing and the keypoint image side by side.
            """
            img_cv2_copy = np.copy(handimg)
            for idx in range(len(points)):
                if points[idx]:
                    cv2.circle(img_cv2_copy, points[idx], 2, (0, 255, 255), thickness=-1, lineType=cv2.FILLED)
                    cv2.putText(img_cv2_copy, "{}".format(idx), points[idx], cv2.FONT_HERSHEY_SIMPLEX, 0.3,
                                (0, 0, 255), 1, lineType=cv2.LINE_AA)
            # Draw Skeleton
            for pair in self.hand_point_pairs:
                partA = pair[0]
                partB = pair[1]
                if points[partA] and points[partB]:
                    cv2.line(handimg, points[partA], points[partB], (0, 255, 255), 2)
                    cv2.circle(handimg, points[partA], 2, (0, 0, 255), thickness=-1, lineType=cv2.FILLED)
            plt.figure(figsize=[10, 10])
            plt.subplot(1, 2, 1)
            plt.imshow(cv2.cvtColor(handimg, cv2.COLOR_BGR2RGB))
            plt.axis("off")
            plt.subplot(1, 2, 2)
            plt.imshow(cv2.cvtColor(img_cv2_copy, cv2.COLOR_BGR2RGB))
            plt.axis("off")
            plt.show()

        """Extract the Fourier descriptors of the gesture"""

        def find_contours(self, Laplacian):
            """Get the connected regions.

            :param Laplacian: output of the Laplacian operator (spatial sharpening filter)
            :return: contours sorted by area, largest first
            """
            # binaryimg = cv2.Canny(res, 50, 200)  # binarize with Canny edge detection
            h = cv2.findContours(Laplacian, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_NONE)  # find contours
            # OpenCV 3.x returns (image, contours, hierarchy); other versions return (contours, hierarchy)
            contour = h[0] if len(h) == 2 else h[1]
            contour = sorted(contour, key=cv2.contourArea, reverse=True)  # sort contours by enclosed area
            return contour

        def skinMask(self, roi):
            """Skin segmentation: Cr channel of the YCrCb color space plus Otsu thresholding.

            :param roi: input image
            :return: image after skin-color filtering
            """
            YCrCb = cv2.cvtColor(roi, cv2.COLOR_BGR2YCR_CB)  # convert to YCrCb
            (y, cr, cb) = cv2.split(YCrCb)  # split into Y, Cr, Cb
            cr1 = cv2.GaussianBlur(cr, (5, 5), 0)
            _, skin = cv2.threshold(cr1, 0, 255, cv2.THRESH_BINARY + cv2.THRESH_OTSU)  # Otsu thresholding
            res = cv2.bitwise_and(roi, roi, mask=skin)
            plt.figure(figsize=(10, 10))
            plt.subplot(1, 2, 1)
            plt.imshow(cv2.cvtColor(roi, cv2.COLOR_BGR2RGB))
            plt.xlabel(u'Original image', fontsize=20)
            plt.subplot(1, 2, 2)
            plt.imshow(cv2.cvtColor(res, cv2.COLOR_BGR2RGB))
            plt.xlabel(u'After skin-color filtering', fontsize=20)
            plt.show()
            plt.figure(figsize=(10, 4))
            plt.subplot(1, 3, 1)
            hist1 = cv2.calcHist([roi], [0], None, [256], [0, 256])  # histogram via OpenCV
            plt.xlabel(u'OpenCV histogram', fontsize=20)
            plt.plot(hist1)
            plt.subplot(1, 3, 2)
            hist2 = np.bincount(roi.ravel(), minlength=256)  # histogram via NumPy
            hist2, bins = np.histogram(roi.ravel(), 256, [0, 256])  # ravel() flattens the array to 1-D
            plt.plot(hist2)
            plt.xlabel(u'NumPy histogram', fontsize=20)
            plt.subplot(1, 3, 3)
            plt.hist(roi.ravel(), 256, [0, 256])  # histogram via Matplotlib
            plt.xlabel(u'Matplotlib histogram', fontsize=20)
            plt.show()
            # gray= cv2.cvtColor(roi,cv2.IMREAD_GRAYSCALE)
            # equ = cv2.equalizeHist(gray)
            # cv2.imshow('equalization', np.hstack((roi, equ)))  # show side by side
            # cv2.waitKey(0)
            # Adaptive equalization, optional parameters
            # plt.figure()
            # clahe = cv2.createCLAHE(clipLimit=2.0, tileGridSize=(8, 8))
            # cl1 = clahe.apply(roi)
            # plt.show()
            return res

        def truncate_descriptor(self, fourier_result):
            """Truncate the Fourier descriptors.

            :param fourier_result: input Fourier descriptors
            :return: truncated Fourier descriptors
            """
            descriptors_in_use = np.fft.fftshift(fourier_result)
            # Keep the middle MIN_DESCRIPTOR descriptors
            center_index = int(len(descriptors_in_use) / 2)
            low, high = center_index - int(self.MIN_DESCRIPTOR / 2), center_index + int(self.MIN_DESCRIPTOR / 2)
            descriptors_in_use = descriptors_in_use[low:high]
            descriptors_in_use = np.fft.ifftshift(descriptors_in_use)
            return descriptors_in_use

        def fourierDesciptor(self, res):
            """Compute the Fourier descriptors.

            :param res: input image
            :return: image, descriptors
            """
            # Edge detection with the Laplacian operator (8-neighbourhood)
            gray = cv2.cvtColor(res, cv2.COLOR_BGR2GRAY)
            dst = cv2.Laplacian(gray, cv2.CV_16S, ksize=3)
            Laplacian = cv2.convertScaleAbs(dst)
            contour = self.find_contours(Laplacian)  # extract the contour point coordinates
            contour_array = contour[0][:, 0, :]  # keep only the contour with the largest area
            contours_complex = np.empty(contour_array.shape[:-1], dtype=complex)
            contours_complex.real = contour_array[:, 0]  # x coordinate as the real part
            contours_complex.imag = contour_array[:, 1]  # y coordinate as the imaginary part
            fourier_result = np.fft.fft(contours_complex)  # Fourier transform
            # fourier_result = np.fft.fftshift(fourier_result)
            descirptor_in_use = self.truncate_descriptor(fourier_result)  # truncate the Fourier descriptors
            img1 = res.copy()
            self.reconstruct(res, descirptor_in_use)  # plot the descriptor points
            self.draw_circle(img1, descirptor_in_use)  # draw the related locating frame
            return res, descirptor_in_use

        def reconstruct(self, img, descirptor_in_use):
            """Rebuild the contour image from the Fourier descriptors.

            :param img: input image
            :param descirptor_in_use: Fourier descriptors
            :return: redrawn image
            """
            contour_reconstruct = np.fft.ifft(descirptor_in_use)  # inverse Fourier transform
            contour_reconstruct = np.array([contour_reconstruct.real, contour_reconstruct.imag])
            contour_reconstruct = np.transpose(contour_reconstruct)  # transpose the matrix
            contour_reconstruct = np.expand_dims(contour_reconstruct, axis=1)  # add a dimension on axis=1
            if contour_reconstruct.min() ...  # the listing is cut off here in the source
