Geometric Vectors: An Analysis of the ScreenToViewportPoint / ScreenToWorldPoint Functions


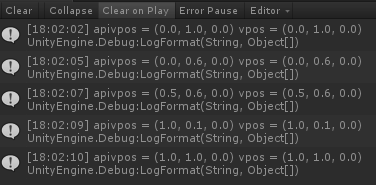

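The Camera class in a 3D engine comes with a set of geometric helper functions. Here we look at the meaning and implementation of two of the functions provided by Unity's Camera.

1. ScreenToViewportPoint

As the name suggests, this converts screen coordinates to viewport coordinates. In the rendering pipeline a vertex goes from model space to world space, view (camera) space and clip space, and then through the viewport transform to get its value in the screen coordinate system, so stepping back one stage from screen to viewport is easy to understand. The screen runs from (0, 0) at the bottom-left corner to (width, height) at the top-right corner (for a 1080p screen, width = 1920 and height = 1080), and this corresponds to viewport space running from (0, 0) at the bottom-left to (1, 1) at the top-right. Dividing the mouse position's x by width and its y by height therefore gives the viewport coordinates. The function is designed as follows:

```csharp
using UnityEngine;

public class StoV : MonoBehaviour
{
    void Update()
    {
        if (Input.GetMouseButtonDown(0))
        {
            // Compare Unity's built-in conversion with the hand-written one.
            Vector3 apiVPos = Camera.main.ScreenToViewportPoint(Input.mousePosition);
            Vector3 vPos = StoVPoint(Input.mousePosition);
            Debug.LogFormat("apivpos = {0} vpos = {1}", apiVPos, vPos);
        }
    }

    // Screen space (pixels) -> viewport space (0..1): divide by the screen size.
    // Input.mousePosition.z is 0, so z is simply set to 0 here.
    public Vector3 StoVPoint(Vector3 smousePos)
    {
        return new Vector3(smousePos.x / Screen.width, smousePos.y / Screen.height, 0);
    }
}
```

Test result: (screenshot not preserved)

2. ScreenToWorldPoint

Again as the name suggests, this converts screen coordinates to world coordinates, going from screen space to viewport space to world space. There is one important question I need to explain here: screen coordinates are clearly two-dimensional, so how can they be converted to three dimensions? If you have read my earlier posts on graphics principles, you will know that after the space transformations the z axis of 3D space ends up as the depth value. So a pixel at a given screen coordinate should be understood as also carrying depth information. We can picture it like this: going from world space to screen space, the z value, which lies between the camera's near and far clipping planes, is converted into a depth value in the normalized range 0 to 1 (not 0 to 255). ScreenToWorldPoint itself does not read the depth buffer: the z component of its input is the distance from the camera in world units, which is why the script below passes `depth` as z. A diagram of this relationship: (diagram not preserved)

The function is designed as follows:

```csharp
using UnityEngine;

public class StoW : MonoBehaviour
{
    // Distance from the camera, in world units, at which the clicked point is placed.
    [Range(1, 100)]
    [SerializeField]
    public float depth = 1;

    void Update()
    {
        DrawViewport();
        if (Input.GetMouseButtonDown(0))
        {
            Vector3 mousePos = new Vector3(Input.mousePosition.x, Input.mousePosition.y, depth);
            Vector3 apiwpos = Camera.main.ScreenToWorldPoint(mousePos);
            Vector3 wpos = StoWWorldPos(mousePos);
            Debug.LogFormat("mousePos = {0} apiwpos = {1} wpos = {2}", mousePos, apiwpos, wpos);
        }
    }

    // Draw the outline of the view frustum at the far clipping plane.
    private void DrawViewport()
    {
        float far = Camera.main.farClipPlane;
        float hfov = Camera.main.fieldOfView / 2;
        float hhei = Mathf.Tan(hfov * Mathf.Deg2Rad) * far;
        float hwid = hhei * Camera.main.aspect;
        Vector3 world00 = CameraLocalToWorldPos(new Vector3(-hwid, -hhei, far));
        Vector3 world01 = CameraLocalToWorldPos(new Vector3(-hwid, hhei, far));
        Vector3 world11 = CameraLocalToWorldPos(new Vector3(hwid, hhei, far));
        Vector3 world10 = CameraLocalToWorldPos(new Vector3(hwid, -hhei, far));
        Debug.DrawLine(world00, world01, Color.red);
        Debug.DrawLine(world01, world11, Color.red);
        Debug.DrawLine(world11, world10, Color.red);
        Debug.DrawLine(world10, world00, Color.red);
    }

    // Apply the main camera's local-to-world TRS transform.
    private Vector3 CameraLocalToWorldPos(Vector3 localpos)
    {
        return Camera.main.transform.TransformPoint(localpos);
    }

    // Convert the camera-local position to world space via the TRS transform.
    private Vector3 StoWWorldPos(Vector3 mousePos)
    {
        return CameraLocalToWorldPos(StoWLocalPos(mousePos));
    }

    // Compute the clicked point in camera-local coordinates.
    // Assumes a perspective camera (fieldOfView is not used by orthographic cameras).
    private Vector3 StoWLocalPos(Vector3 mousePos)
    {
        float dist = mousePos.z; // equals the depth field set in Update()
        float halffov = Camera.main.fieldOfView / 2;
        float halfhei = Mathf.Tan(halffov * Mathf.Deg2Rad) * dist;
        float halfwid = halfhei * Camera.main.aspect;
        // Use float halves: Screen.width / 2 is an integer division
        // and truncates when the size is odd.
        float halfScreenW = Screen.width * 0.5f;
        float halfScreenH = Screen.height * 0.5f;
        // Offset from the screen center, normalized to -1..1.
        float ratiox = (mousePos.x - halfScreenW) / halfScreenW;
        float ratioy = (mousePos.y - halfScreenH) / halfScreenH;
        float localx = halfwid * ratiox;
        float localy = halfhei * ratioy;
        return new Vector3(localx, localy, dist);
    }
}
```

Test result: (screenshot not preserved)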

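To explain once more: first, the camera's properties are used to compute the extent of a clipping plane in camera-local coordinates. The region I drew with red lines is this extent at the far plane, and the same computation at distance depth gives the region the click is mapped into. The screen-space pixel coordinate is then mapped into that region to get the local x and y, which together with the depth give the localXYZ coordinate. Finally, the TRS matrix transforms it into world space. Working through the implementation of these Camera functions here is also preparation for developing my own graphics library later on.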
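
The steps in this recap can also be written as a short derivation. This is only a sketch under the same assumptions as the script: a perspective camera with vertical field of view $\theta$ and aspect ratio $a$, a screen of $W \times H$ pixels, a click at $(s_x, s_y)$, and a distance $d$ from the camera. The symbols are introduced here for illustration and do not appear in the original code.

$$
\begin{aligned}
h &= d \tan\!\left(\tfrac{\theta}{2}\right), \qquad w = a\,h \\
r_x &= \frac{s_x - W/2}{W/2}, \qquad r_y = \frac{s_y - H/2}{H/2} \\
p_{\text{local}} &= \left(w\,r_x,\; h\,r_y,\; d\right) \\
p_{\text{world}} &= M_{\text{camera}}\; p_{\text{local}}
\end{aligned}
$$

Here $M_{\text{camera}}$ stands for the camera's local-to-world TRS transform, which is what `Transform.TransformPoint` applies. As a worked example with assumed values $\theta = 60^\circ$, $W \times H = 1920 \times 1080$ (so $a = 16/9$), $d = 10$ and a click at $(1440, 810)$: $h = 10 \tan 30^\circ \approx 5.774$, $w \approx 10.264$ and $r_x = r_y = 0.5$, so $p_{\text{local}} \approx (5.132,\ 2.887,\ 10)$. If the camera sits at the origin with no rotation and unit scale, $p_{\text{world}}$ equals $p_{\text{local}}$.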
