The Most Detailed OpenStack Installation Tutorial on the Web


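Preface: Anyone who has installed OpenStack knows how tedious the process is. Configuration files need constant editing, error messages pile up, and it is often unclear how to resolve them. This post records and shares how to install OpenStack and its components from start to finish.

Environment: This tutorial uses CentOS 8 and the OpenStack Victoria release. If you spot a mistake, please point it out. 😃😃

Contents

- The most detailed OpenStack installation tutorial on the web
- About the official OpenStack documentation
- Environment preparation
- Environment deployment
  - 1) Install CentOS 8 and set the IP address (all three nodes)
  - 2) Synchronize time with NTP (all three nodes)
  - 3) Install the OpenStack repository (all three nodes)
  - 4) Install the SQL database (control node)
  - 5) Install the message queue (control node)
  - 6) Install memcached (control node)
  - 7) Install etcd (control node)
- OpenStack deployment
  - Installing keystone
  - Installing glance
  - Installing placement
  - Installing nova
  - Installing neutron

About the official OpenStack documentation

The installation guide is the most important page of the official documentation. If your English is good, you can install OpenStack straight from it (the link is given below). If it is not and you still want to install OpenStack, read on.

The official documentation also covers installing other OpenStack releases. The figure shows the minimal deployment (which may not be enough in practice): solid lines mark required nodes, dashed lines mark optional ones.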

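This tutorial uses one control node and two compute nodes. The configuration of each virtual machine is as follows:

| Node | Memory | Disk | IP | System environment |
| --- | --- | --- | --- | --- |
| control | 8GB | 100G | 192.168.111.126 | centos8 + openstack Victoria |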

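1) Install CentOS 8 and set the IP address (all three nodes)

Add or modify the following settings, as shown in the figure: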

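Configure name resolution (all three nodes):

1. Set the control node's hostname to control and the compute nodes' hostnames to compute1 and compute2, then edit the hosts file:

    vim /etc/hosts

Add the following entries (remember to substitute your own IP addresses):

    192.168.111.126 control
    192.168.111.127 compute1
    192.168.111.128 compute2

2. After configuring, verify that name resolution works:

    ping www.baidu.com
    ping control
    ping compute1
    ping compute2

If every ping gets replies, the configuration succeeded.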
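Since the same three entries go onto every node, the edit can be scripted so that re-running it does not duplicate lines. This is a minimal sketch, not part of the original tutorial: `add_host_entry` and `HOSTS_FILE` are hypothetical names, and the demo writes to a scratch file instead of `/etc/hosts`.

```shell
#!/bin/sh
# Append "IP NAME" to a hosts file only if NAME is not already listed.
add_host_entry() {
    file=$1 ip=$2 name=$3
    # -w matches NAME as a whole word, so "compute1" does not match "compute10"
    if ! grep -qw -- "$name" "$file" 2>/dev/null; then
        printf '%s %s\n' "$ip" "$name" >> "$file"
    fi
}

# Demo against a scratch file. On a real node HOSTS_FILE would be /etc/hosts
# and the script would need root. Each node would also be named first, e.g.
#   hostnamectl set-hostname control
HOSTS_FILE=$(mktemp)
add_host_entry "$HOSTS_FILE" 192.168.111.126 control
add_host_entry "$HOSTS_FILE" 192.168.111.127 compute1
add_host_entry "$HOSTS_FILE" 192.168.111.128 compute2
add_host_entry "$HOSTS_FILE" 192.168.111.126 control   # second call is a no-op
cat "$HOSTS_FILE"
```

The whole-word match is a deliberate simplification: it only checks that the name appears somewhere in the file, not that it maps to the expected address.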

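2) Synchronize time with NTP (all three nodes)

1. On the control node:

1.1 Install the chrony package:

    yum install chrony

1.2 Edit chrony.conf:

    vim /etc/chrony.conf

Add the following line anywhere in the file, replacing NTP_SERVER with the hostname or IP address of the upstream NTP server you want to use:

    server NTP_SERVER iburst

Then find the "Allow NTP client access from local network" option and set it to your own subnet. Include the prefix length, because chrony treats a bare address as a single host:

    allow 192.168.111.0/24

As shown: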

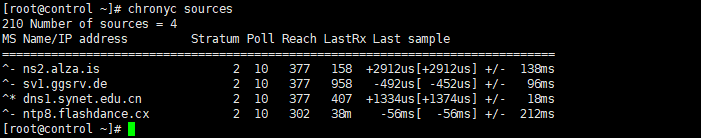

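1.3 Enable and start the NTP service:

    systemctl enable chronyd.service
    systemctl start chronyd.service

2. On the compute nodes:

2.1 Install the package:

    yum install chrony

2.2 Edit the configuration file:

    vim /etc/chrony.conf

Add the following line anywhere in the file so the node synchronizes with the control node:

    server control iburst

Comment out the default pool line. The original shows the Debian form below; on CentOS the default line typically names a centos pool instead:

    pool 2.debian.pool.ntp.org offline iburst

2.3 Enable and start the service:

    systemctl enable chronyd.service
    systemctl start chronyd.service

Verification: run the following command on each node. Output like the figures means time synchronization works.

    chronyc sources

On control:

On the other nodes: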

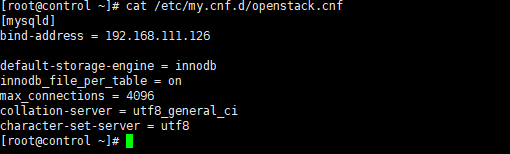
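In `chronyc sources` output, a line beginning with `^*` marks the server that chronyd is currently synchronized to, so the check above can be automated. The sketch below is an assumption-laden helper, not part of the original tutorial: `has_synced_source` is a hypothetical name and the sample line is illustrative, not captured from a real node.

```shell
#!/bin/sh
# Succeeds if the `chronyc sources` output on stdin contains a line starting
# with "^*", which marks the source chronyd is currently synchronised to.
has_synced_source() {
    grep -q '^\^\*'
}

# Illustrative sample only (not captured from a real node):
sample='^* control    3   6   377    24   +12us[ +30us] +/-   40ms'
if printf '%s\n' "$sample" | has_synced_source; then
    echo "time source selected"
else
    echo "no source selected yet"
fi
# On a real node: chronyc sources | has_synced_source
```

Right after starting chronyd the marker may still be `^?` for a short while, so a failed check is worth repeating before troubleshooting.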

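3) Install the OpenStack repository (all three nodes)

1. Install the OpenStack repository. CentOS 8 also needs the PowerTools repository enabled (on CentOS 8.3 and later the repository id is lowercase, powertools):

    yum install centos-release-openstack-victoria
    yum config-manager --set-enabled PowerTools

2. After the repository is installed:

2.1 Upgrade the packages:

    yum upgrade

2.2 Install the client.

CentOS 7:

    yum install python-openstackclient

CentOS 8:

    yum install python3-openstackclient

2.3 Install the OpenStack SELinux policies:

    yum install openstack-selinux

4) Install the SQL database (control node)

1. Install the packages (the original lists python2-PyMySQL; on CentOS 8 the package is typically named python3-PyMySQL):

    yum install mariadb mariadb-server python2-PyMySQL

2. Create and edit /etc/my.cnf.d/openstack.cnf, as shown:

    vim /etc/my.cnf.d/openstack.cnf

Add the following. The original copies bind-address = 10.0.0.11 from the official guide; it should be the control node's own IP, which is 192.168.111.126 in this environment:

    [mysqld]
    bind-address = 192.168.111.126
    default-storage-engine = innodb
    innodb_file_per_table = on
    max_connections = 4096
    collation-server = utf8_general_ci
    character-set-server = utf8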

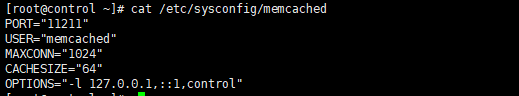
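The only value in openstack.cnf that differs between environments is `bind-address`, which should be the control node's own IP (192.168.111.126 in this tutorial's layout). A small sketch that renders the file for a given address; `render_openstack_cnf` is a hypothetical helper name, and the demo only prints the result instead of writing to `/etc/my.cnf.d/`.

```shell
#!/bin/sh
# Print the openstack.cnf contents for a given bind address.
render_openstack_cnf() {
    bind_ip=$1
    cat <<EOF
[mysqld]
bind-address = $bind_ip
default-storage-engine = innodb
innodb_file_per_table = on
max_connections = 4096
collation-server = utf8_general_ci
character-set-server = utf8
EOF
}

# Preview for the control node used in this tutorial. To apply it, redirect
# the output to /etc/my.cnf.d/openstack.cnf as root.
render_openstack_cnf 192.168.111.126
```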

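3. Finish the installation. Enable and start the service:

    systemctl enable mariadb.service
    systemctl start mariadb.service

Set the root password:

    mysql_secure_installation

5) Install the message queue (control node)

Install the package (the EPEL repository must be configured first, otherwise the install fails):

    yum install rabbitmq-server

Start the message queue service and configure it to start at boot:

    systemctl enable rabbitmq-server.service
    systemctl start rabbitmq-server.service

Add the openstack user. Here abcdefg is the password; the nova and neutron configuration later in this tutorial uses infocore in transport_url, so whichever password you set here must match the one used there:

    rabbitmqctl add_user openstack abcdefg

Grant the openstack user configure, write, and read permissions:

    rabbitmqctl set_permissions openstack ".*" ".*" ".*"

6) Install memcached (control node)

1. Install the packages.

CentOS 7:

    yum install memcached python-memcached

CentOS 8:

    yum install memcached python3-memcached

2. Edit the configuration file, as shown:

    vim /etc/sysconfig/memcached

Change the OPTIONS line to:

    OPTIONS="-l 127.0.0.1,::1,control"

3. Start the Memcached service and configure it to start at boot:

    systemctl enable memcached.service
    systemctl start memcached.service

7) Install etcd (control node)

1. Install the package:

    yum install etcd

2. Edit the configuration file so that it matches the figure:

    vim /etc/etcd/etcd.conf

3. Start the service:

    systemctl enable etcd
    systemctl start etcd

With this step done, congratulations: the environment is ready, and you can now install the OpenStack components!

OpenStack deployment

## Installing keystone

1. First create a database. Connect as root:

    mysql -u root -p

2. Once connected, create the keystone database, as shown:

    MariaDB [(none)]> CREATE DATABASE keystone;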

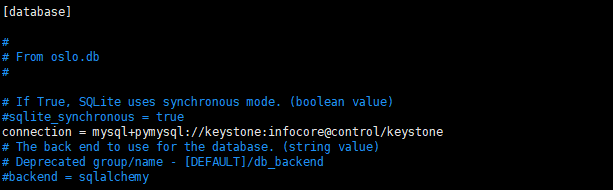

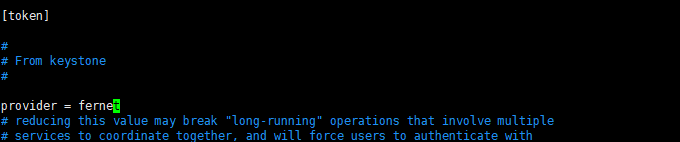

3. Grant access to the database (replace YOUR_PASSWORD with your own password):

    MariaDB [(none)]> GRANT ALL PRIVILEGES ON keystone.* TO 'keystone'@'localhost' IDENTIFIED BY 'YOUR_PASSWORD';
    MariaDB [(none)]> GRANT ALL PRIVILEGES ON keystone.* TO 'keystone'@'%' IDENTIFIED BY 'YOUR_PASSWORD';

4. Exit the database client and install the keystone packages:

    yum install openstack-keystone httpd python3-mod_wsgi

5. Edit the configuration file, as shown:

    vim /etc/keystone/keystone.conf

In the [database] section, add:

    connection = mysql+pymysql://keystone:YOUR_PASSWORD@control/keystone

In the [token] section, add:

    provider = fernet
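One pitfall with the `connection` line: it is parsed as a URL, so a password containing characters such as `@`, `:` or `/` needs to be percent-encoded before it is pasted in. The sketch below is an illustration under that assumption; `urlencode` is a hypothetical helper that handles ASCII input only, and the password shown is made up.

```shell
#!/bin/sh
# Percent-encode every character that is not an RFC 3986 unreserved
# character. ASCII input only.
urlencode() {
    s=$1
    out=""
    while [ -n "$s" ]; do
        c=${s%"${s#?}"}   # first character of s
        s=${s#?}          # remainder of s
        case $c in
            [A-Za-z0-9._~-]) out="$out$c" ;;
            *) out="$out$(printf '%%%02X' "'$c")" ;;
        esac
    done
    printf '%s\n' "$out"
}

# Made-up password, for illustration only.
pass='p@ss:w/rd'
echo "connection = mysql+pymysql://keystone:$(urlencode "$pass")@control/keystone"
```

Passwords made only of letters and digits pass through unchanged, which is the simplest way to sidestep the issue altogether.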

6. Save and close the file, then populate the database:

    su -s /bin/sh -c "keystone-manage db_sync" keystone

7. Initialize the Fernet key repositories:

    keystone-manage fernet_setup --keystone-user keystone --keystone-group keystone
    keystone-manage credential_setup --keystone-user keystone --keystone-group keystone

8. Bootstrap the Identity service (replace YOUR_PASSWORD with your own admin password):

    keystone-manage bootstrap --bootstrap-password YOUR_PASSWORD \
      --bootstrap-admin-url http://control:5000/v3/ \
      --bootstrap-internal-url http://control:5000/v3/ \
      --bootstrap-public-url http://control:5000/v3/ \
      --bootstrap-region-id RegionOne

9. Configure the HTTP server, as shown:

    vim /etc/httpd/conf/httpd.conf

At the position shown in the figure below, add:

    ServerName control

Then run this command:

    ln -s /usr/share/keystone/wsgi-keystone.conf /etc/httpd/conf.d/

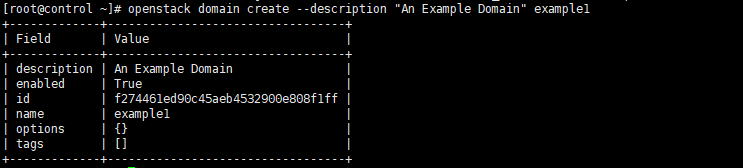

10. Enable and start the HTTP service:

    systemctl enable httpd.service
    systemctl start httpd.service

11. Set temporary environment variables (they exist only in the current shell session and are gone once you log out or reboot!):

    export OS_USERNAME=admin
    export OS_PASSWORD=YOUR_PASSWORD
    export OS_PROJECT_NAME=admin
    export OS_USER_DOMAIN_NAME=Default
    export OS_PROJECT_DOMAIN_NAME=Default
    export OS_AUTH_URL=http://control:5000/v3
    export OS_IDENTITY_API_VERSION=3

Installation complete!

Verification:

1. Create a new domain, as shown:

    openstack domain create --description "An Example Domain" example

2. Create a service project, as shown:

    openstack project create --domain default \
      --description "Service Project" service

3. Create a project and a user, as shown. First create the myproject project:

    openstack project create --domain default \
      --description "Demo Project" myproject

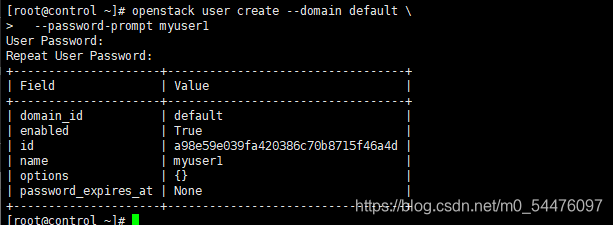

Create the myuser user:

    openstack user create --domain default \
      --password-prompt myuser

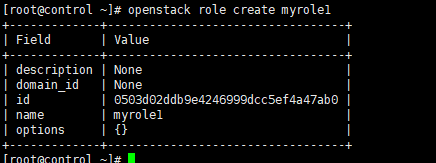

Create the myrole role:

    openstack role create myrole

Add the myrole role to the myproject project and the myuser user:

    openstack role add --project myproject --user myuser myrole

4. Verify. Unset the temporary OS_AUTH_URL and OS_PASSWORD environment variables:

    unset OS_AUTH_URL OS_PASSWORD

As the admin user, request an authentication token, as shown:

    openstack --os-auth-url http://control:5000/v3 \
      --os-project-domain-name Default --os-user-domain-name Default \
      --os-project-name admin --os-username admin token issue

As the myuser user created in the previous step, request an authentication token:

    openstack --os-auth-url http://control:5000/v3 \
      --os-project-domain-name Default --os-user-domain-name Default \
      --os-project-name myproject --os-username myuser token issue

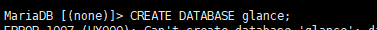

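If you get the result in the figure above, keystone is installed successfully!

## Installing glance

1. First create a database. Connect as root:

    mysql -u root -p

2. Once connected, create the glance database, as shown:

    MariaDB [(none)]> CREATE DATABASE glance;

3. Grant access to the database (the password must be quoted):

    MariaDB [(none)]> GRANT ALL PRIVILEGES ON glance.* TO 'glance'@'localhost' IDENTIFIED BY 'YOUR_PASSWORD';
    MariaDB [(none)]> GRANT ALL PRIVILEGES ON glance.* TO 'glance'@'%' IDENTIFIED BY 'YOUR_PASSWORD';

4. Create the glance user, as shown:

    openstack user create --domain default --password-prompt glance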

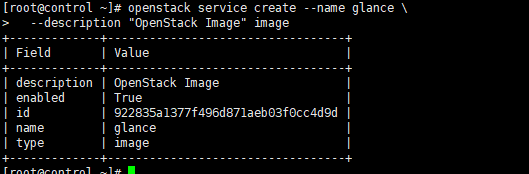

5. Add the admin role to the glance user and the service project:

    openstack role add --project service --user glance admin

6. Create the glance service entity, as shown:

    openstack service create --name glance \
      --description "OpenStack Image" image

7. Create the Image service API endpoints:

    openstack endpoint create --region RegionOne \
      image public http://control:9292
    openstack endpoint create --region RegionOne \
      image internal http://control:9292
    openstack endpoint create --region RegionOne \
      image admin http://control:9292

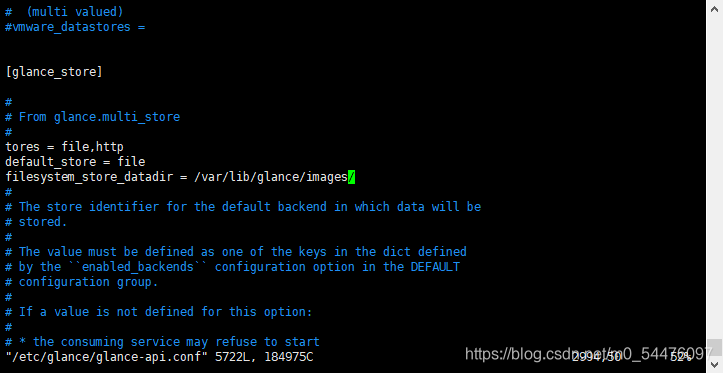
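Every service in this tutorial registers the same three endpoints (public, internal, admin) with only the service type and URL changing. The sketch below prints those commands rather than running them, so they can be reviewed first. `endpoint_cmds` is a hypothetical helper name, not an OpenStack command.

```shell
#!/bin/sh
# Print the three `openstack endpoint create` commands for one service.
# Nothing is executed; pipe the output to `sh` once it looks right.
endpoint_cmds() {
    service=$1 url=$2
    for iface in public internal admin; do
        printf 'openstack endpoint create --region RegionOne %s %s %s\n' \
            "$service" "$iface" "$url"
    done
}

# The Image service endpoints from the step above.
endpoint_cmds image http://control:9292
```

The same call with `placement http://control:8778`, `compute http://control:8774/v2.1` or `network http://control:9696` would cover the later services.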

8. Install the package:

    yum install openstack-glance

9. Edit the configuration file:

    vim /etc/glance/glance-api.conf

9.1 Database access:

    [database]
    connection = mysql+pymysql://glance:YOUR_PASSWORD@control/glance

9.2 Identity service access:

    [keystone_authtoken]
    www_authenticate_uri = http://control:5000
    auth_url = http://control:5000
    memcached_servers = control:11211
    auth_type = password
    project_domain_name = Default
    user_domain_name = Default
    project_name = service
    username = glance
    password = YOUR_PASSWORD

9.3 Deployment flavor:

    [paste_deploy]
    flavor = keystone

9.4 Local file system store and image location:

    [glance_store]
    stores = file,http
    default_store = file
    filesystem_store_datadir = /var/lib/glance/images/
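A frequent cause of errors in the following steps is an option that ended up under the wrong section header. Before syncing the database it can help to read values back from the file. This is a rough sketch using awk, assuming simple `key = value` lines; `ini_get` is a hypothetical helper and GLANCE_DBPASS is a placeholder, not a real password.

```shell
#!/bin/sh
# Print the value of KEY inside [SECTION] of an INI-style file.
# usage: ini_get FILE SECTION KEY
ini_get() {
    awk -v section="$2" -v key="$3" '
        /^[ \t]*\[/ {                       # section header such as [database]
            gsub(/^[ \t]*\[|\][ \t]*$/, "")
            current = $0
            next
        }
        current == section {
            eq = index($0, "=")
            if (eq == 0) next
            k = substr($0, 1, eq - 1)
            v = substr($0, eq + 1)
            gsub(/^[ \t]+|[ \t]+$/, "", k)  # trim whitespace around key
            gsub(/^[ \t]+|[ \t]+$/, "", v)  # trim whitespace around value
            if (k == key) { print v; exit }
        }
    ' "$1"
}

# Demo on a scratch file that mimics part of glance-api.conf.
conf=$(mktemp)
cat > "$conf" <<'EOF'
[database]
connection = mysql+pymysql://glance:GLANCE_DBPASS@control/glance

[keystone_authtoken]
username = glance
project_name = service
EOF
ini_get "$conf" keystone_authtoken username
ini_get "$conf" database connection
```

On the control node the equivalent check would be something like `ini_get /etc/glance/glance-api.conf paste_deploy flavor`; an empty result means the option is missing from that section.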

10. Populate the database:

    su -s /bin/sh -c "glance-manage db_sync" glance

11. Enable and start the service:

    systemctl enable openstack-glance-api.service
    systemctl start openstack-glance-api.service

Installation complete!

Verification:

Download the source image:

    wget http://download.cirros-cloud.net/0.4.0/cirros-0.4.0-x86_64-disk.img

Upload it to the Image service:

    glance image-create --name "cirros" \
      --file cirros-0.4.0-x86_64-disk.img \
      --disk-format qcow2 --container-format bare \
      --visibility=public

Confirm the upload and check the image attributes:

    glance image-list

Upload complete!

## Installing placement

1. Preparation

Connect to the database as root:

    mysql -u root -p

Create the placement database:

    MariaDB [(none)]> CREATE DATABASE placement;

Grant access to the database:

    MariaDB [(none)]> GRANT ALL PRIVILEGES ON placement.* TO 'placement'@'localhost' IDENTIFIED BY 'YOUR_PASSWORD';
    MariaDB [(none)]> GRANT ALL PRIVILEGES ON placement.* TO 'placement'@'%' IDENTIFIED BY 'YOUR_PASSWORD';
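The same create-and-grant sequence is repeated for keystone, glance, placement, nova and neutron, so it can be generated instead of retyped. A minimal sketch under a few assumptions: `service_db_sql` is a hypothetical helper, PLACEMENT_DBPASS is a placeholder, `IF NOT EXISTS` is added so the statement can be re-run, and the password must not contain a single quote.

```shell
#!/bin/sh
# Print the SQL that creates a service database and grants a user full
# access to it from localhost and from any host.
service_db_sql() {
    db=$1 user=$2 pass=$3
    printf 'CREATE DATABASE IF NOT EXISTS %s;\n' "$db"
    for host in localhost %; do
        printf "GRANT ALL PRIVILEGES ON %s.* TO '%s'@'%s' IDENTIFIED BY '%s';\n" \
            "$db" "$user" "$host" "$pass"
    done
}

# Preview the statements for the placement database.
service_db_sql placement placement PLACEMENT_DBPASS
# To apply on the control node: service_db_sql ... | mysql -u root -p
```

For nova, the helper would be called three times with the same user, once each for `nova_api`, `nova` and `nova_cell0`.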

Preparation is complete!

2. Configure the user and endpoints

Create the placement user:

    openstack user create --domain default --password-prompt placement

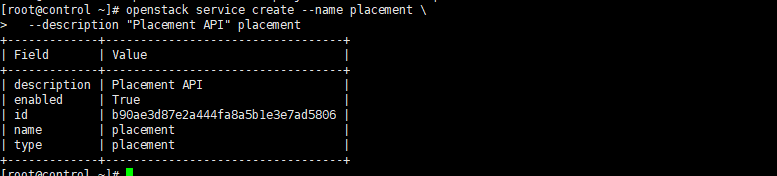

Add the placement user to the service project with the admin role:

    openstack role add --project service --user placement admin

Create the Placement API service entry:

    openstack service create --name placement \
      --description "Placement API" placement

Create the Placement API endpoints:

    openstack endpoint create --region RegionOne \
      placement public http://control:8778
    openstack endpoint create --region RegionOne \
      placement internal http://control:8778
    openstack endpoint create --region RegionOne \
      placement admin http://control:8778

3. Install the package:

    yum install openstack-placement-api

4. Edit the configuration file:

    vim /etc/placement/placement.conf

    [placement_database]
    connection = mysql+pymysql://placement:YOUR_PASSWORD@control/placement

    [api]
    auth_strategy = keystone

    [keystone_authtoken]
    auth_url = http://control:5000/v3
    memcached_servers = control:11211
    auth_type = password
    project_domain_name = Default
    user_domain_name = Default
    project_name = service
    username = placement
    password = YOUR_PASSWORD

5. Populate the database:

    su -s /bin/sh -c "placement-manage db sync" placement

6. Restart the httpd service:

    systemctl restart httpd

Verification:

1. Check that the status is healthy:

    placement-status upgrade check

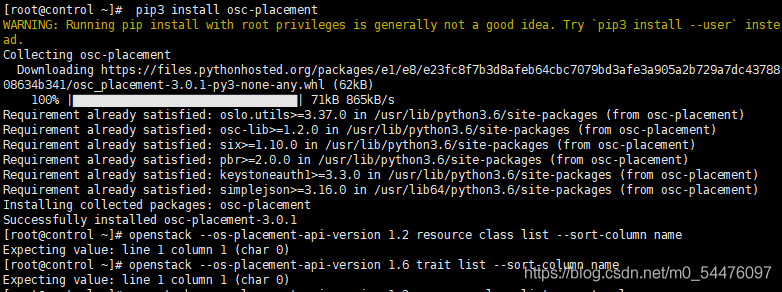

2. Run the following commands. If you get results like the figure, placement is installed successfully:

    pip3 install osc-placement
    openstack --os-placement-api-version 1.2 resource class list --sort-column name
    openstack --os-placement-api-version 1.6 trait list --sort-column name

## Installing nova

nova is the most important part of OpenStack, so how do you install it? Don't worry, I'll walk you through it.

On the control node:

1. Preparation

Connect to the database:

    mysql -u root -p

Create the nova_api, nova, and nova_cell0 databases:

    MariaDB [(none)]> CREATE DATABASE nova_api;
    MariaDB [(none)]> CREATE DATABASE nova;
    MariaDB [(none)]> CREATE DATABASE nova_cell0;

Grant access to the databases (infocore is my password; use your own):

    MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova_api.* TO 'nova'@'localhost' IDENTIFIED BY 'infocore';
    MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova_api.* TO 'nova'@'%' IDENTIFIED BY 'infocore';
    MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'localhost' IDENTIFIED BY 'infocore';
    MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova.* TO 'nova'@'%' IDENTIFIED BY 'infocore';
    MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova_cell0.* TO 'nova'@'localhost' IDENTIFIED BY 'infocore';
    MariaDB [(none)]> GRANT ALL PRIVILEGES ON nova_cell0.* TO 'nova'@'%' IDENTIFIED BY 'infocore';

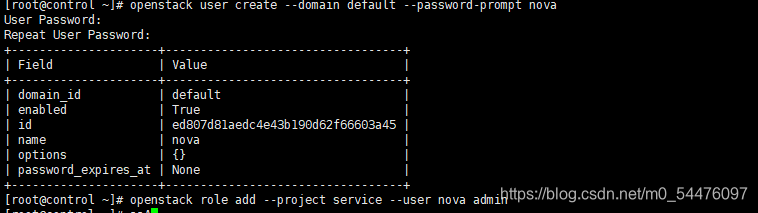

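Exit the database client.

Source the admin credentials script (this can be skipped if the admin environment variables are still set in your shell):

    . admin-openrc

2. Create the nova credentials

Create the nova user and give it the admin role in the service project:

    openstack user create --domain default --password-prompt nova
    openstack role add --project service --user nova admin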

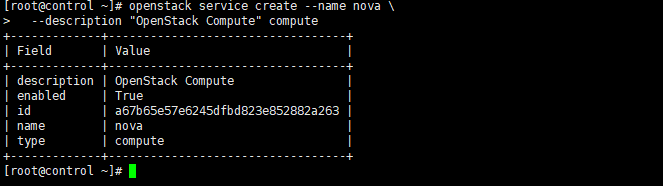
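The `admin-openrc` script sourced in this step is not created anywhere in the tutorial. A plausible version simply holds the same variables that were exported by hand in the keystone section. The sketch below writes such a file; `write_admin_openrc` is a hypothetical helper, ADMIN_PASS is a placeholder for the password given to `keystone-manage bootstrap`, and the password must not contain a single quote.

```shell
#!/bin/sh
# Write an openrc file holding the admin credentials exported earlier.
write_admin_openrc() {
    target=$1 password=$2
    cat > "$target" <<EOF
export OS_USERNAME=admin
export OS_PASSWORD='$password'
export OS_PROJECT_NAME=admin
export OS_USER_DOMAIN_NAME=Default
export OS_PROJECT_DOMAIN_NAME=Default
export OS_AUTH_URL=http://control:5000/v3
export OS_IDENTITY_API_VERSION=3
EOF
    chmod 600 "$target"   # the file contains a password, keep it private
}

# Demo with a scratch file; on the control node the target would be
# something like ~/admin-openrc.
openrc=$(mktemp)
write_admin_openrc "$openrc" ADMIN_PASS
. "$openrc"
echo "$OS_AUTH_URL"
```

Keeping the credentials in a file means they survive a reboot, unlike the temporary `export` lines used earlier.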

Create the nova service entity:

    openstack service create --name nova \
      --description "OpenStack Compute" compute

Create the Compute API service endpoints:

    openstack endpoint create --region RegionOne \
      compute public http://control:8774/v2.1
    openstack endpoint create --region RegionOne \
      compute internal http://control:8774/v2.1
    openstack endpoint create --region RegionOne \
      compute admin http://control:8774/v2.1

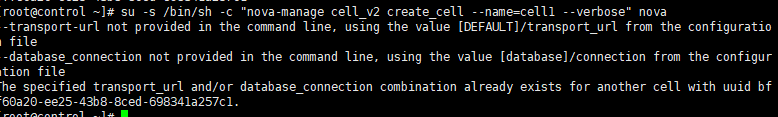

3. Install the packages:

    yum install openstack-nova-api openstack-nova-conductor \
      openstack-nova-novncproxy openstack-nova-scheduler

4. Edit the configuration file:

    vim /etc/nova/nova.conf

4.1 Enable the compute and metadata APIs:

    [DEFAULT]
    enabled_apis = osapi_compute,metadata

4.2 Database access (infocore here is the nova database password):

    [api_database]
    connection = mysql+pymysql://nova:infocore@control/nova_api

    [database]
    connection = mysql+pymysql://nova:infocore@control/nova

4.3 Message queue access (infocore here is the RabbitMQ password of the openstack user):

    [DEFAULT]
    transport_url = rabbit://openstack:infocore@control:5672/

4.4 Identity service access (infocore here is the password of the nova user):

    [api]
    auth_strategy = keystone

    [keystone_authtoken]
    www_authenticate_uri = http://control:5000/
    auth_url = http://control:5000/
    memcached_servers = control:11211
    auth_type = password
    project_domain_name = Default
    user_domain_name = Default
    project_name = service
    username = nova
    password = infocore

4.5 The IP address of the control node:

    [DEFAULT]
    my_ip = 192.168.111.126

4.6 VNC proxy:

    [vnc]
    enabled = true
    server_listen = $my_ip
    server_proxyclient_address = $my_ip

4.7 Image service location:

    [glance]
    api_servers = http://control:9292

4.8 Lock path:

    [oslo_concurrency]
    lock_path = /var/lib/nova/tmp

4.9 Placement access (infocore here is the password of the placement user):

    [placement]
    region_name = RegionOne
    project_domain_name = Default
    project_name = service
    auth_type = password
    user_domain_name = Default
    auth_url = http://control:5000/v3
    username = placement
    password = infocore

5. Populate the nova-api database:

    su -s /bin/sh -c "nova-manage api_db sync" nova

6. Register the cell0 database, create the cell1 cell, and populate the nova database. The results look like the figure below:

    su -s /bin/sh -c "nova-manage cell_v2 map_cell0" nova
    su -s /bin/sh -c "nova-manage cell_v2 create_cell --name=cell1 --verbose" nova
    su -s /bin/sh -c "nova-manage db sync" nova

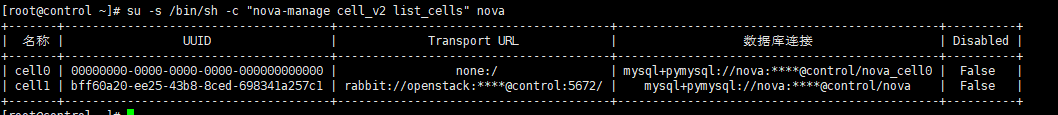

7. Verify that nova cell0 and cell1 are registered correctly. The result looks like the figure:

    su -s /bin/sh -c "nova-manage cell_v2 list_cells" nova

8. Enable and start the Compute services, as shown below:

    systemctl enable \
      openstack-nova-api.service \
      openstack-nova-scheduler.service \
      openstack-nova-conductor.service \
      openstack-nova-novncproxy.service
    systemctl start \
      openstack-nova-api.service \
      openstack-nova-scheduler.service \
      openstack-nova-conductor.service \
      openstack-nova-novncproxy.service

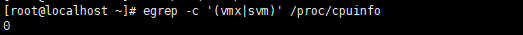

The control node is installed successfully!

On the compute nodes:

1. Install the package:

    yum install openstack-nova-compute

2. Edit the configuration file:

    vim /etc/nova/nova.conf

2.1 APIs, message queue, and this compute node's own IP address:

    [DEFAULT]
    enabled_apis = osapi_compute,metadata
    transport_url = rabbit://openstack:infocore@control
    my_ip = 192.168.111.127

2.2 Authentication strategy:

    [api]
    auth_strategy = keystone

2.3 Identity service access:

    [keystone_authtoken]
    www_authenticate_uri = http://control:5000/
    auth_url = http://control:5000/
    memcached_servers = control:11211
    auth_type = password
    project_domain_name = Default
    user_domain_name = Default
    project_name = service
    username = nova
    password = infocore

2.4 VNC:

    [vnc]
    enabled = true
    server_listen = 0.0.0.0
    server_proxyclient_address = $my_ip
    novncproxy_base_url = http://control:6080/vnc_auto.html

2.5 Image service location:

    [glance]
    api_servers = http://control:9292

2.6 Lock path:

    [oslo_concurrency]
    lock_path = /var/lib/nova/tmp

2.7 Placement access:

    [placement]
    region_name = RegionOne
    project_domain_name = Default
    project_name = service
    auth_type = password
    user_domain_name = Default
    auth_url = http://control:5000/v3
    username = placement
    password = infocore

3. Check whether the compute node supports hardware acceleration:

    egrep -c '(vmx|svm)' /proc/cpuinfo

If the command returns 0, the node has no hardware acceleration and libvirt must use QEMU instead of KVM. Add this to /etc/nova/nova.conf:

    [libvirt]
    virt_type = qemu

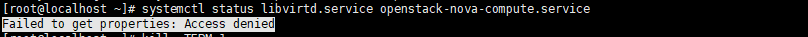
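The hardware-acceleration check above can be folded into a small function that prints the matching `virt_type`. This is a sketch, not part of the original tutorial: `pick_virt_type` is a hypothetical helper, and it takes an optional file argument only so the logic can be tried on sample data.

```shell
#!/bin/sh
# Print the libvirt virt_type suggested by a cpuinfo file: "kvm" when the
# vmx (Intel) or svm (AMD) flag is present, otherwise "qemu".
pick_virt_type() {
    cpuinfo=${1:-/proc/cpuinfo}
    # grep -c exits non-zero when the count is 0, hence the "|| true"
    count=$(grep -E -c '(vmx|svm)' "$cpuinfo" 2>/dev/null || true)
    if [ "${count:-0}" -gt 0 ]; then
        echo kvm
    else
        echo qemu
    fi
}

# On a compute node this reads /proc/cpuinfo.
echo "virt_type = $(pick_virt_type)"
```

Inside a virtual machine the result depends on whether the hypervisor exposes virtualization extensions to the guest, which is why nested test environments usually end up with `qemu`.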

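4. Enable and start the services:

    systemctl enable libvirtd.service openstack-nova-compute.service
    systemctl start libvirtd.service openstack-nova-compute.service

If the services fail to start with the error shown in the figure:

The fix is to send SIGTERM to PID 1, which makes systemd re-execute itself (much like systemctl daemon-reexec), and then start the services again:

    kill -TERM 1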

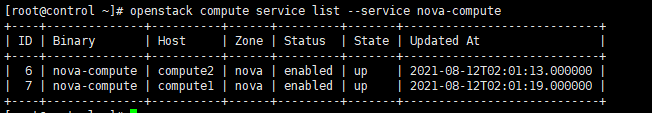

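The services now run successfully.

5. On the control node, run the following commands to confirm the compute hosts are registered and to discover them:

    . admin-openrc
    openstack compute service list --service nova-compute
    su -s /bin/sh -c "nova-manage cell_v2 discover_hosts --verbose" nova

If an error appears, power off the virtual machine, tick the two options shown in the figure in its settings, and then continue.

If you get this result, the installation succeeded: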

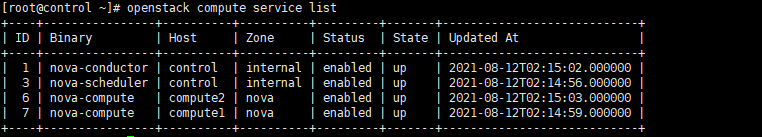

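Verify the installation

List the service components to confirm that each process started and registered successfully:

    openstack compute service list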

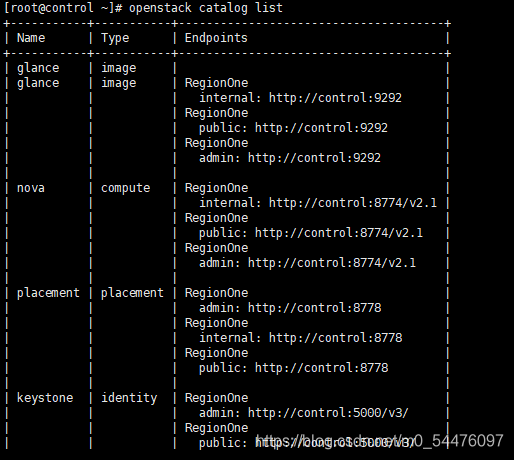

List the API endpoints in the Identity service catalog:

    openstack catalog list

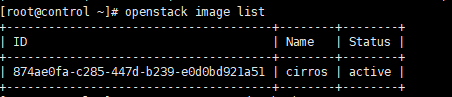

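List the images to verify connectivity with the Image service:

    openstack image list

Check that the cells and the Placement API are working:

    nova-status upgrade check

Some people may get an error here. The fix is as follows:

    vim /etc/httpd/conf.d/00-placement-api.conf

Append the block below at the end of the file. The angle-bracket tags were lost in the original; this is the commonly used form of the fix, and the enclosing Directory /usr/bin wrapper is an assumption:

    <Directory /usr/bin>
      <IfVersion >= 2.4>
        Require all granted
      </IfVersion>
      <IfVersion < 2.4>
        Order allow,deny
        Allow from all
      </IfVersion>
    </Directory>

Restart the httpd service:

    systemctl restart httpd

## Installing neutron

On the control node:

1. Preparation

Create the database (you know the drill by now):

    mysql -u root -p
    MariaDB [(none)]> CREATE DATABASE neutron;
    MariaDB [(none)]> GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'localhost' IDENTIFIED BY 'YOUR_PASSWORD';
    MariaDB [(none)]> GRANT ALL PRIVILEGES ON neutron.* TO 'neutron'@'%' IDENTIFIED BY 'YOUR_PASSWORD';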

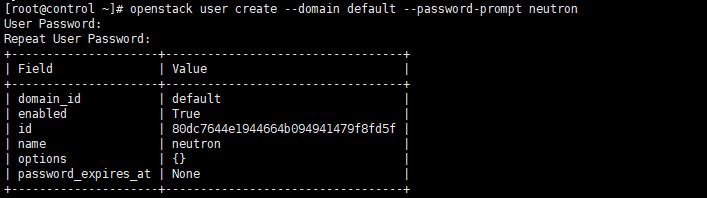

Create the neutron user and give it the admin role in the service project:

    openstack user create --domain default --password-prompt neutron
    openstack role add --project service --user neutron admin

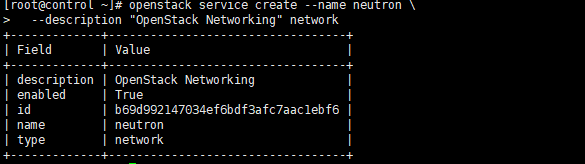

Create the neutron service entity:

    openstack service create --name neutron \
      --description "OpenStack Networking" network

Create the Networking API endpoints:

    openstack endpoint create --region RegionOne \
      network public http://control:9696
    openstack endpoint create --region RegionOne \
      network internal http://control:9696
    openstack endpoint create --region RegionOne \
      network admin http://control:9696

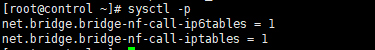

Preparation is complete.

2. Install the networking components

Install the packages:

    yum install openstack-neutron openstack-neutron-ml2 \
      openstack-neutron-linuxbridge ebtables

Edit the server configuration file (replace every infocore with your own password):

    vim /etc/neutron/neutron.conf

    [database]
    connection = mysql+pymysql://neutron:infocore@control/neutron

    [DEFAULT]
    core_plugin = ml2
    service_plugins =
    transport_url = rabbit://openstack:infocore@control
    auth_strategy = keystone
    notify_nova_on_port_status_changes = true
    notify_nova_on_port_data_changes = true

    [keystone_authtoken]
    www_authenticate_uri = http://control:5000
    auth_url = http://control:5000
    memcached_servers = control:11211
    auth_type = password
    project_domain_name = default
    user_domain_name = default
    project_name = service
    username = neutron
    password = infocore

    [nova]
    auth_url = http://control:5000
    auth_type = password
    project_domain_name = default
    user_domain_name = default
    region_name = RegionOne
    project_name = service
    username = nova
    password = infocore

    [oslo_concurrency]
    lock_path = /var/lib/neutron/tmp

Configure the Modular Layer 2 (ML2) plug-in:

    vim /etc/neutron/plugins/ml2/ml2_conf.ini

In the [ml2] section, enable flat and VLAN networks, disable self-service networks, enable the Linux bridge mechanism, and enable the port security extension driver:

    [ml2]
    type_drivers = flat,vlan
    tenant_network_types =
    mechanism_drivers = linuxbridge
    extension_drivers = port_security

In the [ml2_type_flat] section, configure the provider virtual network as a flat network:

    [ml2_type_flat]
    flat_networks = provider

In the [securitygroup] section, enable ipset to make security group rules more efficient:

    [securitygroup]
    enable_ipset = true

Configure the Linux bridge agent:

    vim /etc/neutron/plugins/ml2/linuxbridge_agent.ini

In the [linux_bridge] section, map the provider virtual network to the provider physical network interface (replace ens192 with the name of your own interface):

    [linux_bridge]
    physical_interface_mappings = provider:ens192

In the [vxlan] section, disable VXLAN overlay networks:

    [vxlan]
    enable_vxlan = false

In the [securitygroup] section, enable security groups and configure the Linux bridge iptables firewall driver:

    [securitygroup]
    enable_security_group = true
    firewall_driver = neutron.agent.linux.iptables_firewall.IptablesFirewallDriver

Make sure the Linux kernel supports network bridge filters by setting both of the following sysctl values to 1:

    vim /etc/sysctl.conf

    net.bridge.bridge-nf-call-iptables=1
    net.bridge.bridge-nf-call-ip6tables=1

Then load the kernel module and apply the settings:

    modprobe br_netfilter
    sysctl -p
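One caveat about the last two commands: `modprobe br_netfilter` only lasts until the next reboot, and the bridge sysctl keys exist only while that module is loaded. A common way to make the setup persistent is a file under `/etc/modules-load.d/`, which systemd reads at boot. The sketch below writes both pieces under a chosen root directory; `persist_bridge_filter` is a hypothetical helper, and the demo uses a scratch directory rather than the live system.

```shell
#!/bin/sh
# Write the bridge-filter settings under ROOT. Passing "" targets the live
# system, which requires root privileges.
persist_bridge_filter() {
    root=$1
    mkdir -p "$root/etc/modules-load.d"
    # systemd-modules-load reads this directory at boot
    echo br_netfilter > "$root/etc/modules-load.d/br_netfilter.conf"
    for key in net.bridge.bridge-nf-call-iptables net.bridge.bridge-nf-call-ip6tables; do
        # append each key only once, so re-running does not duplicate lines
        grep -q "^$key[ =]" "$root/etc/sysctl.conf" 2>/dev/null ||
            echo "$key=1" >> "$root/etc/sysctl.conf"
    done
}

# Demo in a scratch directory; the second call changes nothing.
scratch=$(mktemp -d)
persist_bridge_filter "$scratch"
persist_bridge_filter "$scratch"
cat "$scratch/etc/sysctl.conf"
```

The same settings are needed on each compute node, since the Linux bridge agent runs there as well.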

Configure the DHCP agent:

    vim /etc/neutron/dhcp_agent.ini

    [DEFAULT]
    interface_driver = linuxbridge
    dhcp_driver = neutron.agent.linux.dhcp.Dnsmasq
    enable_isolated_metadata = true

Configure the metadata agent:

    vim /etc/neutron/metadata_agent.ini

    [DEFAULT]
    nova_metadata_host = control
    metadata_proxy_shared_secret = infocore

Configure the Compute service to use Networking (this is nova on the control node, not the compute nodes):

    vim /etc/nova/nova.conf

    [neutron]
    auth_url = http://control:5000
    auth_type = password
    project_domain_name = default
    user_domain_name = default
    region_name = RegionOne
    project_name = service
    username = neutron
    password = infocore
    service_metadata_proxy = true
    metadata_proxy_shared_secret = infocore

3. Finalize the installation

Link the ML2 configuration to plugin.ini and populate the database:

    ln -s /etc/neutron/plugins/ml2/ml2_conf.ini /etc/neutron/plugin.ini
    su -s /bin/sh -c "neutron-db-manage --config-file /etc/neutron/neutron.conf \
      --config-file /etc/neutron/plugins/ml2/ml2_conf.ini upgrade head" neutron

Restart the Compute API service:

    systemctl restart openstack-nova-api.service

Enable and start the Networking services:

    systemctl enable neutron-server.service \
      neutron-linuxbridge-agent.service neutron-dhcp-agent.service \
      neutron-metadata-agent.service
    systemctl start neutron-server.service \
      neutron-linuxbridge-agent.service neutron-dhcp-agent.service \
      neutron-metadata-agent.service

The control node is configured!

On the compute nodes:

1. Install the packages:

    yum install openstack-neutron-linuxbridge ebtables ipset

2. Edit the configuration file:

    vim /etc/neutron/neutron.conf

In the [database] section, comment out every connection option, because compute nodes do not access the database directly. Then set:

    [DEFAULT]
    transport_url = rabbit://openstack:infocore@control
    auth_strategy = keystone

    [keystone_authtoken]
    www_authenticate_uri = http://control:5000
    auth_url = http://control:5000
    memcached_servers = control:11211
    auth_type = password
    project_domain_name = default
    user_domain_name = default
    project_name = service
    username = neutron
    password = infocore

    [oslo_concurrency]
    lock_path = /var/lib/neutron/tmp

3. Configure the networking options:

    vim /etc/neutron/plugins/ml2/linuxbridge_agent.ini

    [linux_bridge]
    physical_interface_mappings = provider:ens192

    [vxlan]
    enable_vxlan = false

    [securitygroup]
    enable_security_group = true
    firewall_driver = neutron.agent.linux.iptables_firewall.IptablesFirewallDriver

Set the bridge filter sysctl values:

    vim /etc/sysctl.conf

    net.bridge.bridge-nf-call-iptables=1
    net.bridge.bridge-nf-call-ip6tables=1

Then load the kernel module and apply the settings:

    modprobe br_netfilter
    sysctl -p

4. Configure the Compute service to use Networking:

    vim /etc/nova/nova.conf

    [neutron]
    auth_url = http://control:5000
    auth_type = password
    project_domain_name = default
    user_domain_name = default
    region_name = RegionOne
    project_name = service
    username = neutron
    password = infocore

5. Restart the Compute service, then enable and start the Linux bridge agent:

    systemctl restart openstack-nova-compute.service
    systemctl enable neutron-linuxbridge-agent.service
    systemctl start neutron-linuxbridge-agent.service

6. Verify the services (run this on the control node):

    openstack network agent list
