ResNet Code Walkthrough


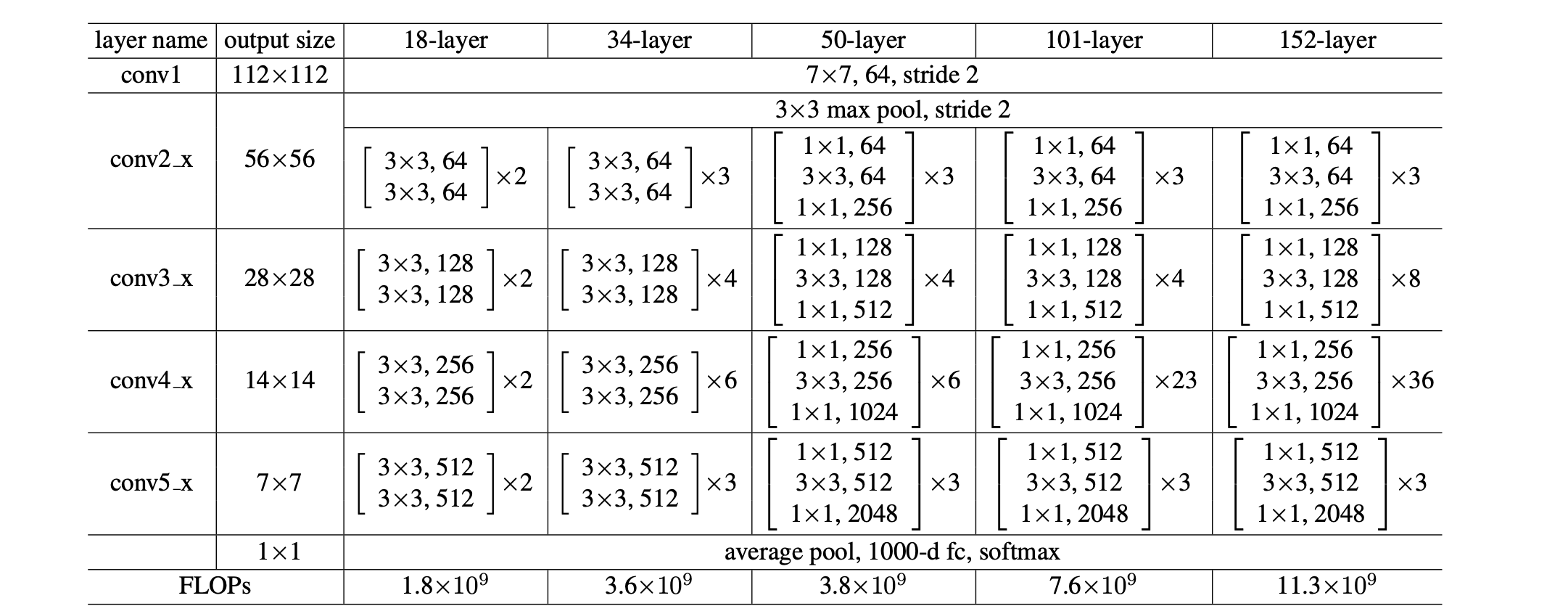

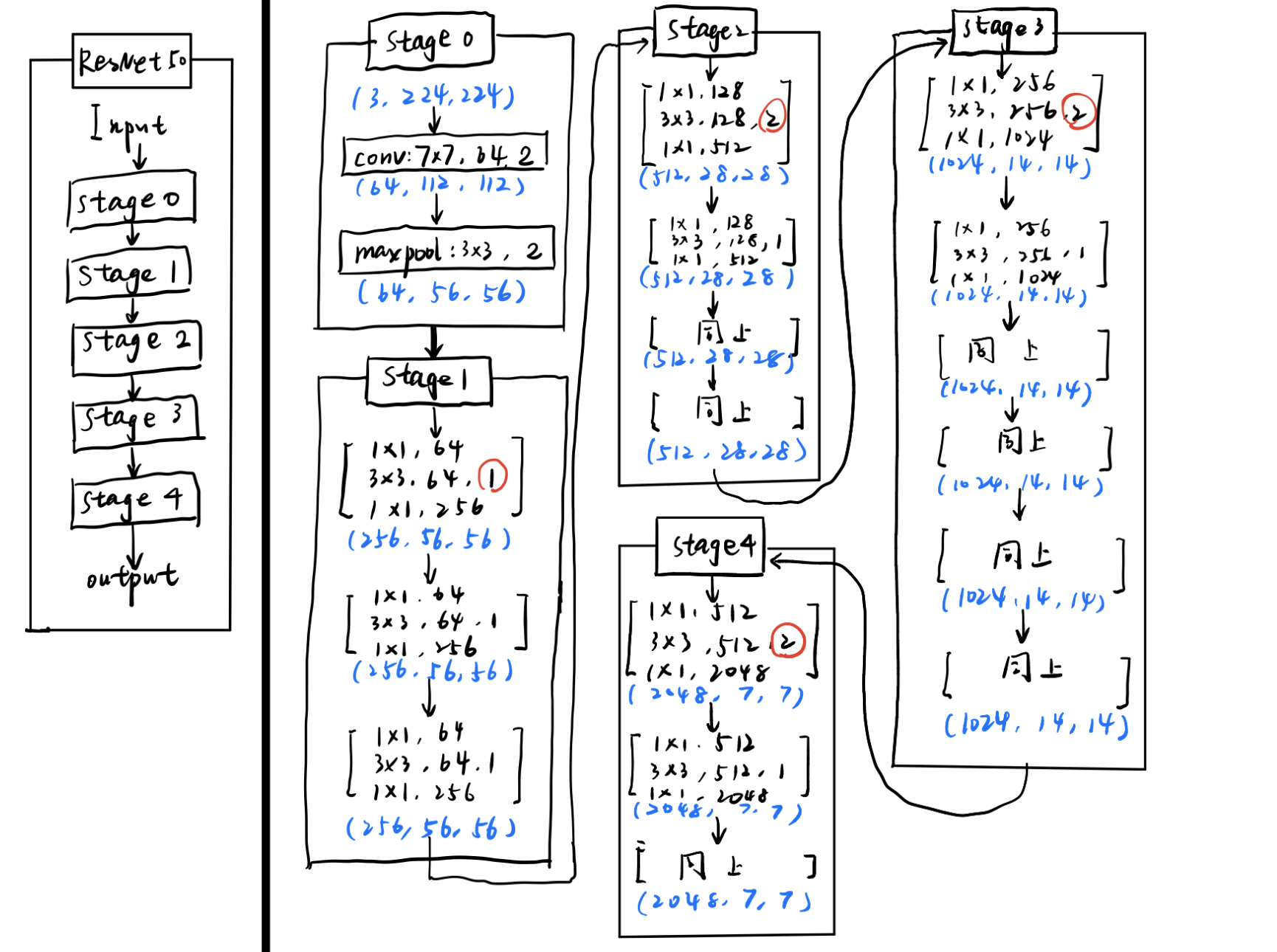

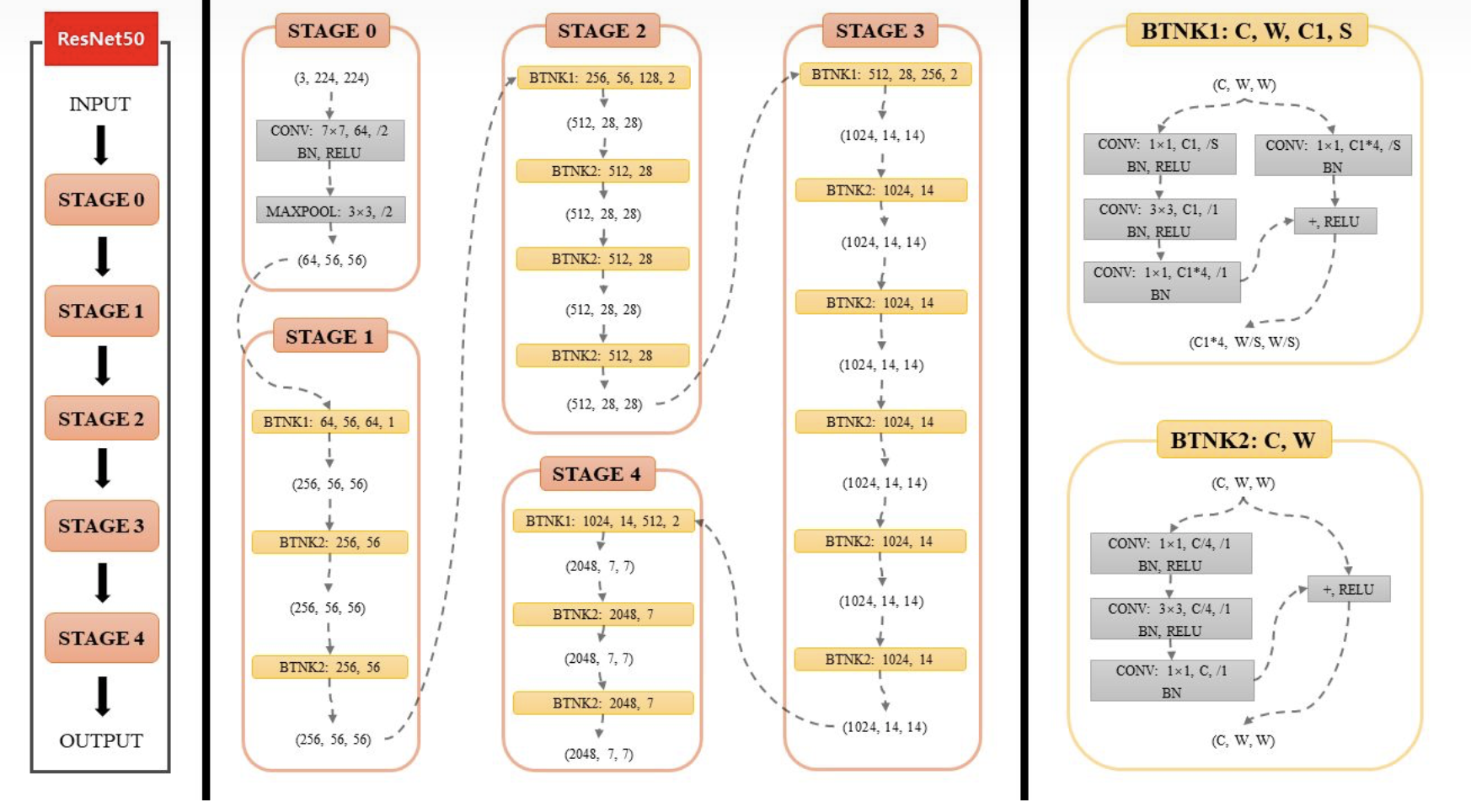

I was recently reading the Res2Net code and found it a bit unfamiliar, so I went back to study the ResNet code first. When I worked through the theory (修仙: [Paper Notes] ReNet: Deep Residual Learning for Image Recognition), I wrote my own implementation, but it was ugly and not reusable.

Figure 1

1. The BasicBlock and Bottleneck classes

Figure 1 shows five ResNets of different sizes. The 18-layer and 34-layer networks use BasicBlock, while the 50-, 101-, and 152-layer networks use Bottleneck. Let's first look at how these two block classes are written.

1.1 The BasicBlock class

    # Imports used by all the snippets in this article
    import math

    import torch.nn as nn
    import torch.utils.model_zoo as model_zoo


    def conv3x3(in_planes, out_planes, stride=1):
        """3x3 convolution with padding"""
        return nn.Conv2d(in_planes, out_planes, kernel_size=3, stride=stride,
                         padding=1, bias=False)


    # The basic block of the residual network (used in resnet18 and resnet34)
    class BasicBlock(nn.Module):
        # Both convs in a BasicBlock have the same number of channels,
        # so expansion = 1
        expansion = 1

        # inplanes is the number of input channels, planes the number of output channels
        def __init__(self, inplanes, planes, stride=1, downsample=None):
            super(BasicBlock, self).__init__()
            self.conv1 = conv3x3(inplanes, planes, stride)
            self.bn1 = nn.BatchNorm2d(planes)
            self.relu = nn.ReLU(inplace=True)
            self.conv2 = conv3x3(planes, planes)
            self.bn2 = nn.BatchNorm2d(planes)
            self.downsample = downsample
            self.stride = stride

        def forward(self, x):
            residual = x

            out = self.conv1(x)
            out = self.bn1(out)
            out = self.relu(out)

            out = self.conv2(out)
            out = self.bn2(out)

            # About downsampling: ResNet has 5 stages (0, 1, 2, 3, 4), and
            # stages 1-4 are built from blocks. The first block of stage 1 uses
            # stride=1, so with BasicBlock its shortcut needs no downsampling.
            # The first block of stages 2-4 uses stride=2, so those shortcuts do.
            if self.downsample is not None:
                residual = self.downsample(x)

            out += residual
            out = self.relu(out)

            return out

The downsampling explanation above is fairly abstract; a complete ResNet50 structure diagram makes it easier to see.

1.2 The Bottleneck class

A Bottleneck block contains three convolutions: 1x1, 3x3, and 1x1. They respectively reduce the channel dimension, perform the 3x3 convolution, and restore the channel dimension.

    class Bottleneck(nn.Module):
        # Multiplier for the number of output channels. The three convs in a
        # Bottleneck block have different channel counts: the last one has
        # 4 times as many as the first two, so expansion = 4
        expansion = 4

        def __init__(self, inplanes, planes, stride=1, downsample=None):
            super(Bottleneck, self).__init__()
            self.conv1 = nn.Conv2d(inplanes, planes, kernel_size=1, bias=False)
            self.bn1 = nn.BatchNorm2d(planes)
            self.conv2 = nn.Conv2d(planes, planes, kernel_size=3, stride=stride,
                                   padding=1, bias=False)
            self.bn2 = nn.BatchNorm2d(planes)
            self.conv3 = nn.Conv2d(planes, planes * 4, kernel_size=1, bias=False)
            self.bn3 = nn.BatchNorm2d(planes * 4)
            self.relu = nn.ReLU(inplace=True)
            self.downsample = downsample
            self.stride = stride

        def forward(self, x):
            residual = x

            out = self.conv1(x)
            out = self.bn1(out)
            out = self.relu(out)

            out = self.conv2(out)
            out = self.bn2(out)
            out = self.relu(out)

            out = self.conv3(out)
            out = self.bn3(out)

            if self.downsample is not None:
                residual = self.downsample(x)

            out += residual
            out = self.relu(out)

            return out

2. The ResNet class

    class ResNet(nn.Module):

        def __init__(self, block, layers, num_classes=1000):
            # layers is the list of block counts, one entry per stage
            super(ResNet, self).__init__()
            # Stage 0 is the same for every variant
            self.inplanes = 64
            self.conv1 = nn.Conv2d(3, 64, kernel_size=7, stride=2, padding=3,
                                   bias=False)
            self.bn1 = nn.BatchNorm2d(64)
            self.relu = nn.ReLU(inplace=True)
            self.maxpool = nn.MaxPool2d(kernel_size=3, stride=2, padding=1)
            # The variants differ from stage 1 onwards
            self.layer1 = self._make_layer(block, 64, layers[0])
            self.layer2 = self._make_layer(block, 128, layers[1], stride=2)
            self.layer3 = self._make_layer(block, 256, layers[2], stride=2)
            self.layer4 = self._make_layer(block, 512, layers[3], stride=2)
            self.avgpool = nn.AvgPool2d(7)
            self.fc = nn.Linear(512 * block.expansion, num_classes)

            # Weight initialization. The extracted source lost the loop header;
            # iterating over self.modules() is what defines m here.
            for m in self.modules():
                if isinstance(m, nn.Conv2d):
                    # Normal init with std = sqrt(2 / (k * k * out_channels))
                    n = m.kernel_size[0] * m.kernel_size[1] * m.out_channels
                    m.weight.data.normal_(0, math.sqrt(2. / n))
                elif isinstance(m, nn.BatchNorm2d):
                    m.weight.data.fill_(1)
                    m.bias.data.zero_()

        def _make_layer(self, block, planes, blocks, stride=1):
            downsample = None
            # The shortcut needs a projection if stride != 1 or the input
            # channel count differs from the output channel count.
            # Channel mismatch happens in the first block of a stage: for
            # Bottleneck in every stage from stage 1 on; for BasicBlock only
            # in stages 2-4 (stage 1 is 64 -> 64 with stride 1).
            if stride != 1 or self.inplanes != planes * block.expansion:
                downsample = nn.Sequential(
                    nn.Conv2d(self.inplanes, planes * block.expansion,
                              kernel_size=1, stride=stride, bias=False),
                    nn.BatchNorm2d(planes * block.expansion),
                )

            layers = []
            # The first block of each layer is added separately, because its
            # stride and downsample differ from the remaining blocks
            layers.append(block(self.inplanes, planes, stride, downsample))
            # Note that the updated self.inplanes is kept: the next layer
            # starts from the value computed here
            self.inplanes = planes * block.expansion
            for i in range(1, blocks):
                layers.append(block(self.inplanes, planes))

            return nn.Sequential(*layers)

        def forward(self, x):
            x = self.conv1(x)
            x = self.bn1(x)
            x = self.relu(x)
            x = self.maxpool(x)

            x = self.layer1(x)
            x = self.layer2(x)
            x = self.layer3(x)
            x = self.layer4(x)

            x = self.avgpool(x)
            x = x.view(x.size(0), -1)  # flatten to one row per sample
            x = self.fc(x)

            return x

3. Calling the ResNet class

    # Note: model_urls is not defined in this article. It must be a dict
    # mapping each model name to its pretrained checkpoint URL.

    def resnet18(pretrained=False):
        model = ResNet(BasicBlock, [2, 2, 2, 2])
        if pretrained:
            model.load_state_dict(model_zoo.load_url(model_urls['resnet18']))
        return model


    def resnet34(pretrained=False):
        model = ResNet(BasicBlock, [3, 4, 6, 3])
        if pretrained:
            model.load_state_dict(model_zoo.load_url(model_urls['resnet34']))
        return model


    def resnet50(pretrained=False):
        model = ResNet(Bottleneck, [3, 4, 6, 3])
        if pretrained:
            model.load_state_dict(model_zoo.load_url(model_urls['resnet50']))
        return model


    def resnet101(pretrained=False):
        model = ResNet(Bottleneck, [3, 4, 23, 3])
        if pretrained:
            model.load_state_dict(model_zoo.load_url(model_urls['resnet101']))
        return model


    def resnet152(pretrained=False):
        model = ResNet(Bottleneck, [3, 8, 36, 3])
        if pretrained:
            model.load_state_dict(model_zoo.load_url(model_urls['resnet152']))
        return model

That is the whole ResNet code. It is best to walk through the flow with the structure diagram and the code side by side, which makes everything much clearer. (When writing, work from the inside out: block, then layer, then ResNet. When reading, work from the outside in: ResNet, then layer, then block.)

Finally, here is a figure from 臭咸鱼: ResNet50 Network Structure Diagram and Detailed Explanation. BTNK1 in that figure is the case described above, where the input and output dimensions differ and the shortcut needs a downsample projection.

References

臭咸鱼: ResNet50 Network Structure Diagram and Detailed Explanation
小哼哼: ResNet Code Walkthrough
修仙: [Paper Notes] ReNet: Deep Residual Learning for Image Recognition
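As a quick way to check the walkthrough against the numbers, the sketch below re-derives three things in plain Python, without PyTorch: the layer count implied by each `layers` list, the feature-map size after each stage for a 224x224 input, and which stages trigger the `downsample` branch in `_make_layer`. The helper names (`conv_out`, `count_layers`, `trace_shapes`, `needs_downsample`) and the 224x224 input size are assumptions made for illustration; only the block counts, kernel sizes, strides, and paddings come from the code in this article.

```python
# Illustrative sketch only: helper names are hypothetical, not part of the article's code.
CONFIGS = {
    "resnet18": ("BasicBlock", [2, 2, 2, 2]),
    "resnet34": ("BasicBlock", [3, 4, 6, 3]),
    "resnet50": ("Bottleneck", [3, 4, 6, 3]),
    "resnet101": ("Bottleneck", [3, 4, 23, 3]),
    "resnet152": ("Bottleneck", [3, 8, 36, 3]),
}
CONVS_PER_BLOCK = {"BasicBlock": 2, "Bottleneck": 3}
EXPANSION = {"BasicBlock": 1, "Bottleneck": 4}
# (planes, stride) passed to _make_layer for layer1..layer4
STAGES = [(64, 1), (128, 2), (256, 2), (512, 2)]


def conv_out(size, kernel, stride, padding):
    """Output side length of a conv or pool layer (floor rounding)."""
    return (size + 2 * padding - kernel) // stride + 1


def count_layers(block, layers):
    """Stem conv + convs inside all blocks + final fc (shortcut convs not counted)."""
    return 1 + CONVS_PER_BLOCK[block] * sum(layers) + 1


def trace_shapes(block, size=224):
    """Return (name, channels, side length) after stage 0 and each layerN."""
    size = conv_out(size, kernel=7, stride=2, padding=3)  # conv1
    size = conv_out(size, kernel=3, stride=2, padding=1)  # maxpool
    shapes = [("stage0", 64, size)]
    for i, (planes, stride) in enumerate(STAGES, start=1):
        # Only the first block of a stage can change the spatial size
        size = conv_out(size, kernel=3, stride=stride, padding=1)
        shapes.append((f"layer{i}", planes * EXPANSION[block], size))
    return shapes


def needs_downsample(block):
    """Evaluate the _make_layer condition for the first block of each stage."""
    inplanes, flags = 64, []
    for planes, stride in STAGES:
        out_channels = planes * EXPANSION[block]
        flags.append(stride != 1 or inplanes != out_channels)
        inplanes = out_channels
    return flags


if __name__ == "__main__":
    for name, (block, layers) in CONFIGS.items():
        print(name, count_layers(block, layers), needs_downsample(block))
    print(trace_shapes("Bottleneck"))
```

Under these assumptions the layer counts come out as 18, 34, 50, 101, and 152, and the last stage ends at a 7x7 map, which is why `nn.AvgPool2d(7)` reduces it to 1x1. The `needs_downsample` result also shows the difference between the two block types: with BasicBlock the first stage needs no projection, while with Bottleneck every stage does.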
