Python数据采集 |

您所在的位置:网站首页 › python采集数据代码 › Python数据采集 |

Python数据采集

|

一、采集豆瓣电影 Top 250的数据采集

1.进入豆瓣 Top 250的网页

豆瓣电影 Top 250 2.进入开发者选项 3.进入top250中去查看相关配置

3.进入top250中去查看相关配置

右键----检查----

pycharm中 File----右键----settings----Python Interpreter----+----(添加bs4、requests、lxml等安装包)

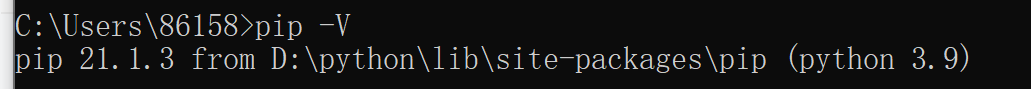

注意:如果安装库出现错误,即可能是对应的pip版本太低,需要更新 在 cmd 命令窗口查看pip版本 pip -V

更新pip版本 python -m pip install --upgrade pip

反反爬处理–——伪装浏览器 1.定义变量geaders={用户代理} 2.向服务器发送请求时携带上代理信息:response=requests.get(url,headrs = h) 6、bs4库中beautifulSoup类的使用1.导入:from bs4 import BeautifulSoup 2.定义soup对象:soup = BeautifulSoup(html,lxml) 3.使用soup对象调用类方法select(css选择器) 4.提取数据: get_text() text string 7、储存到CSV中1.先将数据存放到列表里 1.创建csv文档with open(‘xxx.csv’,‘a’,newlie=’’) as f: 3.调用CSV模块中的write()方法:w=csv.write(f 4.将列表中的数据写入文档:w.writerows(listdata) import requests,csv from bs4 import BeautifulSoup def getHtml(url): # 数据采集 h = { 'User-Agent': 'Mozilla / 5.0(Windows NT 10.0;WOW64)' } response = requests.get(url,headers = h) html = response.text # print(html) # getHtml('https://movie.douban.com/top250') # 数据解析:正则,BeautifulSoup,Xpath soup = BeautifulSoup(html,'lxml') filmtitle = soup.select('div.hd > a > span:nth-child(1)') ct = soup.select('div.bd > p:nth-child(1)') score = soup.select('div.bd > div > span.rating_num') evalue = soup.select('div.bd > div > span:nth-child(4)') print(score) filmlist = [] for t,c,s,e in zip(filmtitle,ct,score,evalue): title = t.text content = c.text filmscore = s.text num = e.text.strip('人评价') director = content.strip().split()[1] if "主演:" in content: actor = content.strip().split('主演:')[1].split()[0] else: actor = None year = content.strip().split('/')[-3].split()[-1] area = content.strip().split('/')[-2].strip() filmtype = content.strip().split('/')[-1].strip() # print(num) listdata = [title,director,actor,year,area,filmtype,filmscore,num] filmlist.append(listdata) print(filmlist) # 存储数据 with open('douban250.csv','a',encoding='utf-8',newline='') as f: w = csv.writer(f) w.writerows(filmlist) # 函数调用 listtitle = ['title','director','actor','year','area','type','score','evalueate'] with open('douban250.csv','a',encoding='utf-8',newline='') as f: w = csv.writer(f) w.writerow(listtitle) for i in range(0,226,25): getHtml('https://movie.douban.com/top250?start=%s&filter='%(i)) # getHtml('https://movie.douban.com/top250?start=150&filter=') 备注h 是到网站的开发者页面去寻找这句话就是

安居客 2.导入from lxml import etree 3.将采集的字符串转换为html格式:etree.html 4.转换后的数据调用xPath(xpath的路径):data.xpath(路径表达式) import requests from lxml import etree def getHtml(url): h = { 'user - agent': 'Mozilla / 5.0(Windows NT 10.0;Win64;x64)' } response = requests.get(url,headers=h) html = response.text # 数据解析 data = etree.HTML(html) print(data) name = data.xpath('//span[@class="items-name"]/text()') print(name) getHtml("https://bj.fang.anjuke.com/?from=AF_Home_switchcity") 三、拉勾网的数据采集 (一)requests数据采集 1.导入库 2.向服务器发送请求 3.下载数据 (二)post方式请求(参数不在url里) 1.通过网页解析找到post参数(请求数据Fromdata)并且参数定义到字典里 2.服务器发送请求调用requests.post(data=fromdata) (三)数据解析——json 1.导入 import——json 2. 将采集的json数据格式转换为python字典:json.load(json格式数据) 3.通过字典中的键访问值 (四)数据存储到mysql中 1.下载并导入pymysql import pymysql 2.建立链接:conn=pymysql.Connenct(host,port,user,oassword,db,charset=‘utf8’) 3.写sql语句 4.定义游标:cursor=conn.cursor() 5.使用定义的游标执行sql语句:cursor.execute()sql 6.向数据库提交数据:conn.commit() 7.关闭游标:cursor.close() 8.关闭链接:conn.close() 这次的爬虫是需要账户的所以h是使用的Request Headers中的数据 import requests,json,csv,time,pymysql

keyword = input("请输入查询的职务")

def getHtml(url):

# 数据采集

# h = {

# 'user-agent': ''' Mozilla/5.0 (Windows NT 10.0;

# WOW64) AppleWebKit/537.36 (KHTML, like

# Gecko) Chrome/84.0

# .4147

# .89 Safari/537.36'''

# }

h = {

'accept': 'application / json, text / javascript, * / *;q=0.01',

'accept-encoding': 'gzip, deflate, br',

'accept-language': 'zh - CN, zh;q = 0.9',

'content-length': '187',

'content-type': 'application / x - www - form - urlencoded;charset = UTF - 8',

'cookie': 'user_trace_token = 20210811171420 - 2ffaeedb - b564 - 4952 - bd4f - 98d653f8dd82;_ga = GA1.2.1124964589.1628673260;LGUID = 20210811171420 - 23da14c9 - 6dab - 4720 - bc7f - b69df2ceb1f3;sajssdk_2015_cross_new_user = 1;_gid = GA1.2.642774310.1628673284;gate_login_token = 6750561a88ef94f733a1fcd2a6b2904d62951a47bc7f22df805342a995a92f22;LG_LOGIN_USER_ID = 3ef1b26ba9555cb7bd5bc00ea2afc307e0396355ce430c44c7c7b0efd9754be6;LG_HAS_LOGIN = 1;showExpriedIndex = 1;showExpriedCompanyHome = 1;showExpriedMyPublish = 1;hasDeliver = 0;privacyPolicyPopup = false;index_location_city = % E5 % 85 % A8 % E5 % 9B % BD;RECOMMEND_TIP = true;__SAFETY_CLOSE_TIME__22449191 = 1;Hm_lvt_4233e74dff0ae5bd0a3d81c6ccf756e6 = 1628673260, 1628680870;LGSID = 20210811192110 - 5d357c31 - f5c5 - 4673 - a6fe - 1f7aa9832c9b;PRE_UTM = m_cf_cpt_baidu_pcbt;PRE_HOST = www.baidu.com;PRE_SITE = https % 3A % 2F % 2Fwww.baidu.com % 2Fother.php % 3Fsc.060000abstzkng4oZnusQQpAJ1RM1r469sUVFOyBxYezJNF % 5F3voalKPl75Aalwm55EuNlZYnyABo8sHQVsnubyZpCt6rg20SDkMFfi1ykMxURnb1uFvNn6gVFev7OaHIODVc1YLOzZuI % 5FP91K7bQn3c1eVyMa2NTINfLSw8vAeFm4RE2nVOm7rhVwkpuF8ToMGSuWJDYa5 - EfqJPTEEmFAiIPydo.7Y % 5FNR2Ar5Od663rj6tJQrGvKD77h24SU5WudF6ksswGuh9J4qt7jHzk8sHfGmYt % 5FrE - 9kYryqM764TTPqKi % 5FnYQZHuukL0.TLFWgv - b5HDkrfK1ThPGujYknHb0THY0IAYqs2v4VnL30ZN1ugFxIZ - suHYs0A7bgLw4TARqnsKLULFb5TaV8UHPS0KzmLmqnfKdThkxpyfqnHRzrjb1nH0zPsKVINqGujYkPjfsPjn3n6KVgv - b5HDknjTYPjTk0AdYTAkxpyfqnHczP1n0TZuxpyfqn0KGuAnqiDF70ZKGujYzP6KWpyfqnWDs0APzm1YznH01Ps % 26ck % 3D8577.2.151.310.161.341.197.504 % 26dt % 3D1628680864 % 26wd % 3D % 25E6 % 258B % 2589 % 25E5 % 258B % 25BE % 25E7 % 25BD % 2591 % 26tpl % 3Dtpl % 5F12273 % 5F25897 % 5F22126 % 26l % 3D1528931027 % 26us % 3DlinkName % 253D % 2525E6 % 2525A0 % 252587 % 2525E9 % 2525A2 % 252598 - % 2525E4 % 2525B8 % 2525BB % 2525E6 % 2525A0 % 252587 % 2525E9 % 2525A2 % 252598 % 2526linkText % 253D % 2525E3 % 252580 % 252590 % 2525E6 % 25258B % 252589 % 2525E5 % 25258B % 2525BE % 2525E6 % 25258B % 25259B % 2525E8 % 252581 % 252598 % 2525E3 % 252580 % 252591 % 2525E5 % 2525AE % 252598 % 2525E6 % 252596 % 2525B9 % 2525E7 % 2525BD % 252591 % 2525E7 % 2525AB % 252599 % 252520 - % 252520 % 2525E4 % 2525BA % 252592 % 2525E8 % 252581 % 252594 % 2525E7 % 2525BD % 252591 % 2525E9 % 2525AB % 252598 % 2525E8 % 252596 % 2525AA % 2525E5 % 2525A5 % 2525BD % 2525E5 % 2525B7 % 2525A5 % 2525E4 % 2525BD % 25259C % 2525EF % 2525BC % 25258C % 2525E4 % 2525B8 % 25258A % 2525E6 % 25258B % 252589 % 2525E5 % 25258B % 2525BE % 21 % 2526linkType % 253D;PRE_LAND = https % 3A % 2F % 2Fwww.lagou.com % 2Flanding - page % 2Fpc % 2Fsearch.html % 3Futm % 5Fsource % 3Dm % 5Fcf % 5Fcpt % 5Fbaidu % 5Fpcbt;_putrc = 49E09C21F104EE26123F89F2B170EADC;JSESSIONID = ABAAABAABAGABFA53D5BE8416D5D8A97CF86D4C78670054;login = true;unick = % E5 % B8 % AD % E4 % BA % 9A % E8 % 8E % 89;WEBTJ - ID = 20210811192139 - 17b34f24cf942a - 0a32363c696808 - 436d2710 - 1296000 - 17b34f24cfaa72;sensorsdata2015session = % 7B % 7D;TG - TRACK - CODE = index_user;X_HTTP_TOKEN = 0d37be70811080cf330186826144b8b492a87bfbbc;Hm_lpvt_4233e74dff0ae5bd0a3d81c6ccf756e6 = 1628681034;LGRID = 20210811192358 - 526edbb5 - b009 - 4e55 - a806 - 3b99a453ed3b;sensorsdata2015jssdkcross = % 7B % 22distinct_id % 22 % 3A % 2222449191 % 22 % 2C % 22 % 24device_id % 22 % 3A % 2217b347e188019c - 04cc2319c4da9a - 436d2710 - 1296000 - 17b347e18818b0 % 22 % 2C % 22props % 22 % 3A % 7B % 22 % 24latest_traffic_source_type % 22 % 3A % 22 % E7 % 9B % B4 % E6 % 8E % A5 % E6 % B5 % 81 % E9 % 87 % 8F % 22 % 2C % 22 % 24latest_search_keyword % 22 % 3A % 22 % E6 % 9C % AA % E5 % 8F % 96 % E5 % 88 % B0 % E5 % 80 % BC_ % E7 % 9B % B4 % E6 % 8E % A5 % E6 % 89 % 93 % E5 % BC % 80 % 22 % 2C % 22 % 24latest_referrer % 22 % 3A % 22 % 22 % 2C % 22 % 24os % 22 % 3A % 22Windows % 22 % 2C % 22 % 24browser % 22 % A % 22Chrome % 22 % 2C % 22 % 24browser_version % 22 % 3A % 2284.0.4147.89 % 22 % 7D % 2C % 22first_id % 22 % 3A % 2217b347e188019c - 04cc2319c4da9a - 436d2710 - 1296000 - 17b347e18818b0 % 22 % 7D',

'origin': 'https: // www.lagou.com',

'referer': 'https: // www.lagou.com / wn / jobs?px = default & cl = false & fromSearch = true & labelWords = sug & suginput = python & kd = python % E5 % BC % 80 % E5 % 8F % 91 % E5 % B7 % A5 % E7 % A8 % 8B % E5 % B8 % 88 & pn = 1',

'sec-fetch-dest': 'empty',

'sec-fetch-mode': 'cors',

'sec-fetch-site': 'same-origin',

'user-agent': 'Mozilla / 5.0(Windows NT10.0;WOW64) AppleWebKit / 537.36(KHTML, likeGecko) Chrome / 84.0.4147.89Safari / 537.36SLBrowser / 7.0.0.6241SLBChan / 30',

'x-anit-forge-code': '7cd23e45 - 40de - 449e - b201 - cddd619b37f3',

'x-anit-forge-token': '9b30b067 - ac6d - 4915 - af3e - c308979313d0'

}

# 定义post方式参数

for num in range(1,31):

formdata = {

'first': 'true',

'pn': num,

'kd': keyword

}

# 向服务器发送请求

response = requests.post(url, headers = h,data = formdata)

# 下载数据

jsonhtml = response.text

print(jsonhtml)

# 解析数据

dictdata = json.loads(jsonhtml)

# print(type(dictdata))

ct = dictdata['content']['positionResult']['result']

positionlist = []

for i in range(0,dictdata['content']['pageSize']):

positionName = ct[i]['positionName']

companyFullName = ct[i]['companyFullName']

city = ct[i]['city']

district = ct[i]['district']

companySize = ct[i]['companySize']

education = ct[i]['education']

salary = ct[i]['salary']

salaryMonth = ct[i]['salaryMonth']

workYear = ct[i]['workYear']

# print(workYear)

datalist = [positionName,companyFullName,city,district,companySize,education,salary,salaryMonth,workYear]

positionlist.append(datalist)

# 建立连接

conn = pymysql.Connect(host='localhost',port=3306,user='root',passwd='277877061#xyl',db='lagou',charset='utf8')

# sql

sql = "insert into lg values('%s','%s','%s','%s','%s','%s','%s','%s','%s');"%(positionName,companyFullName,city,district,companySize,education,salary,salaryMonth,workYear)

# 创建游标并执行

cursor = conn.cursor()

try:

cursor.execute(sql)

conn.commit()

except:

conn.rollback()

cursor.close()

conn.close()

# 设置时间延迟

time.sleep(3)

with open('拉勾网%s职位信息.csv'%keyword,'a',encoding='utf-8',newline='') as f:

w = csv.writer(f)

w.writerows(positionlist)

# print(ct)

# 调用函数

title = ['职位名称','公司名称','所在城市','所属地区','公司规模','教育水平','薪资范围','薪资月','工作经验']

with open('拉勾网%s职位信息.csv'%keyword,'a',encoding='utf-8',newline='') as f:

w = csv.writer(f)

w.writerow(title)

getHtml('https://www.lagou.com/jobs/v2/positionAjax.json?needAddtionalResult=false')

四、疫情数据采集

import requests,json,csv,time,pymysql

keyword = input("请输入查询的职务")

def getHtml(url):

# 数据采集

# h = {

# 'user-agent': ''' Mozilla/5.0 (Windows NT 10.0;

# WOW64) AppleWebKit/537.36 (KHTML, like

# Gecko) Chrome/84.0

# .4147

# .89 Safari/537.36'''

# }

h = {

'accept': 'application / json, text / javascript, * / *;q=0.01',

'accept-encoding': 'gzip, deflate, br',

'accept-language': 'zh - CN, zh;q = 0.9',

'content-length': '187',

'content-type': 'application / x - www - form - urlencoded;charset = UTF - 8',

'cookie': 'user_trace_token = 20210811171420 - 2ffaeedb - b564 - 4952 - bd4f - 98d653f8dd82;_ga = GA1.2.1124964589.1628673260;LGUID = 20210811171420 - 23da14c9 - 6dab - 4720 - bc7f - b69df2ceb1f3;sajssdk_2015_cross_new_user = 1;_gid = GA1.2.642774310.1628673284;gate_login_token = 6750561a88ef94f733a1fcd2a6b2904d62951a47bc7f22df805342a995a92f22;LG_LOGIN_USER_ID = 3ef1b26ba9555cb7bd5bc00ea2afc307e0396355ce430c44c7c7b0efd9754be6;LG_HAS_LOGIN = 1;showExpriedIndex = 1;showExpriedCompanyHome = 1;showExpriedMyPublish = 1;hasDeliver = 0;privacyPolicyPopup = false;index_location_city = % E5 % 85 % A8 % E5 % 9B % BD;RECOMMEND_TIP = true;__SAFETY_CLOSE_TIME__22449191 = 1;Hm_lvt_4233e74dff0ae5bd0a3d81c6ccf756e6 = 1628673260, 1628680870;LGSID = 20210811192110 - 5d357c31 - f5c5 - 4673 - a6fe - 1f7aa9832c9b;PRE_UTM = m_cf_cpt_baidu_pcbt;PRE_HOST = www.baidu.com;PRE_SITE = https % 3A % 2F % 2Fwww.baidu.com % 2Fother.php % 3Fsc.060000abstzkng4oZnusQQpAJ1RM1r469sUVFOyBxYezJNF % 5F3voalKPl75Aalwm55EuNlZYnyABo8sHQVsnubyZpCt6rg20SDkMFfi1ykMxURnb1uFvNn6gVFev7OaHIODVc1YLOzZuI % 5FP91K7bQn3c1eVyMa2NTINfLSw8vAeFm4RE2nVOm7rhVwkpuF8ToMGSuWJDYa5 - EfqJPTEEmFAiIPydo.7Y % 5FNR2Ar5Od663rj6tJQrGvKD77h24SU5WudF6ksswGuh9J4qt7jHzk8sHfGmYt % 5FrE - 9kYryqM764TTPqKi % 5FnYQZHuukL0.TLFWgv - b5HDkrfK1ThPGujYknHb0THY0IAYqs2v4VnL30ZN1ugFxIZ - suHYs0A7bgLw4TARqnsKLULFb5TaV8UHPS0KzmLmqnfKdThkxpyfqnHRzrjb1nH0zPsKVINqGujYkPjfsPjn3n6KVgv - b5HDknjTYPjTk0AdYTAkxpyfqnHczP1n0TZuxpyfqn0KGuAnqiDF70ZKGujYzP6KWpyfqnWDs0APzm1YznH01Ps % 26ck % 3D8577.2.151.310.161.341.197.504 % 26dt % 3D1628680864 % 26wd % 3D % 25E6 % 258B % 2589 % 25E5 % 258B % 25BE % 25E7 % 25BD % 2591 % 26tpl % 3Dtpl % 5F12273 % 5F25897 % 5F22126 % 26l % 3D1528931027 % 26us % 3DlinkName % 253D % 2525E6 % 2525A0 % 252587 % 2525E9 % 2525A2 % 252598 - % 2525E4 % 2525B8 % 2525BB % 2525E6 % 2525A0 % 252587 % 2525E9 % 2525A2 % 252598 % 2526linkText % 253D % 2525E3 % 252580 % 252590 % 2525E6 % 25258B % 252589 % 2525E5 % 25258B % 2525BE % 2525E6 % 25258B % 25259B % 2525E8 % 252581 % 252598 % 2525E3 % 252580 % 252591 % 2525E5 % 2525AE % 252598 % 2525E6 % 252596 % 2525B9 % 2525E7 % 2525BD % 252591 % 2525E7 % 2525AB % 252599 % 252520 - % 252520 % 2525E4 % 2525BA % 252592 % 2525E8 % 252581 % 252594 % 2525E7 % 2525BD % 252591 % 2525E9 % 2525AB % 252598 % 2525E8 % 252596 % 2525AA % 2525E5 % 2525A5 % 2525BD % 2525E5 % 2525B7 % 2525A5 % 2525E4 % 2525BD % 25259C % 2525EF % 2525BC % 25258C % 2525E4 % 2525B8 % 25258A % 2525E6 % 25258B % 252589 % 2525E5 % 25258B % 2525BE % 21 % 2526linkType % 253D;PRE_LAND = https % 3A % 2F % 2Fwww.lagou.com % 2Flanding - page % 2Fpc % 2Fsearch.html % 3Futm % 5Fsource % 3Dm % 5Fcf % 5Fcpt % 5Fbaidu % 5Fpcbt;_putrc = 49E09C21F104EE26123F89F2B170EADC;JSESSIONID = ABAAABAABAGABFA53D5BE8416D5D8A97CF86D4C78670054;login = true;unick = % E5 % B8 % AD % E4 % BA % 9A % E8 % 8E % 89;WEBTJ - ID = 20210811192139 - 17b34f24cf942a - 0a32363c696808 - 436d2710 - 1296000 - 17b34f24cfaa72;sensorsdata2015session = % 7B % 7D;TG - TRACK - CODE = index_user;X_HTTP_TOKEN = 0d37be70811080cf330186826144b8b492a87bfbbc;Hm_lpvt_4233e74dff0ae5bd0a3d81c6ccf756e6 = 1628681034;LGRID = 20210811192358 - 526edbb5 - b009 - 4e55 - a806 - 3b99a453ed3b;sensorsdata2015jssdkcross = % 7B % 22distinct_id % 22 % 3A % 2222449191 % 22 % 2C % 22 % 24device_id % 22 % 3A % 2217b347e188019c - 04cc2319c4da9a - 436d2710 - 1296000 - 17b347e18818b0 % 22 % 2C % 22props % 22 % 3A % 7B % 22 % 24latest_traffic_source_type % 22 % 3A % 22 % E7 % 9B % B4 % E6 % 8E % A5 % E6 % B5 % 81 % E9 % 87 % 8F % 22 % 2C % 22 % 24latest_search_keyword % 22 % 3A % 22 % E6 % 9C % AA % E5 % 8F % 96 % E5 % 88 % B0 % E5 % 80 % BC_ % E7 % 9B % B4 % E6 % 8E % A5 % E6 % 89 % 93 % E5 % BC % 80 % 22 % 2C % 22 % 24latest_referrer % 22 % 3A % 22 % 22 % 2C % 22 % 24os % 22 % 3A % 22Windows % 22 % 2C % 22 % 24browser % 22 % A % 22Chrome % 22 % 2C % 22 % 24browser_version % 22 % 3A % 2284.0.4147.89 % 22 % 7D % 2C % 22first_id % 22 % 3A % 2217b347e188019c - 04cc2319c4da9a - 436d2710 - 1296000 - 17b347e18818b0 % 22 % 7D',

'origin': 'https: // www.lagou.com',

'referer': 'https: // www.lagou.com / wn / jobs?px = default & cl = false & fromSearch = true & labelWords = sug & suginput = python & kd = python % E5 % BC % 80 % E5 % 8F % 91 % E5 % B7 % A5 % E7 % A8 % 8B % E5 % B8 % 88 & pn = 1',

'sec-fetch-dest': 'empty',

'sec-fetch-mode': 'cors',

'sec-fetch-site': 'same-origin',

'user-agent': 'Mozilla / 5.0(Windows NT10.0;WOW64) AppleWebKit / 537.36(KHTML, likeGecko) Chrome / 84.0.4147.89Safari / 537.36SLBrowser / 7.0.0.6241SLBChan / 30',

'x-anit-forge-code': '7cd23e45 - 40de - 449e - b201 - cddd619b37f3',

'x-anit-forge-token': '9b30b067 - ac6d - 4915 - af3e - c308979313d0'

}

# 定义post方式参数

for num in range(1,31):

formdata = {

'first': 'true',

'pn': num,

'kd': keyword

}

# 向服务器发送请求

response = requests.post(url, headers = h,data = formdata)

# 下载数据

jsonhtml = response.text

print(jsonhtml)

# 解析数据

dictdata = json.loads(jsonhtml)

# print(type(dictdata))

ct = dictdata['content']['positionResult']['result']

positionlist = []

for i in range(0,dictdata['content']['pageSize']):

positionName = ct[i]['positionName']

companyFullName = ct[i]['companyFullName']

city = ct[i]['city']

district = ct[i]['district']

companySize = ct[i]['companySize']

education = ct[i]['education']

salary = ct[i]['salary']

salaryMonth = ct[i]['salaryMonth']

workYear = ct[i]['workYear']

# print(workYear)

datalist = [positionName,companyFullName,city,district,companySize,education,salary,salaryMonth,workYear]

positionlist.append(datalist)

# 建立连接

conn = pymysql.Connect(host='localhost',port=3306,user='root',passwd='277877061#xyl',db='lagou',charset='utf8')

# sql

sql = "insert into lg values('%s','%s','%s','%s','%s','%s','%s','%s','%s');"%(positionName,companyFullName,city,district,companySize,education,salary,salaryMonth,workYear)

# 创建游标并执行

cursor = conn.cursor()

try:

cursor.execute(sql)

conn.commit()

except:

conn.rollback()

cursor.close()

conn.close()

# 设置时间延迟

time.sleep(3)

with open('拉勾网%s职位信息.csv'%keyword,'a',encoding='utf-8',newline='') as f:

w = csv.writer(f)

w.writerows(positionlist)

# print(ct)

# 调用函数

title = ['职位名称','公司名称','所在城市','所属地区','公司规模','教育水平','薪资范围','薪资月','工作经验']

with open('拉勾网%s职位信息.csv'%keyword,'a',encoding='utf-8',newline='') as f:

w = csv.writer(f)

w.writerow(title)

getHtml('https://www.lagou.com/jobs/v2/positionAjax.json?needAddtionalResult=false')

四、疫情数据采集

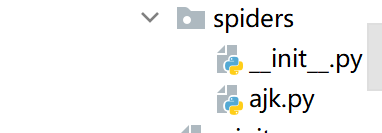

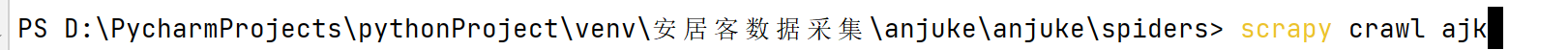

360疫情数据 import requests,json,csv,pymysql def getHtml(url): h = { 'User-Agent': 'Mozilla/5.0 (Windows NT 10.0;Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/91.0.4472.124 Safari/537.36' } response = requests.get(url,headers=h) joinhtml = response.text directro = joinhtml.split('(')[1] directro2 = directro[0:-2] dictdata = json.loads(directro2) ct = dictdata['country'] positionlist = [] for i in range(0,196): provinceName = ct[i]['provinceName'] cured = ct[i]['cured'] diagnosed = ct[i]['diagnosed'] died = ct[i]['died'] diffDiagnosed = ct[i]['diffDiagnosed'] datalist = [provinceName,cured,diagnosed,died,diffDiagnosed] positionlist.append(datalist) print(diagnosed) conn = pymysql.Connect(host = 'localhost',port = 3306,user = 'root',passwd='277877061#xyl',db='lagou',charset='utf8') sql = "insert into yq values('%s','%s','%s','%s','%s');" %(provinceName,cured,diagnosed,died,diffDiagnosed) cursor = conn.cursor() try: cursor.execute(sql) conn.commit() except: conn.rollback() cursor.close() conn.close() with open('360疫情数据.csv','a',encoding="utf-8",newline="") as f: w = csv.writer(f) w.writerows(positionlist) title = ['provinceName','cured','diagnosed','died','diffDiagnosed'] with open('360疫情数据.csv', 'a', encoding="utf-8", newline="") as f: w = csv.writer(f) w.writerow(title) getHtml('https://m.look.360.cn/events/feiyan?sv=&version=&market=&device=2&net=4&stype=&scene=&sub_scene=&refer_scene=&refer_subscene=&f=jsonp&location=true&sort=2&_=1626165806369&callback=jsonp2') 五、scrapy 数据采集 (一)创建爬虫步骤 1.创建项目,在命令行输入:scrapy startproject 项目名称 2.创建爬虫文件:在命令行定位到spiders文件夹下:scrapy genspider 爬虫文件名 网站域名 3.运行程序:scrapy crawl 爬虫文件名 (二)scrapy文件结构 1.spiders文件夹:编写解析程序 2.init.py:包标志文件,只有存在这个python文件的文件夹才是包 3.items.py:定义数据结构,用于定义爬取的字段 4.middlewares.py:中间件 5.pipliness.py:管道文件,用于定义数据存储方向 6.settings.py:配置文件,通常配置用户代理,管道文件开启 (三)scrapy爬虫框架使用流程 1.配置settings.py文件:(1)User-Agent:“浏览器参数” (2)ROBOTS协议:Fasle (3)修改ITEM_PIPLINES:打开管道 2.在item.py中定义爬取的字段 字段名 = scrapy.Field() 3.在spiders文件夹中的parse()方法中写解析代码并将解析结果提交给item对象 4.在piplines.py中定义存储路径 5.分页采集:1)查找url的规律 2)在爬虫文件中先定义页面变量,使用if判断和字符串格式化方法生成新url 3)yield scrapy Requests(url,callback=parse) 案例 (一)安居客数据采集 其中的文件venv----右键----New----Directory----安居库数据采集 venv----右键----Open in----terminal----命令行输入: scrapy startproject anjuke

回车

查看自动生成的文件

在terminal里面进去spiders目录: cd anjuke\anjuke\spiders在命令行中输入:(创建ajk.py文件) scrapy genspider ajk bj.fang.anjuke.com/?from=navigation

运行:(命令行输入) scrapy crawl ajk

1.ajk.py中 import scrapy from anjuke.items import AnjukeItem class AjkSpider(scrapy.Spider): name = 'ajk' #allowed_domains = ['bj.fang.anjuke.com/?from=navigation'] start_urls = ['http://bj.fang.anjuke.com/loupan/all/p1'] pagenum = 1 def parse(self, response): #print(response.text) item = AnjukeItem() # 解析数据 name = response.xpath('//span[@class="items-name"]/text()').extract() temp = response.xpath('//span[@class="list-map"]/text()').extract() place = [] district = [] #print(temp) for i in temp: placetemp ="".join(i.split("]")[0].strip("[").strip().split()) districttemp = i.split("]")[1].strip() # print(districttemp) place.append(placetemp) district.append(districttemp) # apartment = response.xpath('//a[@class="huxing"]/span[not(@class)]/text()').extract() area1 = response.xpath('//span[@class="building-area"]/text()').extract() area = [] for j in area1: areatemp = j.strip("建筑面积:").strip('㎡') area.append(areatemp) price = response.xpath('//p[@class="price"]/span/text()').extract() # print(name) # 将处理后的数据传入item中 item['name'] = name item['place'] = place item['district'] = district #item['apartment'] = apartment item['area'] = area item['price'] = price yield item #print(type(item['name'])) for a, b, c, d, e in zip(item['name'], item['place'], item['district'], item['area'], item['price']): print(a, b, c, d, e) # 分页爬虫 if self.pagenum < 5: self.pagenum += 1 newurl = "https://bj.fang.anjuke.com/loupan/all/p{}/".format(str(self.pagenum)) print(newurl) yield scrapy.Request(newurl, callback=self.parse) # print(type(dict(item)))2.pipelines.py中 # Define your item pipelines here # # Don't forget to add your pipeline to the ITEM_PIPELINES setting # See: https://docs.scrapy.org/en/latest/topics/item-pipeline.html # useful for handling different item types with a single interface from itemadapter import ItemAdapter # 存放到csv中 import csv,pymysql class AnjukePipeline: def open_spider(self, spider): self.f = open("安居客北京.csv", "w", encoding='utf-8', newline="") self.w = csv.writer(self.f) titlelist = ['name', 'place', 'distract', 'area', 'price'] self.w.writerow(titlelist) def process_item(self, item, spider): # writerow() [1,2,3,4] writerows() [[第一条记录],[第二条记录],[第三条记录]] # 数据处理 k = list(dict(item).values()) self.listtemp = [] for a, b, c, d, e in zip(k[0], k[1], k[2], k[3], k[4]): self.temp = [a, b, c, d, e] self.listtemp.append(self.temp) # print(listtemp) self.w.writerows(self.listtemp) return item def close_spider(self,spider): self.f.close() # 存储到mysql中 class MySqlPipeline: def open_spider(self,spider): self.conn = pymysql.Connect(host="localhost",port=3306,user='root',password='277877061#xyl',db="anjuke",charset='utf8') def process_item(self,item,spider): self.cursor = self.conn.cursor() for a, b, c, d, e in zip(item['name'], item['place'], item['district'], item['area'], item['price']): sql = 'insert into ajk values("%s","%s","%s","%s","%s");'%(a,b,c,d,e) self.cursor.execute(sql) self.conn.commit() return item def close_spider(self,spider): self.cursor.close() self.conn.close()3.items.py中 # Define here the models for your scraped items # # See documentation in: # https://docs.scrapy.org/en/latest/topics/items.html import scrapy class AnjukeItem(scrapy.Item): # define the fields for your item here like: name = scrapy.Field() place = scrapy.Field() district = scrapy.Field() #apartment = scrapy.Field() area = scrapy.Field() price = scrapy.Field()4.settings.py中 # Scrapy settings for anjuke project # # For simplicity, this file contains only settings considered important or # commonly used. You can find more settings consulting the documentation: # # https://docs.scrapy.org/en/latest/topics/settings.html # https://docs.scrapy.org/en/latest/topics/downloader-middleware.html # https://docs.scrapy.org/en/latest/topics/spider-middleware.html BOT_NAME = 'anjuke' SPIDER_MODULES = ['anjuke.spiders'] NEWSPIDER_MODULE = 'anjuke.spiders' # Crawl responsibly by identifying yourself (and your website) on the user-agent USER_AGENT = 'Mozilla / 5.0(Windows NT 10.0;WOW64)' # Obey robots.txt rules ROBOTSTXT_OBEY = False # Configure maximum concurrent requests performed by Scrapy (default: 16) #CONCURRENT_REQUESTS = 32 # Configure a delay for requests for the same website (default: 0) # See https://docs.scrapy.org/en/latest/topics/settings.html#download-delay # See also autothrottle settings and docs #DOWNLOAD_DELAY = 3 # The download delay setting will honor only one of: #CONCURRENT_REQUESTS_PER_DOMAIN = 16 #CONCURRENT_REQUESTS_PER_IP = 16 # Disable cookies (enabled by default) #COOKIES_ENABLED = False # Disable Telnet Console (enabled by default) #TELNETCONSOLE_ENABLED = False # Override the default request headers: #DEFAULT_REQUEST_HEADERS = { # 'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8', # 'Accept-Language': 'en', #} # Enable or disable spider middlewares # See https://docs.scrapy.org/en/latest/topics/spider-middleware.html #SPIDER_MIDDLEWARES = { # 'anjuke.middlewares.AnjukeSpiderMiddleware': 543, #} # Enable or disable downloader middlewares # See https://docs.scrapy.org/en/latest/topics/downloader-middleware.html #DOWNLOADER_MIDDLEWARES = { # 'anjuke.middlewares.AnjukeDownloaderMiddleware': 543, #} # Enable or disable extensions # See https://docs.scrapy.org/en/latest/topics/extensions.html #EXTENSIONS = { # 'scrapy.extensions.telnet.TelnetConsole': None, #} # Configure item pipelines # See https://docs.scrapy.org/en/latest/topics/item-pipeline.html ITEM_PIPELINES = { 'anjuke.pipelines.AnjukePipeline': 300, 'anjuke.pipelines.MySqlPipeline': 301 } # Enable and configure the AutoThrottle extension (disabled by default) # See https://docs.scrapy.org/en/latest/topics/autothrottle.html #AUTOTHROTTLE_ENABLED = True # The initial download delay #AUTOTHROTTLE_START_DELAY = 5 # The maximum download delay to be set in case of high latencies #AUTOTHROTTLE_MAX_DELAY = 60 # The average number of requests Scrapy should be sending in parallel to # each remote server #AUTOTHROTTLE_TARGET_CONCURRENCY = 1.0 # Enable showing throttling stats for every response received: #AUTOTHROTTLE_DEBUG = False # Enable and configure HTTP caching (disabled by default) # See https://docs.scrapy.org/en/latest/topics/downloader-middleware.html#httpcache-middleware-settings #HTTPCACHE_ENABLED = True #HTTPCACHE_EXPIRATION_SECS = 0 #HTTPCACHE_DIR = 'httpcache' #HTTPCACHE_IGNORE_HTTP_CODES = [] #HTTPCACHE_STORAGE = 'scrapy.extensions.httpcache.FilesystemCacheStorage'5.middlewares.py中 # Define here the models for your spider middleware # # See documentation in: # https://docs.scrapy.org/en/latest/topics/spider-middleware.html from scrapy import signals # useful for handling different item types with a single interface from itemadapter import is_item, ItemAdapter class AnjukeSpiderMiddleware: # Not all methods need to be defined. If a method is not defined, # scrapy acts as if the spider middleware does not modify the # passed objects. @classmethod def from_crawler(cls, crawler): # This method is used by Scrapy to create your spiders. s = cls() crawler.signals.connect(s.spider_opened, signal=signals.spider_opened) return s def process_spider_input(self, response, spider): # Called for each response that goes through the spider # middleware and into the spider. # Should return None or raise an exception. return None def process_spider_output(self, response, result, spider): # Called with the results returned from the Spider, after # it has processed the response. # Must return an iterable of Request, or item objects. for i in result: yield i def process_spider_exception(self, response, exception, spider): # Called when a spider or process_spider_input() method # (from other spider middleware) raises an exception. # Should return either None or an iterable of Request or item objects. pass def process_start_requests(self, start_requests, spider): # Called with the start requests of the spider, and works # similarly to the process_spider_output() method, except # that it doesn’t have a response associated. # Must return only requests (not items). for r in start_requests: yield r def spider_opened(self, spider): spider.logger.info('Spider opened: %s' % spider.name) class AnjukeDownloaderMiddleware: # Not all methods need to be defined. If a method is not defined, # scrapy acts as if the downloader middleware does not modify the # passed objects. @classmethod def from_crawler(cls, crawler): # This method is used by Scrapy to create your spiders. s = cls() crawler.signals.connect(s.spider_opened, signal=signals.spider_opened) return s def process_request(self, request, spider): # Called for each request that goes through the downloader # middleware. # Must either: # - return None: continue processing this request # - or return a Response object # - or return a Request object # - or raise IgnoreRequest: process_exception() methods of # installed downloader middleware will be called return None def process_response(self, request, response, spider): # Called with the response returned from the downloader. # Must either; # - return a Response object # - return a Request object # - or raise IgnoreRequest return response def process_exception(self, request, exception, spider): # Called when a download handler or a process_request() # (from other downloader middleware) raises an exception. # Must either: # - return None: continue processing this exception # - return a Response object: stops process_exception() chain # - return a Request object: stops process_exception() chain pass def spider_opened(self, spider): spider.logger.info('Spider opened: %s' % spider.name)6、Mysql中 1)创建anjuke数据库 mysql> create database anjuke;2)使用库 mysql> use anjuke;3)创建表 mysql> create table ajk( -> name varchar(100),place varchar(100),distarct varchar(100),area varchar(100),price varchar(50)); Query OK, 0 rows affected (0.03 sec)4)查看表结构 mysql> desc ajk; +----------+--------------+------+-----+---------+-------+ | Field | Type | Null | Key | Default | Extra | +----------+--------------+------+-----+---------+-------+ | name | varchar(100) | YES | | NULL | | | place | varchar(100) | YES | | NULL | | | distarct | varchar(100) | YES | | NULL | | | area | varchar(100) | YES | | NULL | | | price | varchar(50) | YES | | NULL | | +----------+--------------+------+-----+---------+-------+5)查看表数据 mysql> select * from ajk; +-------------------------------------+-----------------------+-----------------------------------------------------------+--------------+--------+ | name | place | distarct | area | price | +-------------------------------------+-----------------------+-----------------------------------------------------------+--------------+--------+ | 和锦华宸品牌馆 | 顺义马坡 | 和安路与大营二街交汇处东北角 | 95-180 | 400 | | 保利建工•和悦春风 | 大兴庞各庄 | 永兴河畔 | 76-109 | 36000 | | 华樾国际·领尚 | 朝阳东坝 | 东坝大街和机场二高速交叉路口西方向3... | 96-196.82 | 680 | | 金地·璟宸品牌馆 | 房山良乡 | 政通西路 | 97-149 | 390 | | 中海首钢天玺 | 石景山古城 | 北京市石景山区古城南街及莲石路交叉口... | 122-147 | 900 | | 傲云品牌馆 | 朝阳孙河 | 顺黄路53号 | 87288 | 2800 | | 首开香溪郡 | 通州宋庄 | 通顺路 | 90-220 | 39000 | | 北京城建·北京合院 | 顺义顺义城 | 燕京街与通顺路交汇口东800米(仁和公... | 93-330 | 850 | | 金融街武夷·融御 | 通州政务区 | 北京城市副中心01组团通胡大街与东六环... | 99-176 | 66000 | | 北京城建·天坛府品牌馆 | 东城天坛 | 北京市东城区景泰路与安乐林路交汇处 | 60-135 | 800 | | 亦庄金悦郡 | 大兴亦庄 | 新城环景西一路与景盛南二街交叉口 | 72-118 | 38000 | | 金茂·北京国际社区 | 顺义顺义城 | 水色时光西路西侧 | 50-118 | 175 | (二)太平洋汽车数据采集1.tpy.py中 import scrapy from taipingyangqiche.item import TaipingyangqicheItem class TpyqcSpider(scrapy.Spider): name = 'tpyqc' allowed_domains = ['price.pcauto.com.cn/top'] start_urls = ['http://price.pcauto.com.cn/top/'] pagenum = 1 def parse(self, response): item = TaipingyangqicheItem() name = response.xpath('//p[@class="sname"]/a/text()').extract() temperature = response.xpath('//p[@class="col rank"]/span[@class="fl red rd-mark"]/text()').extract() price = response.xpath('//p/em[@class="red"]/text()').extract() brand2 = response.xpath('//p[@class="col col1"]/text()').extract() brand = [] for j in brand2: areatemp = j.strip('品牌:').strip('排量:').strip('\r\n') brand.append(areatemp) brand = [i for i in brand if i != ''] rank2 = response.xpath('//p[@class="col"]/text()').extract() rank = [] for j in rank2: areatemp = j.strip('级别:').strip('变速箱:').strip('\r\n') rank.append(areatemp) rank = [i for i in rank if i != ''] item['name'] = name item['temperature'] = temperature item['price'] = price item['brand'] = brand item['rank'] = rank yield item for a, b, c, d, e in zip(item['name'], item['temperature'], item['price'], item['brand'], item['rank']): print(a, b, c, d, e) if self.pagenum < 6: self.pagenum += 1 newurl = "https://price.pcauto.com.cn/top/k0-p{}.html".format(str(self.pagenum)) print(newurl) yield scrapy.Request(newurl, callback=self.parse) # print(type(dict(item)))2.pipelines.py中 # Define your item pipelines here # # Don't forget to add your pipeline to the ITEM_PIPELINES setting # See: https://docs.scrapy.org/en/latest/topics/item-pipeline.html # useful for handling different item types with a single interface from itemadapter import ItemAdapter import csv,pymysql class TaipingyangqichePipeline: def open_spider(self, spider): self.f = open("太平洋.csv", "w", encoding='utf-8', newline="") self.w = csv.writer(self.f) titlelist = ['name', 'temperature', 'price', 'brand', 'rank'] self.w.writerow(titlelist) def process_item(self, item, spider): k = list(dict(item).values()) self.listtemp = [] for a, b, c, d, e in zip(k[0], k[1], k[2], k[3], k[4]): self.temp = [a, b, c, d, e] self.listtemp.append(self.temp) print(self.listtemp) self.w.writerows(self.listtemp) return item def close_spider(self, spider): self.f.close() class MySqlPipeline: def open_spider(self, spider): self.conn = pymysql.Connect(host="localhost", port=3306, user='root', password='277877061#xyl', db="taipy",charset='utf8') def process_item(self, item, spider): self.cursor = self.conn.cursor() for a, b, c, d, e in zip(item['name'], item['temperature'], item['price'], item['brand'], item['rank']): sql = 'insert into tpy values("%s","%s","%s","%s","%s");' % (a, b, c, d, e) self.cursor.execute(sql) self.conn.commit() return item def close_spider(self, spider): self.cursor.close() self.conn.close()3.items.py中 # Define here the models for your scraped items # # See documentation in: # https://docs.scrapy.org/en/latest/topics/items.html import scrapy class TaipingyangqicheItem(scrapy.Item): # define the fields for your item here like: name = scrapy.Field() temperature = scrapy.Field() price = scrapy.Field() brand = scrapy.Field() rank = scrapy.Field()4.settings.py中 # Scrapy settings for taipingyangqiche project # # For simplicity, this file contains only settings considered important or # commonly used. You can find more settings consulting the documentation: # # https://docs.scrapy.org/en/latest/topics/settings.html # https://docs.scrapy.org/en/latest/topics/downloader-middleware.html # https://docs.scrapy.org/en/latest/topics/spider-middleware.html BOT_NAME = 'taipingyangqiche' SPIDER_MODULES = ['taipingyangqiche.spiders'] NEWSPIDER_MODULE = 'taipingyangqiche.spiders' # Crawl responsibly by identifying yourself (and your website) on the user-agent USER_AGENT = 'Mozilla / 5.0(Windows NT 10.0;WOW64)' # Obey robots.txt rules ROBOTSTXT_OBEY = False # Configure maximum concurrent requests performed by Scrapy (default: 16) #CONCURRENT_REQUESTS = 32 # Configure a delay for requests for the same website (default: 0) # See https://docs.scrapy.org/en/latest/topics/settings.html#download-delay # See also autothrottle settings and docs #DOWNLOAD_DELAY = 3 # The download delay setting will honor only one of: #CONCURRENT_REQUESTS_PER_DOMAIN = 16 #CONCURRENT_REQUESTS_PER_IP = 16 # Disable cookies (enabled by default) #COOKIES_ENABLED = False # Disable Telnet Console (enabled by default) #TELNETCONSOLE_ENABLED = False # Override the default request headers: #DEFAULT_REQUEST_HEADERS = { # 'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8', # 'Accept-Language': 'en', #} # Enable or disable spider middlewares # See https://docs.scrapy.org/en/latest/topics/spider-middleware.html #SPIDER_MIDDLEWARES = { # 'taipingyangqiche.middlewares.TaipingyangqicheSpiderMiddleware': 543, #} # Enable or disable downloader middlewares # See https://docs.scrapy.org/en/latest/topics/downloader-middleware.html #DOWNLOADER_MIDDLEWARES = { # 'taipingyangqiche.middlewares.TaipingyangqicheDownloaderMiddleware': 543, #} # Enable or disable extensions # See https://docs.scrapy.org/en/latest/topics/extensions.html #EXTENSIONS = { # 'scrapy.extensions.telnet.TelnetConsole': None, #} # Configure item pipelines # See https://docs.scrapy.org/en/latest/topics/item-pipeline.html ITEM_PIPELINES = { 'taipingyangqiche.pipelines.TaipingyangqichePipeline': 300, 'taipingyangqiche.pipelines.MySqlPipeline': 301 } # Enable and configure the AutoThrottle extension (disabled by default) # See https://docs.scrapy.org/en/latest/topics/autothrottle.html #AUTOTHROTTLE_ENABLED = True # The initial download delay #AUTOTHROTTLE_START_DELAY = 5 # The maximum download delay to be set in case of high latencies #AUTOTHROTTLE_MAX_DELAY = 60 # The average number of requests Scrapy should be sending in parallel to # each remote server #AUTOTHROTTLE_TARGET_CONCURRENCY = 1.0 # Enable showing throttling stats for every response received: #AUTOTHROTTLE_DEBUG = False # Enable and configure HTTP caching (disabled by default) # See https://docs.scrapy.org/en/latest/topics/downloader-middleware.html#httpcache-middleware-settings #HTTPCACHE_ENABLED = True #HTTPCACHE_EXPIRATION_SECS = 0 #HTTPCACHE_DIR = 'httpcache' #HTTPCACHE_IGNORE_HTTP_CODES = [] #HTTPCACHE_STORAGE = 'scrapy.extensions.httpcache.FilesystemCacheStorage'5.middlewares.py中 # Define here the models for your spider middleware # # See documentation in: # https://docs.scrapy.org/en/latest/topics/spider-middleware.html from scrapy import signals # useful for handling different item types with a single interface from itemadapter import is_item, ItemAdapter class TaipingyangqicheSpiderMiddleware: # Not all methods need to be defined. If a method is not defined, # scrapy acts as if the spider middleware does not modify the # passed objects. @classmethod def from_crawler(cls, crawler): # This method is used by Scrapy to create your spiders. s = cls() crawler.signals.connect(s.spider_opened, signal=signals.spider_opened) return s def process_spider_input(self, response, spider): # Called for each response that goes through the spider # middleware and into the spider. # Should return None or raise an exception. return None def process_spider_output(self, response, result, spider): # Called with the results returned from the Spider, after # it has processed the response. # Must return an iterable of Request, or item objects. for i in result: yield i def process_spider_exception(self, response, exception, spider): # Called when a spider or process_spider_input() method # (from other spider middleware) raises an exception. # Should return either None or an iterable of Request or item objects. pass def process_start_requests(self, start_requests, spider): # Called with the start requests of the spider, and works # similarly to the process_spider_output() method, except # that it doesn’t have a response associated. # Must return only requests (not items). for r in start_requests: yield r def spider_opened(self, spider): spider.logger.info('Spider opened: %s' % spider.name) class TaipingyangqicheDownloaderMiddleware: # Not all methods need to be defined. If a method is not defined, # scrapy acts as if the downloader middleware does not modify the # passed objects. @classmethod def from_crawler(cls, crawler): # This method is used by Scrapy to create your spiders. s = cls() crawler.signals.connect(s.spider_opened, signal=signals.spider_opened) return s def process_request(self, request, spider): # Called for each request that goes through the downloader # middleware. # Must either: # - return None: continue processing this request # - or return a Response object # - or return a Request object # - or raise IgnoreRequest: process_exception() methods of # installed downloader middleware will be called return None def process_response(self, request, response, spider): # Called with the response returned from the downloader. # Must either; # - return a Response object # - return a Request object # - or raise IgnoreRequest return response def process_exception(self, request, exception, spider): # Called when a download handler or a process_request() # (from other downloader middleware) raises an exception. # Must either: # - return None: continue processing this exception # - return a Response object: stops process_exception() chain # - return a Request object: stops process_exception() chain pass def spider_opened(self, spider): spider.logger.info('Spider opened: %s' % spider.name) |

【本文地址】

今日新闻 |

推荐新闻 |

运行也是要在终端:

运行也是要在终端: