【精选】事件解析树Drain3使用方法和解释 |

您所在的位置:网站首页 › punctuation记忆方法 › 【精选】事件解析树Drain3使用方法和解释 |

【精选】事件解析树Drain3使用方法和解释

|

事件解析树Drain

开源代码链接:https://github.com/IBM/Drain3 开源代码:IBM/Drain3 起初是香港中文大学发表的论文,发明的目的用于解析日志模板,发起研究并开放源码,后由IBM团队的工程师发掘,进行了python3.x的迭代,重构了代码,方法是完全一样的方法,Drain升级为Drain3。 这里是原作论文地址: http://jiemingzhu.github.io/pub/pjhe_icws2017.pdf Drain3原理作用介绍以下文章部分介绍了IBM团队在香港中文大学团队工作的基础上,进一步完善的工作,和Drain3的能力介绍。 Use open source Drain3 log-template mining project to monitor for network outages使用开源的Drain3日志模板挖掘项目来监控网络中断Log-template mining is a method for extracting useful information from unstructured log files. This blog post explains how you can use log-template mining to monitor for network outages and introduces you to Drain3, an open source streaming log template miner.日志模板挖掘是一种从非结构化日志文件中提取有用信息的方法。这篇博文解释了如何使用日志模板挖掘来监视网络中断,并向您介绍了Drain3,这是一款开源流日志模板挖掘工具。 Our main goal in this use case is the early identification of incidents in the networking infrastructure of data centers that are part of the IBM Cloud. The IBM Cloud is based on many data centers in different regions and continents. At each data center (DC), thousands of network devices produce a high volume of syslog events. Using that information to quickly identify and troubleshoot outages and performance issues in the networking infrastructure helps reduce downtime and improve customer satisfaction. 在这个用例中,我们的主要目标是尽早识别属于IBM Cloud的数据中心的网络基础设施中的事件。IBM Cloud基于位于不同地区和大陆的许多数据中心。在每个数据中心(DC),数千个网络设备都会产生大量的syslog事件。使用该信息快速识别和排除网络基础设施中的中断和性能问题,有助于减少停机时间并提高客户满意度。 Logs usually exist as raw text files, although they can also be in other formats like csv, json, etc. A log contains a series of events that occurred in the system/component that wrote them. Each event usually carries a timestamp field and a free text field that describes the nature of the event (generated from a template written in a free language). Usually, each log event includes additional information, which might cover event severity level, thread ID, ID of the software component that wrote the event, host name, etc. 日志通常以原始文本文件的形式存在,尽管它们也可以是其他格式,如csv、json等。日志包含在编写日志的系统/组件中发生的一系列事件。每个事件通常都带有一个时间戳字段和一个描述事件性质的自由文本字段(由一个用自由语言编写的模板生成)。通常,每个日志事件都包含额外的信息,包括事件的严重级别、线程ID、编写事件的软件组件ID、主机名等。 This log event contains the following information parts (headers): timestamp, hostname, priority, and message body. 该日志事件包含以下信息部分(头):时间戳、主机名、优先级和消息体。 Each data center contains devices covering many vendors, types and versions. And these devices output log messages in many formats. Our first step was to extract the headers specified above. This can be done deterministically with some regular-expression based rules.每个数据中心包含许多厂商、类型和版本的设备。这些设备以多种格式输出日志消息。我们的第一步是提取上面指定的头文件。这可以通过一些基于正则表达式的规则确定地完成。 Logs vs metrics日志和指标When we want to troubleshoot or debug a complex software component, logs are usually among our best friends. Often we can also use metrics that cover periodic measurements of the system’s performance. These may include: CPU usage, memory usage, number of requests per second, etc. There are multiple mature and useful techniques and algorithms to analyze metrics, but significantly fewer to analyze logs. Therefore, we decided to build our own.当我们想要排除故障或调试一个复杂的软件组件时,日志通常是我们最好的朋友。通常,我们还可以使用包含系统性能周期性度量的指标。这些可能包括:CPU使用情况、内存使用情况、每秒请求数等。有多种成熟且有用的技术和算法用于分析指标,但用于分析日志的技术和算法却少得多。因此,我们决定自己建一个。 While metrics are easier to deal with because they represent a more structured type of information, they don’t always have the information we’re looking for. From a developer perspective, the effort required to add a new type of metric is greater than what is needed to add a new log event. Also, we usually want to keep the number of metrics we collect reasonably small to avoid bloating storage space and I/O.虽然度量标准更容易处理,因为它们代表了一种更结构化的信息类型,但它们并不总是包含我们正在寻找的信息。从开发人员的角度来看,添加新类型的度量所需的工作量要比添加新日志事件所需的工作量大。此外,我们通常希望将收集的指标数量保持在相当小的范围内,以避免增加存储空间和I/O。 We tend to add metric types post-mortem (after some kind of error occurred) in an effort to detect that same kind of error in the future. Software with a good set of metrics is usually mature and has been deployed in production for a long period. Logging, on the other hand, is easier to write and more expressive. This naturally results in log files containing more information that is useful for debugging than what is found in metric streams. This is especially true in less-mature systems. As a result, logs are better for troubleshooting errors and performance problems that were not anticipated when the software was written.我们倾向于在事后(发生某种类型的错误之后)添加度量类型,以便在将来检测到相同类型的错误。具有良好度量标准的软件通常是成熟的,并且已经在生产环境中部署了很长一段时间。另一方面,日志记录更容易编写,也更有表现力。这自然会导致日志文件中包含比度量流中更有用的调试信息。在不太成熟的系统中尤其如此。因此,日志可以更好地排除在编写软件时没有预料到的错误和性能问题。 Dealing with the unstructured nature of logs处理日志的非结构化性质One major difference between a log file and a book, for example, is that a log is produced from a finite number of immutable print statements. The log template in each print statement never changes, and the only dynamic part is the template variables. For example, the following Python print statement would generate the free-text (message-body) portion of the log event in the example above:例如,日志文件和书籍之间的一个主要区别是,日志是由有限数量的不可变打印语句生成的。每条打印语句中的日志模板都不会改变,唯一动态的部分是模板变量。例如,下面的Python print语句将在上面的例子中生成日志事件的自由文本(message-body)部分: print (f’User {user_name} logged out successfully, session duration = {hours} hours, {seconds} seconds’)It’s generally not a simple task to identify the templates used in a log file because the source code or parts of it are not always available. If we had a unique identifier per event type included in each log message, that would help a lot, but that kind of information is rarely included.识别日志文件中使用的模板通常不是一项简单的任务,因为源代码或其中的部分并不总是可用的。如果在每个日志消息中包含的每个事件类型都有一个惟一标识符,那将非常有帮助,但是很少包含这类信息。 Fortunately, the de-templating problem has received some research attention in recent years. The LogPAI project at the Chinese University of Hong Kong performed extensive benchmarking on the accuracy of 13 template miners. We evaluated the leading performers on that benchmark for our use case and settled on Drain as the best template-miner for our needs. Drain uses a simple approach for de-templating that is both accurate and fast.幸运的是,去模板问题近年来得到了一些研究关注。香港中文大学的LogPAI项目对13个模板挖掘器的准确性进行了广泛的基准测试。我们在用例的基准测试中评估了领先的性能,并确定Drain是最适合我们需求的模板挖掘器。Drain使用一种既准确又快速的简单方法去模板化。 Preparing Drain for production usage为生产使用准备DrainMoving forward, our next step was to extend Drain for the production environment, giving birth to Drain3 (i.e., adjusted for Python 3). In our scenario, syslogs are collected from the IBM Cloud network devices and then streamed into an Apache Kafka topic. Although Drain was developed with a streaming use-case in mind, we had to solve some issues before we could use Drain in a production pipeline. We detail these issues below.继续前进,我们的下一步是在生产环境中扩展Drain,产生Drain3(即,针对Python 3进行调整)。在我们的场景中,syslog日志从IBM云网络设备收集,然后流到Apache Kafka主题中。尽管Drain是基于流用例开发的,但在我们能够在生产管道中使用Drain之前,我们必须解决一些问题。我们将在下面详细讨论这些问题。 Refactoring - Drain was originally implemented in Python 2.7. We converted the code into a modern Python 3.6 codebase, while refactoring, improving the readability of the code, removing dependencies (e.g., pandas) and unused code, and fixing some bugs.重构——Drain最初是在Python 2.7中实现的。我们将代码转换为现代的Python 3.6代码库,同时进行重构,提高了代码的可读性,删除了依赖项(如pandas)和未使用的代码,并修复了一些bug。 Streaming Support - Instead of using the original function that reads a static log file, we externalized the log-reading code, and added a feeding function add_log_message() to process the next log line and return the ID of the identified log cluster (template). The cluster ID format was changed from a hash code into a more human-readable sequential ID.流支持——我们没有使用读取静态日志文件的原始函数,而是将日志读取代码外部化,并添加了一个补充函数add_log_message()来处理下一个日志行,并返回标识的日志集群(模板)的ID。集群ID格式从哈希码改为更易于阅读的顺序ID。 Resiliency - A production pipeline must be resilient. Drain is a stateful algorithm that maintains a parse tree that can change after each new log line begins being processed. To ensure resiliency, the template extractor must be able to restart using a saved state. In this way, after a failure, any knowledge already gathered will remain when the process recovers. Otherwise, we would have to stream all the logs from scratch to reach the same knowledge level. The state should be saved to a fault-tolerant, distributed medium and not a local file. A messaging system such as Apache Kafka is suitable for this, since it is distributed and persistent. Moreover, we were already using Kafka in our pipeline and did not want to add a dependency on another database/technology.弹性——生产管道必须具有弹性。Drain是一种有状态算法,它维护一个解析树,可以在开始处理每个新的日志行之后更改该解析树。为了确保弹性,模板提取器必须能够使用保存的状态重新启动。这样,在失败之后,当过程恢复时,已经收集到的任何知识都将保留下来。否则,我们将不得不从头开始流所有的日志,以达到相同的知识水平。状态应该保存到一个容错的分布式媒体,而不是本地文件。像Apache Kafka这样的消息传递系统适合于此,因为它是分布式的和持久的。此外,我们已经在我们的管道中使用Kafka,并不想添加对另一个数据库/技术的依赖。 We had the Drain state serialized and published into a dedicated Kafka topic upon each change. Our assumption was that after an initial learning period, new or changed templates will occur rather infrequently, and that would not be a big performance hit. Another advantage of Kafka as a persistence medium is that, in addition to loading the latest state, we could also load prior versions, depending on the topic size, which is dependent on the topic retention policy we define.我们序列化了Drain状态,并在每次更改时将其发布到一个专用的Kafka主题中。我们的假设是,在最初的学习阶段之后,新的或更改的模板将很少出现,这不会对性能造成很大的影响。Kafka作为持久性媒体的另一个优势是,除了加载最新的状态,我们还可以加载以前的版本,这取决于主题的大小,这取决于我们定义的主题保留策略。 We also made the persistence pluggable (i.e., injectable), so it would be easy to add additional persistence mediums or databases, and provide persistence to a local file for testing purposes.我们还使持久性可插入(即可注入),因此很容易添加额外的持久性介质或数据库,并将持久性提供给本地文件以进行测试。 Masking - We also improved the template mining accuracy by adding support for masking. Masking of a log message is the process of identifying constructs such as decimal numbers, hex numbers, IPs, email addresses, and so on, and replacing those with wildcards. In Drain3, masking is performed prior to processing of the log de-templating algorithm to help improve its accuracy. For example, the message ‘request 0xefef23 from 127.0.0.1 handled in 23ms‘ could be masked to ‘request from handled in ms’. Usually, just a few regular expression rules are sufficient for masking.屏蔽——我们还通过添加对屏蔽的支持来提高模板挖掘的精度。屏蔽日志消息是识别诸如十进制数、十六进制数、ip、电子邮件地址等结构,并使用通配符替换这些结构的过程。在Drain3中,在处理日志反模板算法之前进行屏蔽,以帮助提高其准确性。例如,消息’ request 0xefef23 from 127.0.0.1 handled in 23ms ‘可以被屏蔽为’ request from handled in ms '。通常,只需几个正则表达式规则就足以屏蔽。 Packaging - The improved Drain3 code-base, including state persistence support and the enhancements, is available as an open source software under the MIT license in GitHub: https://github.com/IBM/Drain3. It is also consumable with pip as a PyPI package. Contributions are welcome.打包—经过改进的Drain3代码库,包括状态持久性支持和增强功能,可以在GitHub上作为开源软件在MIT许可下使用:https://github.com/IBM/Drain3。它还可以作为PyPI包与pip一起使用。贡献是受欢迎的。 Using log templates for analytics使用日志模板进行分析Now that we could identify the template for each log message, how would we use that information to identify outages? Our model detects sudden changes in the frequencies of message types over time, and those may indicate networking issues. Take for example, a network device that normally outputs 10 log message of type L1 every 5 minutes. If that rate drops to 0, it might mean the device is down. Similarly, if the rate increased significantly, it may indicate another issue, e.g. a DDoS attack. Generally, rare types of messages, or message types we have never seen before, are also highly correlated with issues. This is keeping in mind that errors in a production data center are rare.既然我们可以为每个日志消息确定模板,那么如何使用该信息来确定中断呢?我们的模型检测随着时间的推移消息类型频率的突然变化,这些变化可能表明网络问题。例如,某网络设备每5分钟正常输出10条L1类型的日志信息。如果速率下降到0,这可能意味着设备故障了。类似地,如果速率显著增加,可能意味着另一个问题,例如DDoS攻击。通常,罕见的消息类型或我们从未见过的消息类型也与问题高度相关。这要记住,生产数据中心中很少出现错误。 It is also possible to analyze the values of parameters in the log messages, just as we would for metrics. An anomaly in a parameter value could indicate of an issue. For example, we could look at a log message that prints the time it took to perform some operation:还可以分析日志消息中的参数值,就像我们分析度量一样。参数值的异常可能表明有问题。例如,我们可以查看一条日志消息,它打印了执行某些操作所花费的时间: fine tuning completed (took ms)Or a new categorical (string) parameter we never encountered before, e.g., a new task name that did not load:或者一个我们从未遇到过的新的类别(字符串)参数,例如,一个没有加载的新任务名: Task Did Not Load:Let’s take the following synthetic log snippet and see how we turn it into a time series on which we can do anomaly detection:让我们以以下合成日志片段为例,看看我们如何将其转换为一个时间序列,以便进行异常检测:

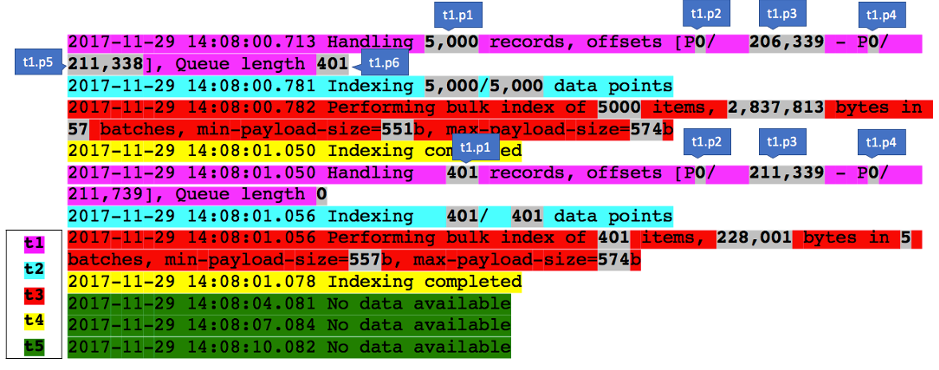

We cluster the messages using the color codes for message types, as follows:我们使用消息类型的颜色编码对消息进行聚类,如下所示:

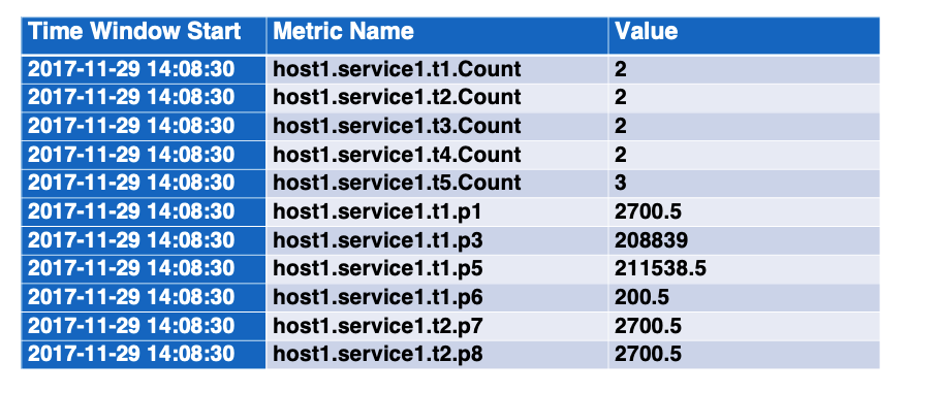

Now we count the occurrences of each message type in each time window, and also calculate an average value for each parameter:现在我们计算每种消息类型在每个时间窗口中的出现次数,并计算每个参数的平均值:

The outcome is multiple time-series, which can be used for anomaly detection.结果是多个时间序列,可用于异常检测。 What’s next?接下来是什么?Of course, not every anomaly is an outage or an incident. In a big data center, it is almost guaranteed that you will get a few anomalies every hour. Weighting and correlating multiple anomalies is a must in order to estimate when an alert is justified.当然,并不是每一个异常都是中断或事故。在大型数据中心,几乎可以保证每小时都会出现一些异常。加权和关联多个异常是必须的,以估计什么时候警报是合理的。 Drain3开源代码使用介绍以下文章是对开源Drain3代码的使用介绍,使用方式,和流程 Introduction介绍Drain3 is an online log template miner that can extract templates (clusters) from a stream of log messages in a timely manner. It employs a parse tree with fixed depth to guide the log group search process, which effectively avoids constructing a very deep and unbalanced tree. Drain3是一款在线日志模板挖掘工具,可以从日志消息流中及时提取模板(集群)。它采用固定深度的解析树来指导日志组搜索过程,有效地避免了构造深度非常大、不平衡的树。 Drain3 continuously learns on-the-fly and extracts log templates from raw log entries. Drain3不断动态学习,并从原始日志条目中提取日志模板。 Example:例子For the input: connected to 10.0.0.1 connected to 192.168.0.1 Hex number 0xDEADBEAF user davidoh logged in user eranr logged inDrain3 extracts the following templates: Drain3提取了以下模板: ID=1 : size=2 : connected to ID=2 : size=1 : Hex number ID=3 : size=2 : user logged inFull sample program output: 完整的示例程序输出: Starting Drain3 template miner Checking for saved state Saved state not found Drain3 started with 'FILE' persistence Starting training mode. Reading from std-in ('q' to finish) > connected to 10.0.0.1 Saving state of 1 clusters with 1 messages, 528 bytes, reason: cluster_created (1) {"change_type": "cluster_created", "cluster_id": 1, "cluster_size": 1, "template_mined": "connected to ", "cluster_count": 1} Parameters: [ExtractedParameter(value='10.0.0.1', mask_name='IP')] > connected to 192.168.0.1 {"change_type": "none", "cluster_id": 1, "cluster_size": 2, "template_mined": "connected to ", "cluster_count": 1} Parameters: [ExtractedParameter(value='192.168.0.1', mask_name='IP')] > Hex number 0xDEADBEAF Saving state of 2 clusters with 3 messages, 584 bytes, reason: cluster_created (2) {"change_type": "cluster_created", "cluster_id": 2, "cluster_size": 1, "template_mined": "Hex number ", "cluster_count": 2} Parameters: [ExtractedParameter(value='0xDEADBEAF', mask_name='HEX')] > user davidoh logged in Saving state of 3 clusters with 4 messages, 648 bytes, reason: cluster_created (3) {"change_type": "cluster_created", "cluster_id": 3, "cluster_size": 1, "template_mined": "user davidoh logged in", "cluster_count": 3} Parameters: [] > user eranr logged in Saving state of 3 clusters with 5 messages, 644 bytes, reason: cluster_template_changed (3) {"change_type": "cluster_template_changed", "cluster_id": 3, "cluster_size": 2, "template_mined": "user logged in", "cluster_count": 3} Parameters: [ExtractedParameter(value='eranr', mask_name='*')] > q Training done. Mined clusters: ID=1 : size=2 : connected to ID=2 : size=1 : Hex number ID=3 : size=2 : user logged inThis project is an upgrade of the original Drain project by LogPAI from Python 2.7 to Python 3.6 or later with additional features and bug-fixes. Read more information about Drain from the following paper: Pinjia He, Jieming Zhu, Zibin Zheng, and Michael R. Lyu. Drain: An Online Log Parsing Approach with Fixed Depth Tree, Proceedings of the 24th International Conference on Web Services (ICWS), 2017. (2017年香港中文大学初创的分析方法,并将原理表论文。git项目地址:https://github.com/logpai/logparser) A Drain3 use case is presented in this blog post: Use open source Drain3 log-template mining project to monitor for network outages . (近年IBM团队基于2017年的工作,进一步重构代码,并增加相应设计在里边,具体内容见博客。) New features 新特性 Persistence. Save and load Drain state into an Apache Kafka topic, Redis or a file.Streaming. Support feeding Drain with messages one-be-one.Masking. Replace some message parts (e.g numbers, IPs, emails) with wildcards. This improves the accuracy of template mining.Packaging. As a pip package.Configuration. Support for configuring Drain3 using an .ini file or a configuration object.Memory efficiency. Decrease the memory footprint of internal data structures and introduce cache to control max memory consumed (thanks to @StanislawSwierc)Inference mode. In case you want to separate training and inference phase, Drain3 provides a function for fast matching against already-learned clusters (templates) only, without the usage of regular expressions.Parameter extraction. Accurate extraction of the variable parts from a log message as an ordered list, based on its mined template and the defined masking instructions (thanks to @Impelon).略 Expected Input and Output 预期的输入和输出Although Drain3 can be ingested with full raw log message, template mining accuracy can be improved if you feed it with only the unstructured free-text portion of log messages, by first removing structured parts like timestamp, hostname. severity, etc. 虽然Drain3可以吸收完整的原始日志消息,但是如果只提供日志消息的非结构化自由文本部分,则可以提高模板挖掘的准确性,首先删除结构化部分,如时间戳、主机名。严重程度等。 The output is a dictionary with the following fields: 输出是一个字典,包含以下字段: change_type - indicates either if a new template was identified, an existing template was changed or message added to an existing cluster.表明如果一个新模板被确认,现有模板被改变或消息添加到现有的集群。cluster_id - Sequential ID of the cluster that the log belongs to.顺序的ID日志属于集群。cluster_size- The size (message count) of the cluster that the log belongs to.日志所属集群的大小(消息计数)。cluster_count - Count clusters seen so far.集群计数。template_mined- the last template of above cluster_id.上面cluster_id的最后一个模板。 Configuration配置Drain3 is configured using configparser. By default, config filename is drain3.ini in working directory. It can also be configured passing a [TemplateMinerConfig] object to the [TemplateMiner]constructor. 使用[configparser]配置Drain3。默认情况下,工作目录下的config filename为’ drain3.ini '。它也可以通过将一个[TemplateMinerConfig]对象传递给[TemplateMiner]构造函数来配置。 Primary configuration parameters: 主要配置参数: [DRAIN]/sim_th - similarity threshold. if percentage of similar tokens for a log message is below this number, a new log cluster will be created (default 0.4)相似度阈值。如果日志消息的类似令牌的百分比低于这个数字,则将创建一个新的日志集群(默认为0.4)。[DRAIN]/depth - max depth levels of log clusters. Minimum is 2. (default 4)日志集群的最大深度级别。最低是2。(默认4)[DRAIN]/max_children - max number of children of an internal node (default 100)内部节点的最大子节点数(默认100)[DRAIN]/max_clusters - max number of tracked clusters (unlimited by default). When this number is reached, model starts replacing old clusters with a new ones according to the LRU cache eviction policy.可跟踪集群的最大数量(默认无限制)。当达到这个数量时,模型开始根据LRU缓存回收策略用新的集群替换旧的集群。[DRAIN]/extra_delimiters - delimiters to apply when splitting log message into words (in addition to whitespace) ( default none). Format is a Python list e.g. ['_', ':'].将日志消息拆分为单词(除了空格)时应用的分隔符(默认为无)。Format是一个Python列表。['_', ':'].[MASKING]/masking - parameters masking - in json format (default “”) 参数屏蔽- json格式(默认"")[MASKING]/mask_prefix & [MASKING]/mask_suffix - the wrapping of identified parameters in templates. By default, it is respectively. 在模板中包装已标识的参数。默认情况下,分别为’ < ‘和’ > '。[SNAPSHOT]/snapshot_interval_minutes - time interval for new snapshots (default 1) time interval for new snapshots (default 1)[SNAPSHOT]/compress_state - whether to compress the state before saving it. This can be useful when using Kafka persistence.保存前是否压缩状态。这在使用Kafka持久性时非常有用。 Masking屏蔽(掩码)This feature allows masking of specific variable parts in log message with keywords, prior to passing to Drain. A well-defined masking can improve template mining accuracy.该特性允许在传递给Drain之前,用关键字屏蔽日志消息中的特定可变部分。定义良好的掩模可以提高模板挖掘的精度。 Template parameters that do not match any custom mask in the preliminary masking phase are replaced with by Drain core.在初始掩模阶段不匹配任何定制掩模的模板参数将被Drain core替换为’ '。 Use a list of regular expressions in the configuration file with the format {'regex_pattern', 'mask_with'} to set custom masking. For example, following masking instructions in drain3.ini will mask IP addresses and integers: 在配置文件中使用格式为’ {‘regex_pattern’, ‘mask_with’} '的正则表达式列表来设置自定义屏蔽。 [MASKING] masking = [ {"regex_pattern":"((? |

【本文地址】

今日新闻 |

推荐新闻 |