NLTK


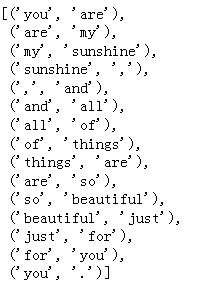

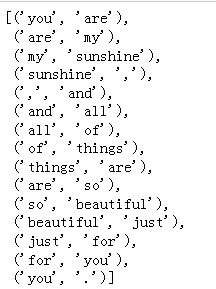

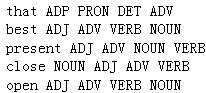

Notes on tagging

1. Part-of-speech tagger

    from nltk import word_tokenize, pos_tag

    text = word_tokenize('And now for something completely different')
    print(pos_tag(text))
    # out: [('And', 'CC'), ('now', 'RB'), ('for', 'IN'), ('something', 'NN'), ('completely', 'RB'), ('different', 'JJ')]

2. Creating tagged tuples with str2tuple()

A list of tagged tokens can be built directly from a string. First split the string so that each word/tag substring can be accessed on its own, then convert each one into a tuple with str2tuple().

    import nltk

    sent = '''
    The/AT grand/JJ jury/NN commented/VBD on/IN a/AT number/NN of/IN
    other/AP topics/NNS
    '''
    [nltk.tag.str2tuple(t) for t in sent.split()]
    # out: [('The', 'AT'), ('grand', 'JJ'), ('jury', 'NN'), ('commented', 'VBD'), ('on', 'IN'), ('a', 'AT'), ('number', 'NN'), ('of', 'IN'), ('other', 'AP'), ('topics', 'NNS')]

    print(nltk.corpus.brown.tagged_words())
    # [('The', 'AT'), ('Fulton', 'NP-TL'), ...]

    # To avoid the complexity of the full tagset, set tagset='universal'
    print(nltk.corpus.brown.tagged_words(tagset='universal'))
    # [('The', 'DET'), ('Fulton', 'NOUN'), ...]

3. nltk.bigrams(tokens) and nltk.trigrams(tokens)

To enumerate only the bigrams or trigrams of a token list, call NLTK's bigrams() or trigrams() directly, as in the following code:

    import nltk

    # named text rather than str, so the built-in str is not shadowed
    text = 'you are my sunshine, and all of things are so beautiful just for you.'
    tokens = nltk.wordpunct_tokenize(text)
    bigram = nltk.bigrams(tokens)
    bigram        # a generator object, not yet a list
    list(bigram)  # materialize the bigrams

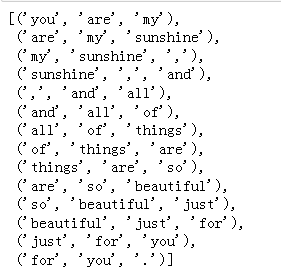

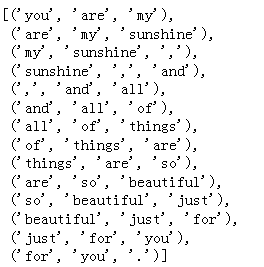

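4. nltk.ngrams(tokens, n)

To enumerate 4-grams or even longer word sequences, use the general function ngrams(tokens, n), where n is the number of words in each n-gram. It offers one uniform interface for every n, as in the following code:

    nltk.ngrams(tokens, 2)        # a lazy iterator
    list(nltk.ngrams(tokens, 2))  # materialize the n-grams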

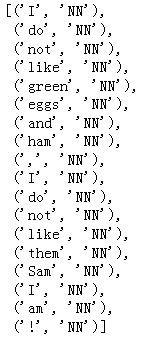
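Section 3 names trigrams() but only demonstrates bigrams(). The following is a minimal sketch, assuming NLTK is installed and using a plain str.split() in place of a tokenizer (an assumption made so that no tokenizer model is needed). It compares trigrams() with ngrams(tokens, 3) and shows that the returned iterator can be consumed only once.

```python
import nltk

# Whitespace tokenization keeps the sketch free of tokenizer models.
tokens = 'you are my sunshine and all of things are so beautiful'.split()

# trigrams() is the n=3 special case of ngrams().
trigram_list = list(nltk.trigrams(tokens))
same_as_ngrams = trigram_list == list(nltk.ngrams(tokens, 3))

# A token list of length k yields k - n + 1 n-grams (here 11 - 4 + 1).
fourgram_list = list(nltk.ngrams(tokens, 4))

# The result is lazy: a second pass over the same object yields nothing.
lazy = nltk.bigrams(tokens)
first_pass = list(lazy)
second_pass = list(lazy)

print(trigram_list[0])     # ('you', 'are', 'my')
print(len(fourgram_list))  # 8
print(second_pass)         # []
```

If the n-grams are needed more than once, store the result of list(...) rather than the iterator itself.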

7. Automatic tagger

    import nltk
    from nltk.corpus import brown

    brown_tagged_sents = brown.tagged_sents(categories='news')
    brown_sents = brown.sents(categories='news')
    tags = [tag for (word, tag) in brown.tagged_words(categories='news')]
    nltk.FreqDist(tags).max()  # the most frequent tag
    # out: 'NN'

8. Default tagger

    raw = 'I do not like green eggs and ham, I do not like them Sam I am!'
    tokens = nltk.word_tokenize(raw)
    default_tagger = nltk.DefaultTagger('NN')  # tags every token as 'NN'
    default_tagger.tag(tokens)

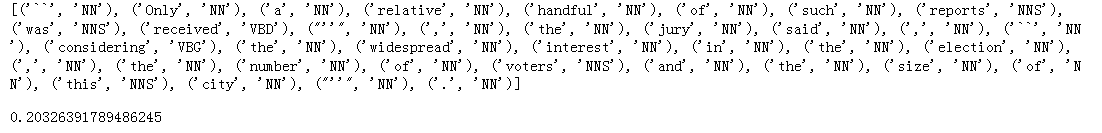
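To make concrete how a default tagger scores, here is a minimal sketch that measures its accuracy by hand. The gold-standard sentence is hypothetical toy data, not taken from the Brown corpus, and the accuracy is computed manually rather than through a tagger method.

```python
import nltk

# Hypothetical toy gold-standard sentence (not from the Brown corpus).
gold = [('the', 'AT'), ('dog', 'NN'), ('chased', 'VBD'), ('a', 'AT'), ('cat', 'NN')]
words = [word for word, _tag in gold]

default_tagger = nltk.DefaultTagger('NN')
predicted = default_tagger.tag(words)

# Accuracy by hand: share of tokens whose predicted (word, tag) pair matches gold.
correct = sum(1 for p, g in zip(predicted, gold) if p == g)
accuracy = correct / len(gold)

print(predicted)
print(accuracy)  # 0.4
```

Only the two real nouns are tagged correctly, which is why a default tagger is more useful as a fallback than on its own.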

9. Regular-expression tagger

    patterns = [
        (r'.*ing$', 'VBG'),                # gerunds
        (r'.*ed$', 'VBD'),                 # simple past
        (r'.*es$', 'VBZ'),                 # 3rd singular present
        (r'.*ould$', 'MD'),                # modals
        (r'.*\'s$', 'NN$'),                # possessive nouns
        (r'.*s$', 'NNS'),                  # plural nouns
        (r'^-?[0-9]+(\.[0-9]+)?$', 'CD'),  # cardinal numbers (dot escaped so it matches a literal '.')
        (r'.*', 'NN'),                     # nouns (default)
    ]
    # Patterns are tried in order; the first one that matches wins.
    regexp_tagger = nltk.RegexpTagger(patterns)
    print(regexp_tagger.tag(brown.sents()[3]))
    # Newer NLTK releases prefer accuracy() over evaluate().
    regexp_tagger.evaluate(brown.tagged_sents(categories='news'))

12. Saving a tagger

    from pickle import dump

    # t2 is an already-trained tagger
    with open('t2.pkl', 'wb') as output:
        dump(t2, output, -1)  # -1 selects the highest pickle protocol

    # Load the tagger
    from pickle import load

    with open('t2.pkl', 'rb') as infile:
        tagger = load(infile)
    tagger.tag(brown_sents[22])

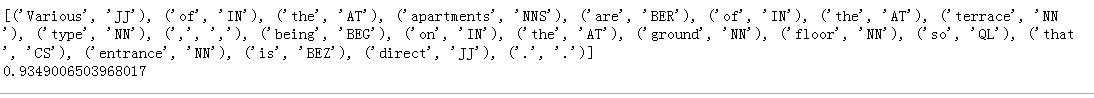

14. Tagging across sentence boundaries

    brown_tagged_sents = brown.tagged_sents(categories='news')
    brown_sents = brown.sents(categories='news')

    # 90% of the sentences for training, the remaining 10% for testing
    size = int(len(brown_tagged_sents) * 0.9)
    train_sents = brown_tagged_sents[:size]
    test_sents = brown_tagged_sents[size:]

    # Each tagger backs off to the previous, more general one
    t0 = nltk.DefaultTagger('NN')
    t1 = nltk.UnigramTagger(train_sents, backoff=t0)
    t2 = nltk.BigramTagger(train_sents, backoff=t1)
    t2.evaluate(test_sents)
    # out: 0.8452108043456593


5. ConditionalFreqDist (conditional frequency distribution): shows how many times each word occurs in each news corpus.
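As a minimal sketch of how such per-corpus counts can be obtained, the example below builds a ConditionalFreqDist from (condition, sample) pairs. The pairs are hypothetical toy data standing in for (genre, word) pairs drawn from a real corpus.

```python
import nltk

# Hypothetical toy data standing in for (genre, word) pairs from a corpus.
genre_word_pairs = [
    ('news', 'can'), ('news', 'will'), ('news', 'will'),
    ('romance', 'could'), ('romance', 'can'), ('romance', 'could'),
]

# Each pair is (condition, sample); counts are kept separately per condition.
cfd = nltk.ConditionalFreqDist(genre_word_pairs)

print(sorted(cfd.conditions()))  # ['news', 'romance']
print(cfd['news']['will'])       # 2
print(cfd['romance']['could'])   # 2
print(cfd['news']['could'])      # 0, unseen samples count as zero

# Print a genre-by-word table of counts.
cfd.tabulate(conditions=['news', 'romance'], samples=['can', 'could', 'will'])
```

With a real corpus, the list of pairs would typically be replaced by a generator that walks over each category and its words.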

6. Looking up the tags of following words: the distribution of part-of-speech tags for the words that come right after 'often'.
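One way to compute this distribution is to pair each tagged word with its successor and count the successor's tag. The sketch below assumes a small hand-written tagged text with universal-style tags; it is illustrative data only, and a real run would read the tagged words from a corpus instead.

```python
import nltk

# Hypothetical toy tagged text; a real run would use a tagged corpus.
tagged_words = [
    ('he', 'PRON'), ('often', 'ADV'), ('runs', 'VERB'), ('.', '.'),
    ('she', 'PRON'), ('often', 'ADV'), ('sings', 'VERB'), ('.', '.'),
    ('it', 'PRON'), ('is', 'VERB'), ('often', 'ADV'), ('cold', 'ADJ'), ('.', '.'),
]

# Pair each tagged word with its successor and keep the successor's tag
# whenever the first word is 'often'.
following_tags = [
    next_tag
    for (word, _tag), (_next_word, next_tag) in nltk.bigrams(tagged_words)
    if word == 'often'
]

fd = nltk.FreqDist(following_tags)
print(fd.most_common())  # [('VERB', 2), ('ADJ', 1)]
```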

"NN" is the most frequent tag, so it is set as the default tag, but a tagger that assigns it to every token performs poorly.

10. Lookup tagger
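A lookup tagger stores the most likely tag for each known word and looks it up at tagging time. The following is a minimal sketch under stated assumptions: the training pairs are hypothetical toy data, and the table is passed to nltk.UnigramTagger through its model argument with a DefaultTagger as backoff for unknown words.

```python
import nltk

# Hypothetical toy training data: (word, tag) pairs.
tagged_words = [
    ('the', 'AT'), ('dog', 'NN'), ('the', 'AT'), ('run', 'VB'),
    ('run', 'NN'), ('run', 'VB'), ('dog', 'NN'),
]

# For each word, remember its most frequent tag.
cfd = nltk.ConditionalFreqDist(tagged_words)
likely_tags = {word: cfd[word].max() for word in cfd.conditions()}

# UnigramTagger accepts a ready-made word-to-tag table through `model`;
# words missing from the table fall back to the default tagger.
lookup_tagger = nltk.UnigramTagger(model=likely_tags,
                                   backoff=nltk.DefaultTagger('NN'))

result = lookup_tagger.tag(['the', 'dog', 'run', 'fast'])
print(result)
# [('the', 'AT'), ('dog', 'NN'), ('run', 'VB'), ('fast', 'NN')]
```

Here 'run' receives 'VB' because that tag is seen twice against one 'NN', and the unknown word 'fast' is handled by the backoff.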

11. N-gram tagging
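An n-gram tagger picks a tag from the current word together with the tags of the preceding n-1 words. The sketch below trains a BigramTagger without any backoff on hypothetical toy sentences, to illustrate how a single unseen word leaves both itself and the following word untagged.

```python
import nltk

# Hypothetical toy training set of tagged sentences.
train_sents = [
    [('the', 'AT'), ('dog', 'NN'), ('barks', 'VBZ')],
    [('the', 'AT'), ('cat', 'NN'), ('sleeps', 'VBZ')],
]

# A bigram tagger conditions on the current word plus the previous tag.
bigram_tagger = nltk.BigramTagger(train_sents)

# Every (previous tag, word) context here was seen in training.
seen = bigram_tagger.tag(['the', 'dog', 'barks'])

# 'bird' is unknown, so it gets None; 'barks' then has the unseen
# context (None, 'barks') and also gets None.
unseen = bigram_tagger.tag(['the', 'bird', 'barks'])

print(seen)    # [('the', 'AT'), ('dog', 'NN'), ('barks', 'VBZ')]
print(unseen)  # [('the', 'AT'), ('bird', None), ('barks', None)]
```

This sparse-data behaviour is the usual motivation for giving an n-gram tagger a backoff tagger.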

13. Combining taggers