Spring Boot + MyBatis with a Druid connection pool: integrating MySQL and Hive as dual data sources and packaging the project


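Contents

- Preparation
- application.yml
- Common configuration classes
- MySQL configuration class
- Hive configuration class
- DAO layer
- Mapper interfaces
- Mapper.xml
- Service layer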

Preparation

Maven dependencies, including MyBatis, Spring Boot, the big-data (Hive/Hadoop) connectors, the MySQL driver, Druid and so on. The Hadoop artifacts are CDH builds, so the Cloudera repository is added as well:

```xml
<repositories>
    <repository>
        <id>cloudera</id>
        <url>https://repository.cloudera.com/artifactory/cloudera-repos/</url>
    </repository>
</repositories>

<dependencies>
    <dependency>
        <groupId>org.mybatis.spring.boot</groupId>
        <artifactId>mybatis-spring-boot-starter</artifactId>
        <version>2.1.0</version>
    </dependency>
    <dependency>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter-web</artifactId>
    </dependency>
    <dependency>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter-web-services</artifactId>
    </dependency>
    <dependency>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter-webflux</artifactId>
    </dependency>
    <dependency>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-configuration-processor</artifactId>
        <optional>true</optional>
    </dependency>
    <dependency>
        <groupId>org.springframework</groupId>
        <artifactId>spring-beans</artifactId>
    </dependency>
    <dependency>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter-test</artifactId>
        <scope>test</scope>
    </dependency>
    <dependency>
        <groupId>mysql</groupId>
        <artifactId>mysql-connector-java</artifactId>
        <scope>runtime</scope>
        <version>8.0.13</version>
    </dependency>
    <dependency>
        <groupId>org.apache.hive</groupId>
        <artifactId>hive-jdbc</artifactId>
        <version>1.1.0</version>
        <exclusions>
            <exclusion>
                <groupId>org.eclipse.jetty.aggregate</groupId>
                <artifactId>*</artifactId>
            </exclusion>
        </exclusions>
    </dependency>
    <dependency>
        <groupId>org.apache.hadoop</groupId>
        <artifactId>hadoop-common</artifactId>
        <version>2.6.0-cdh5.15.2</version>
    </dependency>
    <dependency>
        <groupId>org.apache.hadoop</groupId>
        <artifactId>hadoop-mapreduce-client-core</artifactId>
        <version>2.6.0-cdh5.15.2</version>
    </dependency>
    <dependency>
        <groupId>org.apache.hadoop</groupId>
        <artifactId>hadoop-mapreduce-client-common</artifactId>
        <version>2.6.0-cdh5.15.2</version>
    </dependency>
    <dependency>
        <groupId>org.apache.hadoop</groupId>
        <artifactId>hadoop-hdfs</artifactId>
        <version>2.6.0-cdh5.15.2</version>
    </dependency>
    <dependency>
        <groupId>com.alibaba</groupId>
        <artifactId>druid</artifactId>
        <version>1.0.29</version>
    </dependency>
    <dependency>
        <groupId>com.alibaba</groupId>
        <artifactId>druid-spring-boot-starter</artifactId>
        <version>1.1.10</version>
    </dependency>
</dependencies>

<build>
    <plugins>
        <plugin>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-maven-plugin</artifactId>
            <executions>
                <execution>
                    <goals>
                        <goal>repackage</goal>
                    </goals>
                </execution>
            </executions>
        </plugin>
        <plugin>
            <groupId>org.apache.maven.plugins</groupId>
            <artifactId>maven-surefire-plugin</artifactId>
            <version>2.12.3</version>
        </plugin>
        <plugin>
            <groupId>org.apache.maven.plugins</groupId>
            <artifactId>maven-compiler-plugin</artifactId>
            <version>3.0</version>
        </plugin>
    </plugins>
    <resources>
        <resource>
            <directory>src/main/resources</directory>
            <includes>
                <include>**/*.properties</include>
                <include>**/*.yml</include>
                <include>**/*.xml</include>
            </includes>
            <filtering>false</filtering>
        </resource>
    </resources>
</build>
```

application.yml

```yaml
spring:
  datasource:
    mysqlMain: # data source 1: MySQL
      type: com.alibaba.druid.pool.DruidDataSource
      jdbc-url: jdbc:mysql://0.0.0.0:3306/analysis?characterEncoding=UTF-8&useUnicode=true&serverTimezone=GMT%2B8
      username: root
      password: root
      driver-class-name: com.mysql.cj.jdbc.Driver
    hive: # data source 2: Hive
      jdbc-url: jdbc:hive2://0.0.0.0:10000/iot
      username: hive
      password: hive
      driver-class-name: org.apache.hive.jdbc.HiveDriver
      type: com.alibaba.druid.pool.DruidDataSource
    common-config: # shared pool settings, applied to every data source
      initialSize: 1
      minIdle: 1
      maxIdle: 5
      maxActive: 50
      maxWait: 10000
      timeBetweenEvictionRunsMillis: 10000
      minEvictableIdleTimeMillis: 300000
      validationQuery: select 'x'
      testWhileIdle: true
      testOnBorrow: false
      testOnReturn: false
      poolPreparedStatements: true
      maxOpenPreparedStatements: 20
      filters: stat
```

Two data sources (Hive and MySQL) are configured here with their JDBC connection strings; note that the Hive URL uses the `hive2` scheme. The Druid pool settings are also defined here so that the pool configuration classes can read them.

Common configuration classes

The first class reads the Hive and MySQL connection settings. The maps are typed `Map<String, String>` so that `get("jdbc-url")` returns a `String`:

```java
import lombok.Data;
import org.springframework.boot.context.properties.ConfigurationProperties;

import java.util.Map;

@Data
@ConfigurationProperties(prefix = DataSourceProperties.DS, ignoreUnknownFields = false)
public class DataSourceProperties {
    final static String DS = "spring.datasource";

    // Bound from spring.datasource.mysqlMain / .hive / .common-config
    private Map<String, String> mysqlMain;
    private Map<String, String> hive;
    private Map<String, String> commonConfig;
}
```

The second class reads the Druid pool settings:

```java
import lombok.Data;
import org.springframework.boot.context.properties.ConfigurationProperties;

@Data
@ConfigurationProperties(prefix = DataSourceCommonProperties.DS, ignoreUnknownFields = false)
public class DataSourceCommonProperties {
    final static String DS = "spring.datasource.common-config";

    private int initialSize = 10;
    private int minIdle;
    private int maxIdle;
    private int maxActive;
    private int maxWait;
    private int timeBetweenEvictionRunsMillis;
    private int minEvictableIdleTimeMillis;
    private String validationQuery;
    private boolean testWhileIdle;
    private boolean testOnBorrow;
    private boolean testOnReturn;
    private boolean poolPreparedStatements;
    private int maxOpenPreparedStatements;
    private String filters;
    private String mapperLocations;
    private String typeAliasPackage;
}
```

MySQL configuration class

The `@MapperScan` path is explained in the DAO layer section.

```java
import com.alibaba.druid.pool.DruidDataSource;
import com.xxxx.xxxx.Config.DataSourceCommonProperties;
import com.xxxx.xxxx.Config.DataSourceProperties;
import lombok.extern.log4j.Log4j2;
import org.apache.ibatis.session.SqlSessionFactory;
import org.mybatis.spring.SqlSessionFactoryBean;
import org.mybatis.spring.SqlSessionTemplate;
import org.mybatis.spring.annotation.MapperScan;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.beans.factory.annotation.Qualifier;
import org.springframework.boot.context.properties.EnableConfigurationProperties;
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Configuration;
import org.springframework.context.annotation.Primary;
import org.springframework.core.io.support.PathMatchingResourcePatternResolver;

import javax.sql.DataSource;
import java.sql.SQLException;

@Configuration
@MapperScan(basePackages = "com.xxxx.xxxx.Dao.db1", sqlSessionFactoryRef = "db1SqlSessionFactory")
@Log4j2
@EnableConfigurationProperties({DataSourceProperties.class, DataSourceCommonProperties.class})
public class MysqlConfig {

    @Autowired
    private DataSourceProperties dataSourceProperties;

    @Autowired
    private DataSourceCommonProperties dataSourceCommonProperties;

    // Mark this as the primary data source
    @Primary
    @Bean("db1DataSource")
    public DataSource getDb1DataSource() {
        DruidDataSource datasource = new DruidDataSource();
        // Connection settings for this data source
        datasource.setUrl(dataSourceProperties.getMysqlMain().get("jdbc-url"));
        datasource.setUsername(dataSourceProperties.getMysqlMain().get("username"));
        datasource.setPassword(dataSourceProperties.getMysqlMain().get("password"));
        datasource.setDriverClassName(dataSourceProperties.getMysqlMain().get("driver-class-name"));
        // Shared pool settings
        datasource.setInitialSize(dataSourceCommonProperties.getInitialSize());
        datasource.setMinIdle(dataSourceCommonProperties.getMinIdle());
        datasource.setMaxActive(dataSourceCommonProperties.getMaxActive());
        datasource.setMaxWait(dataSourceCommonProperties.getMaxWait());
        datasource.setTimeBetweenEvictionRunsMillis(dataSourceCommonProperties.getTimeBetweenEvictionRunsMillis());
        datasource.setMinEvictableIdleTimeMillis(dataSourceCommonProperties.getMinEvictableIdleTimeMillis());
        datasource.setValidationQuery(dataSourceCommonProperties.getValidationQuery());
        datasource.setTestWhileIdle(dataSourceCommonProperties.isTestWhileIdle());
        datasource.setTestOnBorrow(dataSourceCommonProperties.isTestOnBorrow());
        datasource.setTestOnReturn(dataSourceCommonProperties.isTestOnReturn());
        datasource.setPoolPreparedStatements(dataSourceCommonProperties.isPoolPreparedStatements());
        // Apply maxOpenPreparedStatements, which is configured but otherwise unused
        datasource.setMaxPoolPreparedStatementPerConnectionSize(dataSourceCommonProperties.getMaxOpenPreparedStatements());
        try {
            datasource.setFilters(dataSourceCommonProperties.getFilters());
        } catch (SQLException e) {
            log.error("Druid configuration initialization filter error.", e);
        }
        return datasource;
    }

    // Create the SqlSessionFactory bean
    @Primary
    @Bean("db1SqlSessionFactory")
    public SqlSessionFactory db1SqlSessionFactory(@Qualifier("db1DataSource") DataSource dataSource) throws Exception {
        SqlSessionFactoryBean bean = new SqlSessionFactoryBean();
        bean.setDataSource(dataSource);
        // The mapper XML location must be set, otherwise calls fail with a "no statement" error
        // (the same error can also mean the namespace in the mapper XML does not match the interface's package path)
        bean.setMapperLocations(new PathMatchingResourcePatternResolver().getResources("classpath*:Mapper/db1/*.xml"));
        return bean.getObject();
    }

    // Create the SqlSessionTemplate bean
    @Primary
    @Bean("db1SqlSessionTemplate")
    public SqlSessionTemplate db1SqlSessionTemplate(@Qualifier("db1SqlSessionFactory") SqlSessionFactory sqlSessionFactory) {
        return new SqlSessionTemplate(sqlSessionFactory);
    }
}
```

Hive configuration class

```java
import com.alibaba.druid.pool.DruidDataSource;
import com.xxxx.xxxx.Config.DataSourceCommonProperties;
import com.xxxx.xxxx.Config.DataSourceProperties;
import lombok.extern.log4j.Log4j2;
import org.apache.ibatis.session.SqlSessionFactory;
import org.mybatis.spring.SqlSessionFactoryBean;
import org.mybatis.spring.SqlSessionTemplate;
import org.mybatis.spring.annotation.MapperScan;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.beans.factory.annotation.Qualifier;
import org.springframework.boot.context.properties.EnableConfigurationProperties;
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Configuration;
import org.springframework.core.io.support.PathMatchingResourcePatternResolver;

import javax.sql.DataSource;
import java.sql.SQLException;

@Configuration
@MapperScan(basePackages = "com.xxxx.xxxx.Dao.db2", sqlSessionFactoryRef = "db2SqlSessionFactory")
@Log4j2
@EnableConfigurationProperties({DataSourceProperties.class, DataSourceCommonProperties.class})
public class HiveConfig {

    @Autowired
    private DataSourceProperties dataSourceProperties;

    @Autowired
    private DataSourceCommonProperties dataSourceCommonProperties;

    @Bean("db2DataSource")
    public DataSource getDb2DataSource() {
        DruidDataSource datasource = new DruidDataSource();
        // Connection settings for this data source (password added to match application.yml)
        datasource.setUrl(dataSourceProperties.getHive().get("jdbc-url"));
        datasource.setUsername(dataSourceProperties.getHive().get("username"));
        datasource.setPassword(dataSourceProperties.getHive().get("password"));
        datasource.setDriverClassName(dataSourceProperties.getHive().get("driver-class-name"));
        // Shared pool settings
        datasource.setInitialSize(dataSourceCommonProperties.getInitialSize());
        datasource.setMinIdle(dataSourceCommonProperties.getMinIdle());
        datasource.setMaxActive(dataSourceCommonProperties.getMaxActive());
        datasource.setMaxWait(dataSourceCommonProperties.getMaxWait());
        datasource.setTimeBetweenEvictionRunsMillis(dataSourceCommonProperties.getTimeBetweenEvictionRunsMillis());
        datasource.setMinEvictableIdleTimeMillis(dataSourceCommonProperties.getMinEvictableIdleTimeMillis());
        datasource.setValidationQuery(dataSourceCommonProperties.getValidationQuery());
        datasource.setTestWhileIdle(dataSourceCommonProperties.isTestWhileIdle());
        datasource.setTestOnBorrow(dataSourceCommonProperties.isTestOnBorrow());
        datasource.setTestOnReturn(dataSourceCommonProperties.isTestOnReturn());
        datasource.setPoolPreparedStatements(dataSourceCommonProperties.isPoolPreparedStatements());
        datasource.setMaxPoolPreparedStatementPerConnectionSize(dataSourceCommonProperties.getMaxOpenPreparedStatements());
        try {
            datasource.setFilters(dataSourceCommonProperties.getFilters());
        } catch (SQLException e) {
            log.error("Druid configuration initialization filter error.", e);
        }
        return datasource;
    }

    @Bean("db2SqlSessionFactory")
    public SqlSessionFactory db2SqlSessionFactory(@Qualifier("db2DataSource") DataSource dataSource) throws Exception {
        SqlSessionFactoryBean bean = new SqlSessionFactoryBean();
        bean.setDataSource(dataSource);
        // The mapper XML location must be set, otherwise calls fail with a "no statement" error
        // (the same error can also mean the namespace in the mapper XML does not match the interface's package path)
        // The classpath pattern for mapper.xml must not contain spaces
        bean.setMapperLocations(new PathMatchingResourcePatternResolver().getResources("classpath*:Mapper/db2/*.xml"));
        return bean.getObject();
    }

    @Bean("db2SqlSessionTemplate")
    public SqlSessionTemplate db2SqlSessionTemplate(@Qualifier("db2SqlSessionFactory") SqlSessionFactory sqlSessionFactory) {
        return new SqlSessionTemplate(sqlSessionFactory);
    }
}
```

DAO layer

The mapper interface layer is shown in the figure. Its packages are the ones scanned by `@MapperScan`, which registers the mappers in the Spring container: mappers under `com.xxxx.xxxx.Dao.db1` use the MySQL `db1SqlSessionFactory` (XML in `Mapper/db1/`), and mappers under `com.xxxx.xxxx.Dao.db2` use the Hive `db2SqlSessionFactory` (XML in `Mapper/db2/`).

Service layer

Interface:

```java
import java.util.List;

public interface GetData {
    List findByStartEndTime(String startTime, String endTime);

    List findByHive(String startTime, String endTime);
}
```

Implementation class:

```java
import lombok.extern.slf4j.Slf4j;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.stereotype.Service;

import java.util.List;

@Service
@Slf4j
@SuppressWarnings("all")
public class SectionTempAlarmServiceImpl implements GetData {

    @Autowired
    private MySQLMapper mySQLMapper; // MySQL mapper

    @Autowired
    private HiveMapper hiveMapper; // Hive mapper

    @Override
    public List findByStartEndTime(String startTime, String endTime) {
        List data = mySQLMapper.findByStartEndTime(startTime, endTime);
        return data;
    }

    @Override
    public List findByHive(String startTime, String endTime) {
        long currentTimeMillis = System.currentTimeMillis();
        List allHiveData = hiveMapper.findByHive(startTime, endTime);
        long queryTimeMills = System.currentTimeMillis();
        // Log in milliseconds; integer division by 1000 would print 0 for sub-second queries
        log.info("select from hive cost: {} ms", queryTimeMills - currentTimeMillis);
        return allHiveData;
    }
}
```

In testing, a plain `mvn package` was all that was needed to build the package.
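Both configuration classes read the connection settings with calls such as `get("jdbc-url")`, so a misspelled or missing key in application.yml only surfaces later, as a `null` handed to `DruidDataSource`. A minimal fail-fast check is sketched here, assuming Java 8+. `DataSourceMapCheck` and its methods are hypothetical names, not part of the original project, and the list of required keys is an assumption (password is treated as optional).

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.List;
import java.util.Map;

// Hypothetical fail-fast check (not part of the original project) for the
// maps bound from spring.datasource.mysqlMain and spring.datasource.hive.
final class DataSourceMapCheck {

    // Keys that MysqlConfig/HiveConfig read with Map.get(...); password is
    // treated as optional in this sketch (an assumption).
    static final List<String> REQUIRED_KEYS = Collections.unmodifiableList(
            Arrays.asList("jdbc-url", "username", "driver-class-name"));

    private DataSourceMapCheck() {
    }

    // Returns the required keys that are absent or blank, in REQUIRED_KEYS order.
    static List<String> missingKeys(Map<String, String> props) {
        List<String> missing = new ArrayList<>();
        for (String key : REQUIRED_KEYS) {
            String value = (props == null) ? null : props.get(key);
            if (value == null || value.trim().isEmpty()) {
                missing.add(key);
            }
        }
        return missing;
    }

    // HiveServer2 expects the jdbc:hive2:// scheme, not jdbc:hive://.
    static boolean isHiveServer2Url(String url) {
        return url != null && url.startsWith("jdbc:hive2://");
    }

    // Throws IllegalStateException naming the data source and its missing keys.
    static void validate(String name, Map<String, String> props) {
        List<String> missing = missingKeys(props);
        if (!missing.isEmpty()) {
            throw new IllegalStateException(
                    "spring.datasource." + name + " is missing " + missing);
        }
    }
}
```

One way to use it would be to call `DataSourceMapCheck.validate("hive", dataSourceProperties.getHive())` at the top of `getDb2DataSource()` and to check `isHiveServer2Url(...)` on the Hive URL, so a bad configuration stops startup with a readable message.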
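Which data source a mapper uses is decided purely by its package: each `@MapperScan` ties its `basePackages` (subpackages included) to one `sqlSessionFactoryRef`, so a mapper placed in the wrong package silently talks to the other database. The pure-Java model that follows only illustrates that mapping, assuming the scanned packages do not overlap as in this project. It is not how MyBatis-Spring is implemented, and `MapperRouting` plus the mapper class names used with it are hypothetical.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Illustrative model only, not MyBatis-Spring code: each
// @MapperScan(basePackages = ..., sqlSessionFactoryRef = ...) binds every
// mapper interface under its base package (and subpackages) to one factory.
final class MapperRouting {

    // base package -> SqlSessionFactory bean name, in registration order
    private final Map<String, String> factoryByPackage = new LinkedHashMap<>();

    // Mirrors one @MapperScan declaration.
    MapperRouting scan(String basePackage, String sqlSessionFactoryRef) {
        factoryByPackage.put(basePackage, sqlSessionFactoryRef);
        return this;
    }

    // Returns the factory bean name for a mapper's fully qualified class name,
    // or null if no scanned package covers it. Assumes packages do not overlap.
    String factoryFor(String mapperClassName) {
        for (Map.Entry<String, String> entry : factoryByPackage.entrySet()) {
            // Match on a "." boundary so "Dao.db1" does not cover "Dao.db10".
            if (mapperClassName.startsWith(entry.getKey() + ".")) {
                return entry.getValue();
            }
        }
        return null;
    }
}
```

With `scan("com.xxxx.xxxx.Dao.db1", "db1SqlSessionFactory")` and `scan("com.xxxx.xxxx.Dao.db2", "db2SqlSessionFactory")` registered, a mapper such as the hypothetical `com.xxxx.xxxx.Dao.db2.HiveMapper` resolves to the Hive factory.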
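If several Hive queries need timing, the start/stop bookkeeping in `findByHive` could be pulled into a small helper. A sketch, assuming Java 8+; `QueryTimer` and `Timed` are hypothetical names. It uses `System.nanoTime()`, which is monotonic and meant for measuring elapsed time, unlike `System.currentTimeMillis()`.

```java
import java.util.concurrent.TimeUnit;
import java.util.function.Supplier;

// Hypothetical helper: runs a call and reports how long it took in milliseconds.
final class QueryTimer {

    // Result of a timed call: the returned value plus the elapsed time in ms.
    static final class Timed<T> {
        final T value;
        final long elapsedMillis;

        Timed(T value, long elapsedMillis) {
            this.value = value;
            this.elapsedMillis = elapsedMillis;
        }
    }

    private QueryTimer() {
    }

    // Measures call.get() with the monotonic nanosecond clock.
    static <T> Timed<T> time(Supplier<T> call) {
        long start = System.nanoTime();
        T value = call.get();
        long elapsed = TimeUnit.NANOSECONDS.toMillis(System.nanoTime() - start);
        return new Timed<>(value, elapsed);
    }
}
```

In the service it might be used as `QueryTimer.Timed<List> result = QueryTimer.time(() -> hiveMapper.findByHive(startTime, endTime));`, followed by logging `result.elapsedMillis` and returning `result.value`.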


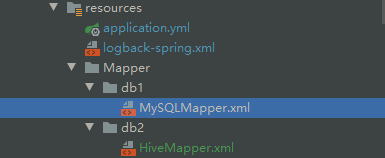

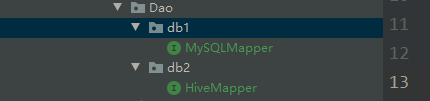

mapper.xml: see the figure.
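The configuration classes note that a "no statement" error can also mean the mapper XML's `namespace` does not match the interface's package path. One quick check that runs without Spring compares the `namespace` attribute of a mapper XML with the fully qualified interface name. This is a sketch using the JDK's built-in DOM parser; `MapperNamespaceCheck` is a hypothetical name, and the feature flag that skips loading the DTD assumes the JDK's default Xerces-based parser.

```java
import java.io.ByteArrayInputStream;
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import javax.xml.parsers.DocumentBuilderFactory;
import javax.xml.parsers.ParserConfigurationException;
import org.w3c.dom.Element;
import org.xml.sax.SAXException;

// Hypothetical helper: reads <mapper namespace="..."> from a MyBatis mapper XML
// and compares it with the mapper interface's fully qualified name.
final class MapperNamespaceCheck {

    private MapperNamespaceCheck() {
    }

    // Returns the namespace attribute of the root <mapper> element ("" if absent).
    static String namespaceOf(String mapperXml) {
        try {
            DocumentBuilderFactory factory = DocumentBuilderFactory.newInstance();
            // Do not fetch the DTD named in the DOCTYPE (no network access needed).
            factory.setFeature(
                    "http://apache.org/xml/features/nonvalidating/load-external-dtd", false);
            Element root = factory.newDocumentBuilder()
                    .parse(new ByteArrayInputStream(mapperXml.getBytes(StandardCharsets.UTF_8)))
                    .getDocumentElement();
            if (!"mapper".equals(root.getTagName())) {
                throw new IllegalArgumentException("root element is not <mapper>");
            }
            return root.getAttribute("namespace");
        } catch (ParserConfigurationException | SAXException | IOException e) {
            throw new IllegalArgumentException("cannot parse mapper XML", e);
        }
    }

    // True if the XML's namespace equals the given interface name.
    static boolean matches(String mapperXml, String mapperInterfaceName) {
        return mapperInterfaceName.equals(namespaceOf(mapperXml));
    }
}
```

Running `matches(...)` over every file under `Mapper/db1/` and `Mapper/db2/` against the interfaces in the corresponding `Dao` packages would catch a mismatch before the application starts.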
