基于LSTM encoder |

您所在的位置:网站首页 › 21的翻译的英语 › 基于LSTM encoder |

基于LSTM encoder

|

前言

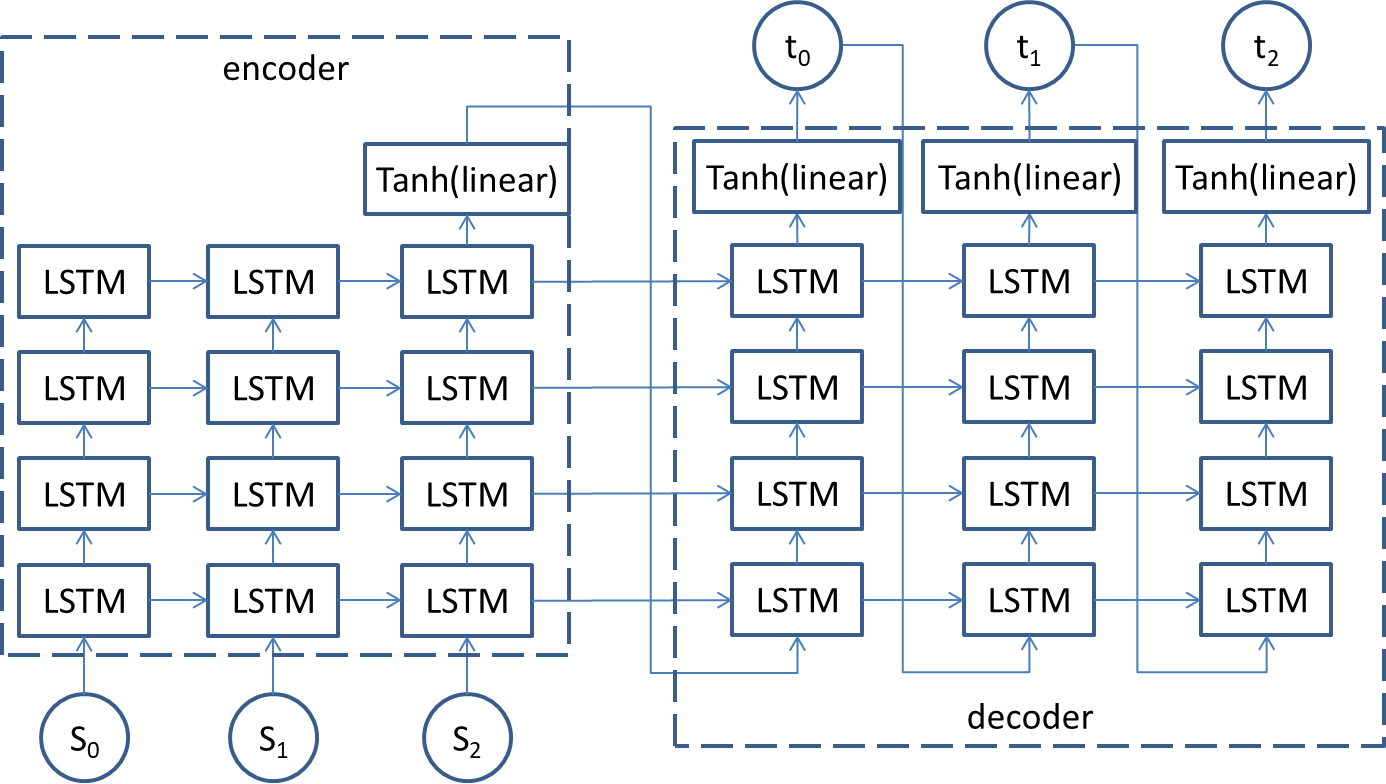

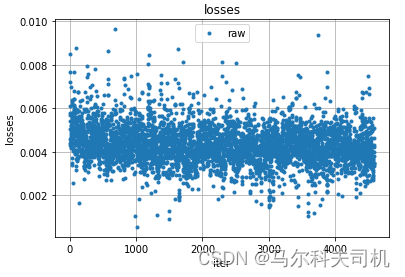

神经网络机器翻译(NMT, neuro machine tranlation)是AIGC发展道路上的一个重要应用。正是对这个应用的研究,发展出了注意力机制,在此基础上产生了AIGC领域的霸主transformer。我们今天先把注意力机制这些东西放一边,介绍一个对机器翻译起到重要里程碑作用的模型:LSTM encoder-decoder模型(sutskever et al. 2014)。根据这篇文章的描述,这个模型不需要特别的优化,就可以取得超过其他NMT模型的效果,所以我们也来动手实现一下,看看是不是真的有这么厉害。 模型原文作者采用了4层LSTM模型,每层有1000个单元(每个单元有输入门,输出门,遗忘门和细胞状态更新共计4组状态),采用1000维单词向量,纯RNN部分,就有64M参数。同时,在encoder的输出,和decoder的输出后放一个长度为80000的softmax层(因为论文的输出字典长80000),用于softmax的参数量为320M。整个模型共计320M + 64M = 384M。该模型用了8GPU的服务器训练了10天。 模型大概长这样: 笔者的模型看起来像这样: 该模型的主要参数如下: 词向量维度:300 LSTM隐藏层个数:900 LSTM层数:4 linear层输入:900 linear层输出:300 模型参数个数如下为: ========================================================================================== Layer (type:depth-idx) Output Shape Param # ========================================================================================== Seq2Seq [1, 11, 300] -- ├─Encoder: 1-1 [1, 300] -- │ └─LSTM: 2-1 [1, 10, 900] 23,788,800 │ └─Linear: 2-2 [1, 10, 300] 270,300 │ └─ReLU: 2-3 [1, 300] -- ├─Decoder: 1-2 [1, 11, 300] -- │ └─LSTM: 2-4 [1, 11, 900] 23,788,800 │ └─Linear: 2-5 [1, 11, 300] 270,300 │ └─ReLU: 2-6 [1, 11, 300] -- ========================================================================================== Total params: 48,118,200 Trainable params: 48,118,200 Non-trainable params: 0 Total mult-adds (M): 500.11 ========================================================================================== Input size (MB): 0.02 Forward/backward pass size (MB): 0.20 Params size (MB): 192.47 Estimated Total Size (MB): 192.70 ==========================================================================================如果大家希望了解LSTM层的23,788,800个参数如何计算出来,可以参考pytorch源码 pytorch/torch/csrc/api/src/nn/modules/rnn.cpp中方法void RNNImplBase::reset()的实现。笔者如果日后有空也可能会写一写。 3 单词向量及语料 3.1 语料先说语料,NMT需要大量的平行语料,语料可以从这里获取。另外有个语料天涯网站大全分享给大家。 3.2 词向量首先需要对句子进行分词,中英文都需要做分词。中文分词工具本例采用jieba,可直接安装。 $ pip install jieba ... $ python Python 3.11.6 (tags/v3.11.6:8b6ee5b, Oct 2 2023, 14:57:12) [MSC v.1935 64 bit (AMD64)] on win32 Type "help", "copyright", "credits" or "license" for more information. >>> for token in jieba.cut("我爱踢足球!", cut_all=False): ... print(token) ... 我 爱 踢足球 !英文分词采用nltk,安装之后,需要下载一个分词模型。 $ pip install nltk ... $ python Python 3.11.6 (tags/v3.11.6:8b6ee5b, Oct 2 2023, 14:57:12) [MSC v.1935 64 bit (AMD64)] on win32 Type "help", "copyright", "credits" or "license" for more information. >>> import nltk >>> nltk.download("punkt") ... >>> from nltk import word_tokenize >>> word_tokenize('i love you') ['i', 'love', 'you']国内有墙,一般下载不了,所以可以到这里找到punkt文件并下载,解压到~/nltk_data/tokenizers/下边。 3.3 加载语料代码 import xml.etree.ElementTree as ET class TmxHandler(): def __init__(self): self.tag=None self.lang=None self.corpus={} def handleStartTu(self, tag): self.tag=tag self.lang=None self.corpus={} def handleStartTuv(self, tag, attributes): if self.tag == 'tu': if attributes['{http://www.w3.org/XML/1998/namespace}lang']: self.lang=attributes['{http://www.w3.org/XML/1998/namespace}lang'] else: raise Exception('tuv element must has a xml:lang attribute') self.tag = tag else: raise Exception('tuv element must go under tu, not ' + tag) def handleStartSeg(self, tag, elem): if self.tag == 'tuv': self.tag = tag if self.lang: if elem.text: self.corpus[self.lang]=elem.text else: raise Exception('lang must not be none') else: raise Exception('seg element must go under tuv, not ' + tag) def startElement(self, tag, attributes, elem): if tag== 'tu': self.handleStartTu(tag) elif tag == 'tuv': self.handleStartTuv(tag, attributes) elif tag == 'seg': self.handleStartSeg(tag, elem) def endElem(self, tag): if self.tag and self.tag != tag: raise Exception(self.tag + ' could not end with ' + tag) if tag == 'tu': self.tag=None self.lang=None self.corpus={} elif tag == 'tuv': self.tag='tu' self.lang=None elif tag == 'seg': self.tag='tuv' def parse(self, filename): for event, elem in ET.iterparse(filename, events=('start','end')): if event == 'start': self.startElement(elem.tag, elem.attrib, elem) elif event == 'end': if elem.tag=='tu': yield self.corpus self.endElem(elem.tag) 3.4 句子转词向量代码 from gensim.models import KeyedVectors import torch import jieba from nltk import word_tokenize import numpy as np class WordEmbeddingLoader(): def __init__(self): pass def load(self, fname): self.model = KeyedVectors.load_word2vec_format(fname) def get_embeddings(self, word:str): if self.model: try: return self.model.get_vector(word) except(KeyError): return None else: return None def get_scentence_embeddings(self, scent:str, lang:str): embeddings = [] ws = [] if(lang == 'zh'): ws = jieba.cut(scent, cut_all=False) elif lang == 'en': ws = word_tokenize(scent) else: raise Exception('Unsupported language ' + lang) for w in ws: embedding = self.get_embeddings(w.lower()) if embedding is None: embedding = np.zeros(self.model.vector_size) embedding = torch.from_numpy(embedding).float() embeddings.append(embedding.unsqueeze(0)) return torch.cat(embeddings, dim=0) 4 模型代码实现 4.1 encoder import torch.nn as nn class Encoder(nn.Module): def __init__(self, device, embeddings=300, hidden_size=600, num_layers=4): super().__init__() self.device = device self.hidden_layer_size = hidden_size self.n_layers = num_layers self.embedding_size = embeddings self.lstm = nn.LSTM(embeddings, hidden_size, num_layers, batch_first=True) self.linear = nn.Linear(hidden_size, embeddings) self.tanh = nn.Tanh() # init weights for name, param in self.lstm.named_parameters(): if 'bias' in name: nn.init.constant_(param, 0.0) elif 'weight' in name: nn.init.xavier_uniform_(param,gain=0.02) nn.init.xavier_uniform_(self.linear.weight.data,gain=0.25) def forward(self, x): # x: [batch size, seq length, embeddings] # lstm_out: [batch size, x length, hidden size] lstm_out, (hidden, cell) = self.lstm(x) # linear input is the lstm output of the last word lineared = self.linear(lstm_out[:,-1,:].squeeze(1)) out = self.tanh(lineared) # hidden: [n_layer, batch size, hidden size] # cell: [n_layer, batch size, hidden size] return out, hidden, cell 4.2 decoder import torch.nn as nn class Decoder(nn.Module): def __init__(self, device, embedding_size=300, hidden_size=900, num_layers=4): super().__init__() self.device = device self.hidden_layer_size = hidden_size self.n_layers = num_layers self.embedding_size = embedding_size self.lstm = nn.LSTM(embedding_size, hidden_size, num_layers, batch_first=True) self.linear = nn.Linear(hidden_size, embedding_size) self.tanh = nn.Tanh() # init parameter for name, param in self.lstm.named_parameters(): if 'bias' in name: nn.init.constant_(param, 0.0) elif 'weight' in name: nn.init.xavier_uniform_(param,gain=0.02) nn.init.xavier_uniform_(self.linear.weight.data,gain=0.25) def forward(self, x, hidden_in, cell_in): # x: [batch_size, x length, embeddings] # hidden: [n_layers, batch size, hidden size] # cell: [n_layers, batch size, hidden size] # lstm_out: [seq length, batch size, hidden size] lstm_out, (hidden,cell) = self.lstm(x, (hidden_in, cell_in)) # prediction: [seq length, batch size, embeddings] prediction=self.relu(self.linear(lstm_out)) return prediction, hidden, cell 4.3 encoder-decoder接下来把encoder和decoder串联起来。 import torch import encoder as enc import decoder as dec import torch.nn as nn import time class Seq2Seq(nn.Module): def __init__(self, device, embeddings, hiddens, n_layers): super().__init__() self.device = device self.encoder = enc.Encoder(device, embeddings, hiddens, n_layers) self.decoder= dec.Decoder(device, embeddings, hiddens, n_layers) self.embeddings = self.encoder.embedding_size assert self.encoder.n_layers == self.decoder.n_layers, "Number of layers of encoder and decoder must be equal!" assert self.decoder.hidden_layer_size==self.decoder.hidden_layer_size, "Hidden layer size of encoder and decoder must be equal!" # x: [batches, x length, embeddings] # x is the source scentences # y: [batches, y length, embeddings] # y is the target scentences def forward(self, x, y): # encoder_out: [batches, n_layers, embeddings] # hidden, cell: [n layers, batch size, embeddings] encoder_out, hidden, cell = self.encoder(x) # use encoder output as the first word of the decode sequence decoder_input = torch.cat((encoder_out.unsqueeze(0), y), dim=1) # predicted: [batches, y length, embeddings] predicted, hidden, cell = self.decoder(decoder_input, hidden, cell) return predicted 5 模型训练 5.1 训练代码 def do_train(model:Seq2Seq, train_set, optimizer, loss_function): step = 0 model.train() # seq: [seq length, embeddings] # labels: [label length, embeddings] for seq, labels in train_set: step = step + 1 # ignore the last word of the label scentence # because it is to be predicted label_input = labels[:-1].unsqueeze(0) # seq_input: [1, seq length, embeddings] seq_input = seq.unsqueeze(0) # y_pred: [1, seq length + 1, embeddings] y_pred = model(seq_input, label_input) # single_loss = loss_function(y_pred.squeeze(0), labels.to(self.device)) single_loss = loss_function(y_pred.squeeze(0), labels) optimizer.zero_grad() single_loss.backward() optimizer.step() print_steps = 100 if print_steps != 0 and step%print_steps==1: print(f'[step: {step} - {time.asctime(time.localtime(time.time()))}] - loss:{single_loss.item():10.8f}') def train(device, model, embedding_loader, corpus_fname, batch_size:int, batches: int): reader = corpus_reader.TmxHandler() loss = torch.nn.MSELoss() # summary(model, input_size=[(1, 10, 300),(1,10,300)]) optimizer = torch.optim.Adam(model.parameters()) generator = reader.parse(corpus_fname) for _b in range(batches): batch = [] try: for _c in range(batch_size): try: corpus = next(generator) if 'en' in corpus and 'zh' in corpus: en = embedding_loader.get_scentence_embeddings(corpus['en'], 'en').to(device) zh = embedding_loader.get_scentence_embeddings(corpus['zh'], 'zh').to(device) batch.append((en,zh)) except (StopIteration): break finally: print(time.localtime()) print("batch: " + str(_b)) do_train(model, batch, optimizer, loss) torch.save(model, "./models/seq2seq_" + str(time.time())) if __name__=="__main__": # device = torch.device('cuda') device = torch.device('cpu') embeddings = 300 hiddens = 900 n_layers = 4 embedding_loader = word2vec.WordEmbeddingLoader() print("loading embedding") # a full vocabulary takes too long to load, a baby vocabulary is used for demo purpose embedding_loader.load("../sgns.merge.word.toy") print("load embedding finished") # if there is an existing model, load the existing model from file # model_fname = "./models/_seq2seq_1698000846.3281412" model_fname = None model = None if not model_fname is None: print('loading model from ' + model_fname) model = torch.load(model_fname, map_location=device) print('model loaded') else: model = Seq2Seq(device, embeddings, hiddens, n_layers).to(device) train(device, model, embedding_loader, "../News-Commentary_v16.tmx", 1000, 100) 5.2 使用CPU进行训练让我们先来体验一下CPU的龟速训练。下图是每100句话的训练输出。每次打印的间隔大约为2-3分钟。 [step: 1 - Thu Oct 26 05:14:13 2023] - loss:0.00952744 [step: 101 - Thu Oct 26 05:17:11 2023] - loss:0.00855174 [step: 201 - Thu Oct 26 05:20:07 2023] - loss:0.00831730 [step: 301 - Thu Oct 26 05:23:09 2023] - loss:0.00032693 [step: 401 - Thu Oct 26 05:25:55 2023] - loss:0.00907284 [step: 501 - Thu Oct 26 05:28:55 2023] - loss:0.00937218 [step: 601 - Thu Oct 26 05:32:00 2023] - loss:0.00823146 5.3 使用GPU进行训练如果把main函数的第一行中的"cpu"改成“cuda”,则可以使用显卡进行训练。笔者使用的是一张GTX1660显卡,打印间隔缩短为15秒。 [step: 1 - Thu Oct 26 06:38:45 2023] - loss:0.00955237 [step: 101 - Thu Oct 26 06:38:50 2023] - loss:0.00844441 [step: 201 - Thu Oct 26 06:38:56 2023] - loss:0.00820994 [step: 301 - Thu Oct 26 06:39:01 2023] - loss:0.00030389 [step: 401 - Thu Oct 26 06:39:06 2023] - loss:0.00896622 [step: 501 - Thu Oct 26 06:39:11 2023] - loss:0.00929985 [step: 601 - Thu Oct 26 06:39:17 2023] - loss:0.00813591 5.4 训练结果训练的损失值显得有些离散(如下图)。 使用SGD优化器之后的训练损失值: 通过调整模型中的其他地方(比如激活函数、各种超参等),也可能使优化效果获得明显的改善或者变差。例如笔者发现用tanh作为激活函数的效果比relu,sigmoid等效果更好。 |

【本文地址】

今日新闻 |

推荐新闻 |

按照现在的算力价格,用8张4090的主机训练每小时要花20多块钱,训练一轮下来需要花费小5000,笔者当然没有这么土豪,所以我们会使用一个参数量小得多的模型,主要为了记录整个搭建过程使用到的工具链和技术。另外,由于笔者使用了一个预训练的词向量库,包含了中英文单词共计128万多条,其中中文90多万,英文30多万,要像论文中一样用一个超大的softmax来预测每个词的概率并不现实,因此先使用一个linear层再加上tanh来简化,加快训练过程,只求能看到收敛。使用tanh而不是其他激活性函数的原因是:经过测试,使用tanh收敛效果是最好的。

按照现在的算力价格,用8张4090的主机训练每小时要花20多块钱,训练一轮下来需要花费小5000,笔者当然没有这么土豪,所以我们会使用一个参数量小得多的模型,主要为了记录整个搭建过程使用到的工具链和技术。另外,由于笔者使用了一个预训练的词向量库,包含了中英文单词共计128万多条,其中中文90多万,英文30多万,要像论文中一样用一个超大的softmax来预测每个词的概率并不现实,因此先使用一个linear层再加上tanh来简化,加快训练过程,只求能看到收敛。使用tanh而不是其他激活性函数的原因是:经过测试,使用tanh收敛效果是最好的。

我们可以对上边的训练结果用一个滑动窗口进行平滑处理,得到下边的图形。

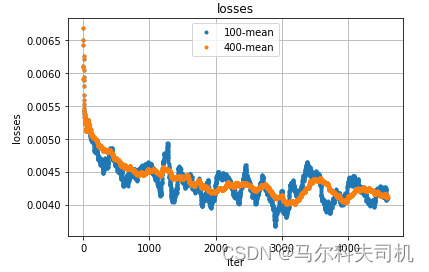

我们可以对上边的训练结果用一个滑动窗口进行平滑处理,得到下边的图形。  通过这张图可以看到明显的收敛过程。这是使用了adam优化器的结果,adam是一个现代优化器,对广泛机器学习任务性能都优于一般优化器。如果我们使用普通的SGD优化器,会怎么样呢?

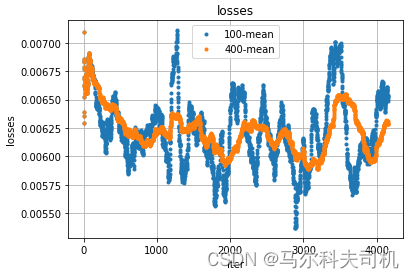

通过这张图可以看到明显的收敛过程。这是使用了adam优化器的结果,adam是一个现代优化器,对广泛机器学习任务性能都优于一般优化器。如果我们使用普通的SGD优化器,会怎么样呢? 优化效果肉眼可见地变差了。

优化效果肉眼可见地变差了。